Compare commits

18 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 18d58ce124 | |
| | b8f8837a8f | |
| | 0c796c8cfc | |
| | b14fbc29b7 | |
| | 6e29f97881 | |
| | a164939161 | |
| | 09ab11ef01 | |
| | ac34edec7f | |
| | 6dd8ffa037 | |
| | eaed3f40a2 | |
| | e48f39375e | |
| | 9b7b651ef9 | |
| | b5623cb9c2 | |
| | 144d12b463 | |
| | fa452f5518 | |
| | a159d21d45 | |
| | 3a00bbf44d | |
| | 9f5e94fa8f | |

`.gitattributes` (vendored, 1 changed line)

```
@@ -3,6 +3,7 @@ backend-python/wkv_cuda_utils/** linguist-vendored
backend-python/get-pip.py linguist-vendored
backend-python/convert_model.py linguist-vendored
backend-python/convert_safetensors.py linguist-vendored
backend-python/convert_pytorch_to_ggml.py linguist-vendored
backend-python/utils/midi.py linguist-vendored
build/** linguist-vendored
finetune/lora/** linguist-vendored
```

`.github/workflows/release.yml` (vendored, 7 changed lines)

```powershell
@@ -65,7 +65,10 @@ jobs:
Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../libs" -Destination "py310/libs" -Recurse
./py310/python -m pip install cyac==1.9
go install github.com/wailsapp/wails/v2/cmd/wails@latest
del ./backend-python/rwkv_pip/cpp/librwkv.dylib
del ./backend-python/rwkv_pip/cpp/librwkv.so
(Get-Content -Path ./backend-golang/app.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/app.go
(Get-Content -Path ./backend-golang/utils.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/utils.go
make
Rename-Item -Path "build/bin/RWKV-Runner.exe" -NewName "RWKV-Runner_windows_x64.exe"
```

```bash
@@ -93,6 +96,8 @@ jobs:
rm ./backend-python/rwkv_pip/rwkv6.pyd
rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd
rm ./backend-python/get-pip.py
rm ./backend-python/rwkv_pip/cpp/librwkv.dylib
rm ./backend-python/rwkv_pip/cpp/rwkv.dll
make
mv build/bin/RWKV-Runner build/bin/RWKV-Runner_linux_x64
```

```bash
@@ -117,6 +122,8 @@ jobs:
rm ./backend-python/rwkv_pip/rwkv6.pyd
rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd
rm ./backend-python/get-pip.py
rm ./backend-python/rwkv_pip/cpp/rwkv.dll
rm ./backend-python/rwkv_pip/cpp/librwkv.so
make
cp build/darwin/Readme_Install.txt build/bin/Readme_Install.txt
cp build/bin/RWKV-Runner.app/Contents/MacOS/RWKV-Runner build/bin/RWKV-Runner_darwin_universal
```
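The Windows job removes the `//go:custom_build windows ` prefix from `backend-golang/app.go` and `backend-golang/utils.go` before it runs `make`. A commented-out line such as `//go:custom_build windows cmd.SysProcAttr.HideWindow = true` therefore becomes live code only in the Windows build. That line appears in the `CmdHelper` hunk of this comparison. `HideWindow` is a Windows-only field of `syscall.SysProcAttr`, which is presumably why the line stays commented elsewhere.

Below is a minimal Python sketch of that text substitution. `enable_windows_lines` is a hypothetical name. The `re.IGNORECASE` flag mirrors PowerShell's case-insensitive `-replace`.

```python
import re

# Prefix that the Windows job in release.yml strips before running `make`.
MARKER = "//go:custom_build windows "


def enable_windows_lines(source: str) -> str:
    """Emulate `(Get-Content f) -replace MARKER, "" | Set-Content f` on a string.

    PowerShell's -replace takes a case-insensitive regular expression, so the
    marker is escaped (it is meant literally) and matched with IGNORECASE.
    """
    return re.sub(re.escape(MARKER), "", source, flags=re.IGNORECASE)


go_snippet = (
    "\tcmd.SysProcAttr = &syscall.SysProcAttr{}\n"
    "\t//go:custom_build windows cmd.SysProcAttr.HideWindow = true\n"
)
print(enable_windows_lines(go_snippet))
```

On the Linux and macOS runners the marker is left in place. The line stays an ordinary `//` comment, so the same sources compile there unchanged.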

`.gitignore` (vendored, 1 changed line)

```
@@ -8,6 +8,7 @@ __pycache__
*.st
*.safetensors
*.bin
*.mid
/config.json
/cache.json
/presets.json
```

```markdown
@@ -1,17 +1,8 @@
## Changes

- add web-rwkv-converter (Safetensors Convert no longer depends on Python) (WebGPU Server 0.3.3)
- model tags classifier
- improve presets interaction
- better state cache
- better customCuda condition
- add python-3.10.11-embed-amd64.zip cnMirror
- always reset to activePreset
- for devices that gpu is not supported, use cpu to merge lora
- RWKV_RESCALE_LAYER 999 for music model
- disable hashed assets
- fix webWails undefined functions
- fix damaged logo
- rwkv.cpp(ggml) support
- allow playing mid with external player
- allow overriding Core API URL
- chore

## Install
```

`README.md` (113 changed lines)

```markdown
@@ -21,7 +21,7 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)
[![MacOS][MacOS-image]][MacOS-url]
[![Linux][Linux-image]][Linux-url]

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Server-Deploy-Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)
[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples) | [MIDI Hardware Input](#MIDI-Input)

[license-image]: http://img.shields.io/badge/license-MIT-blue.svg
```

@@ -57,20 +57,49 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

|

||||

## Features

|

||||

|

||||

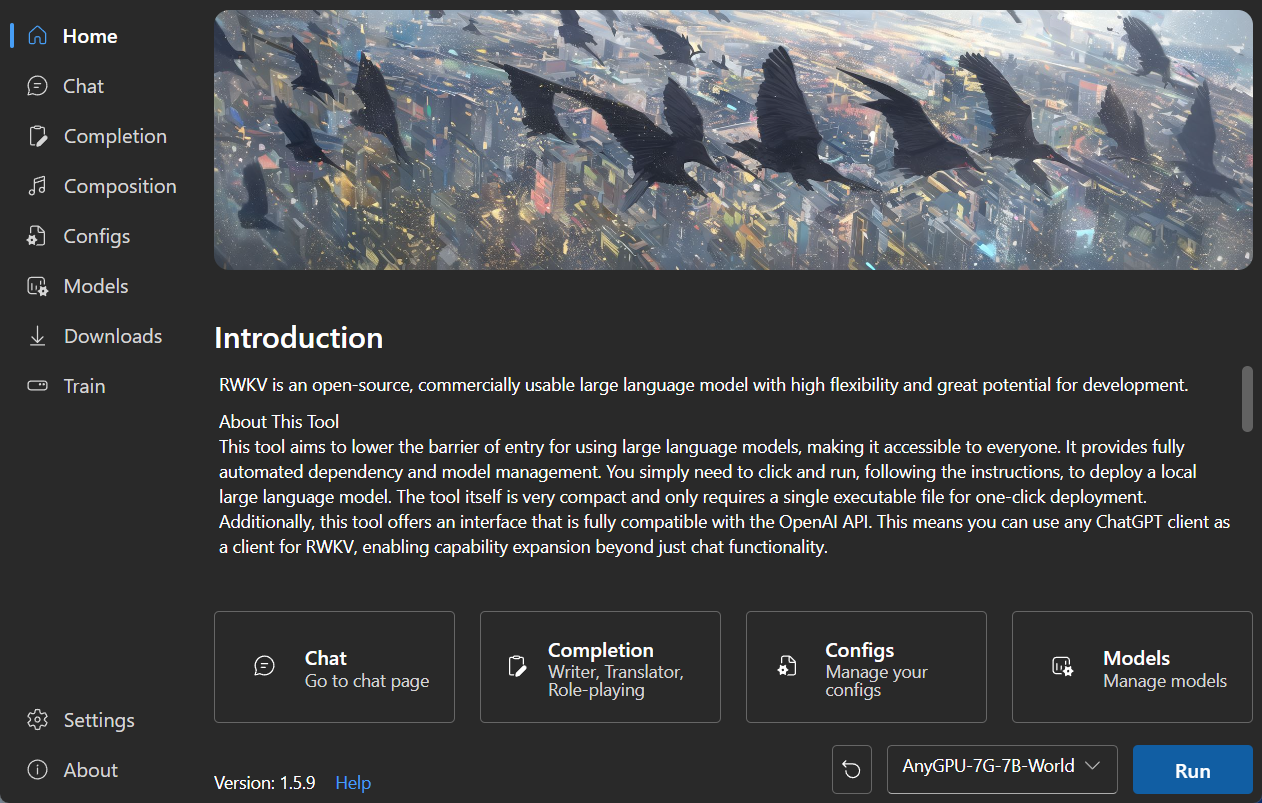

- RWKV model management and one-click startup

|

||||

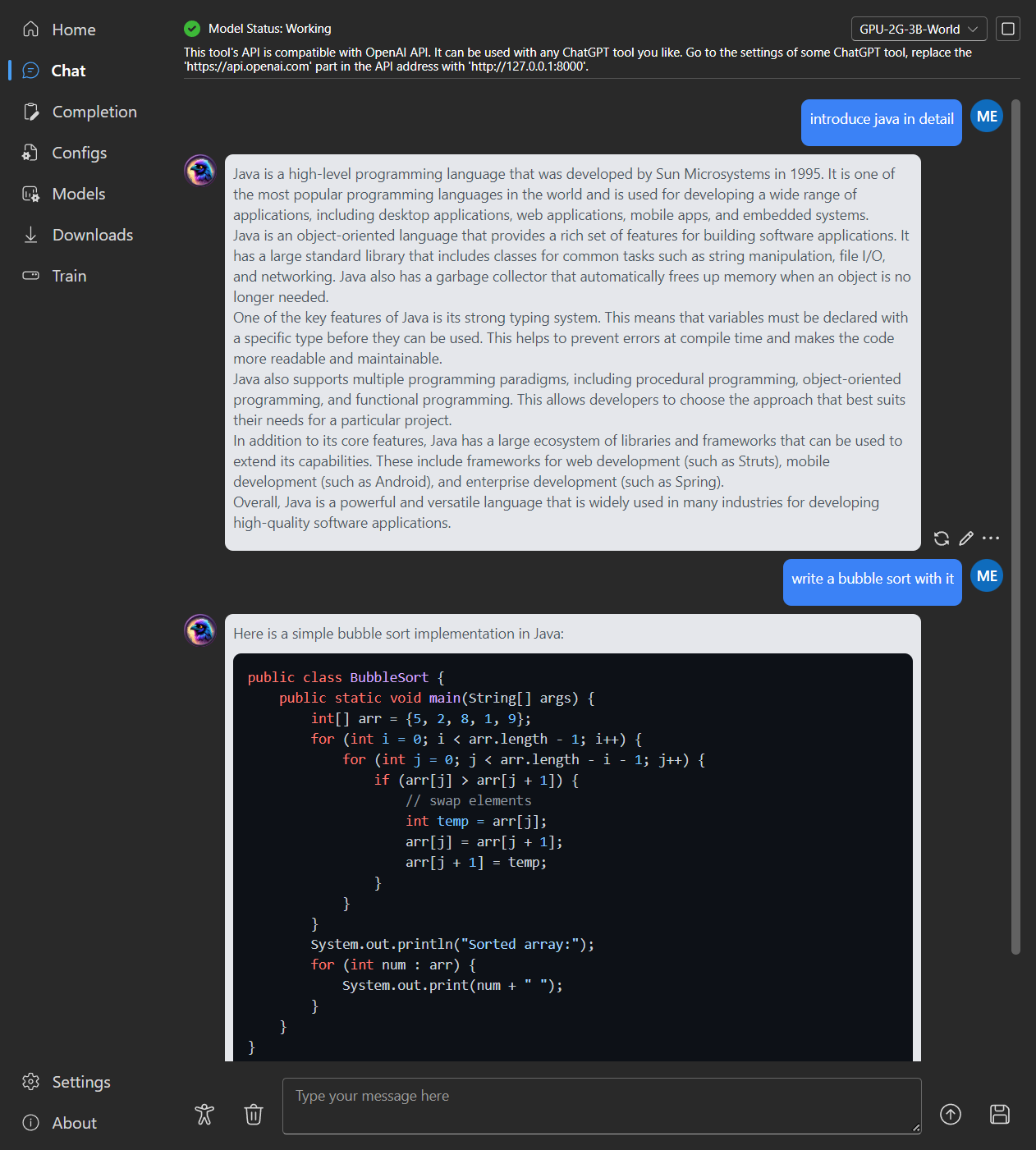

- Fully compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,

|

||||

- RWKV model management and one-click startup.

|

||||

- Front-end and back-end separation, if you don't want to use the client, also allows for separately deploying the

|

||||

front-end service, or the back-end inference service, or the back-end inference service with a WebUI.

|

||||

[Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

- Compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,

|

||||

open http://127.0.0.1:8000/docs to view more details.

|

||||

- Automatic dependency installation, requiring only a lightweight executable program

|

||||

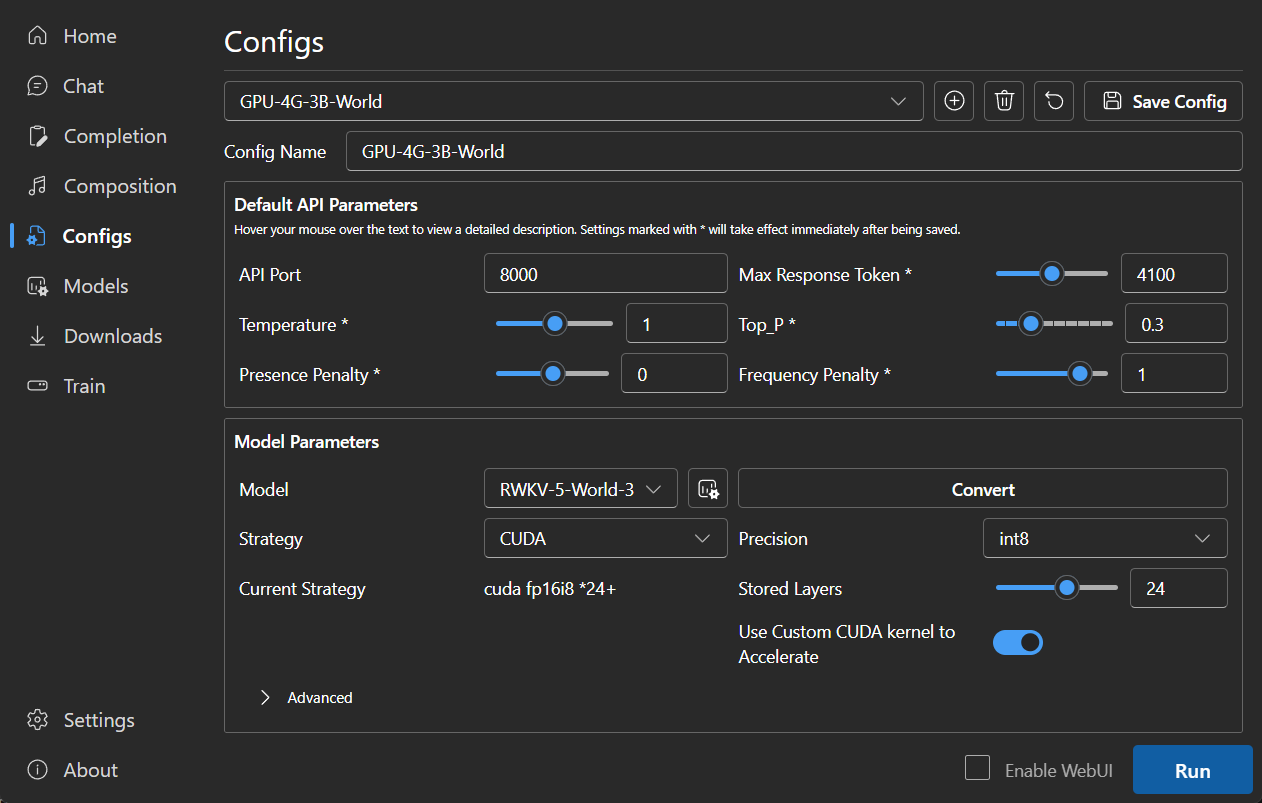

- Configs with 2G to 32G VRAM are included, works well on almost all computers

|

||||

- User-friendly chat and completion interaction interface included

|

||||

- Easy-to-understand and operate parameter configuration

|

||||

- Built-in model conversion tool

|

||||

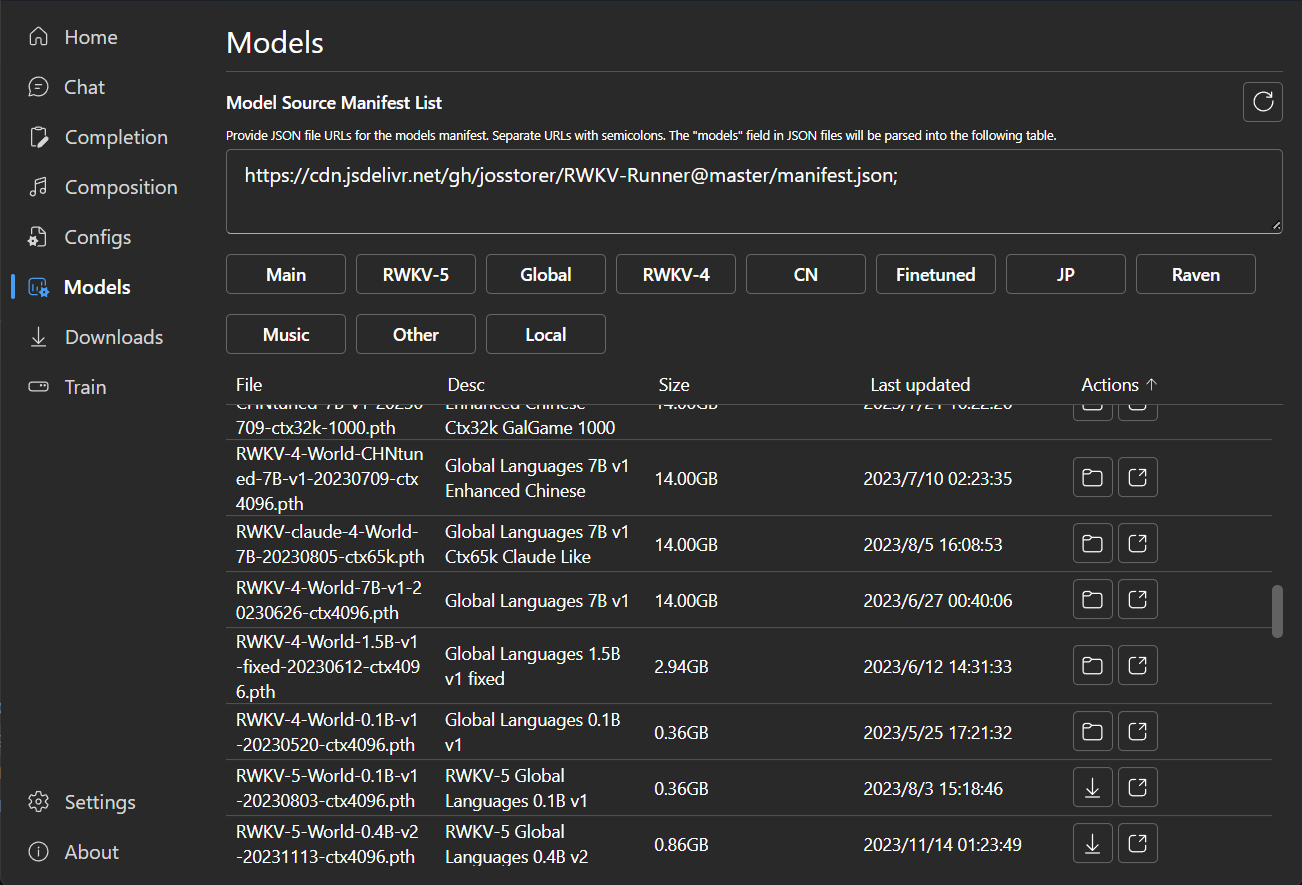

- Built-in download management and remote model inspection

|

||||

- Built-in one-click LoRA Finetune

|

||||

- Can also be used as an OpenAI ChatGPT and GPT-Playground client

|

||||

- Multilingual localization

|

||||

- Theme switching

|

||||

- Automatic updates

|

||||

- Automatic dependency installation, requiring only a lightweight executable program.

|

||||

- Pre-set multi-level VRAM configs, works well on almost all computers. In Configs page, switch Strategy to WebGPU, it

|

||||

can also run on AMD, Intel, and other graphics cards.

|

||||

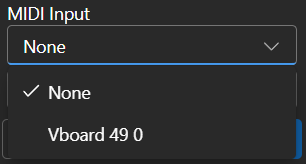

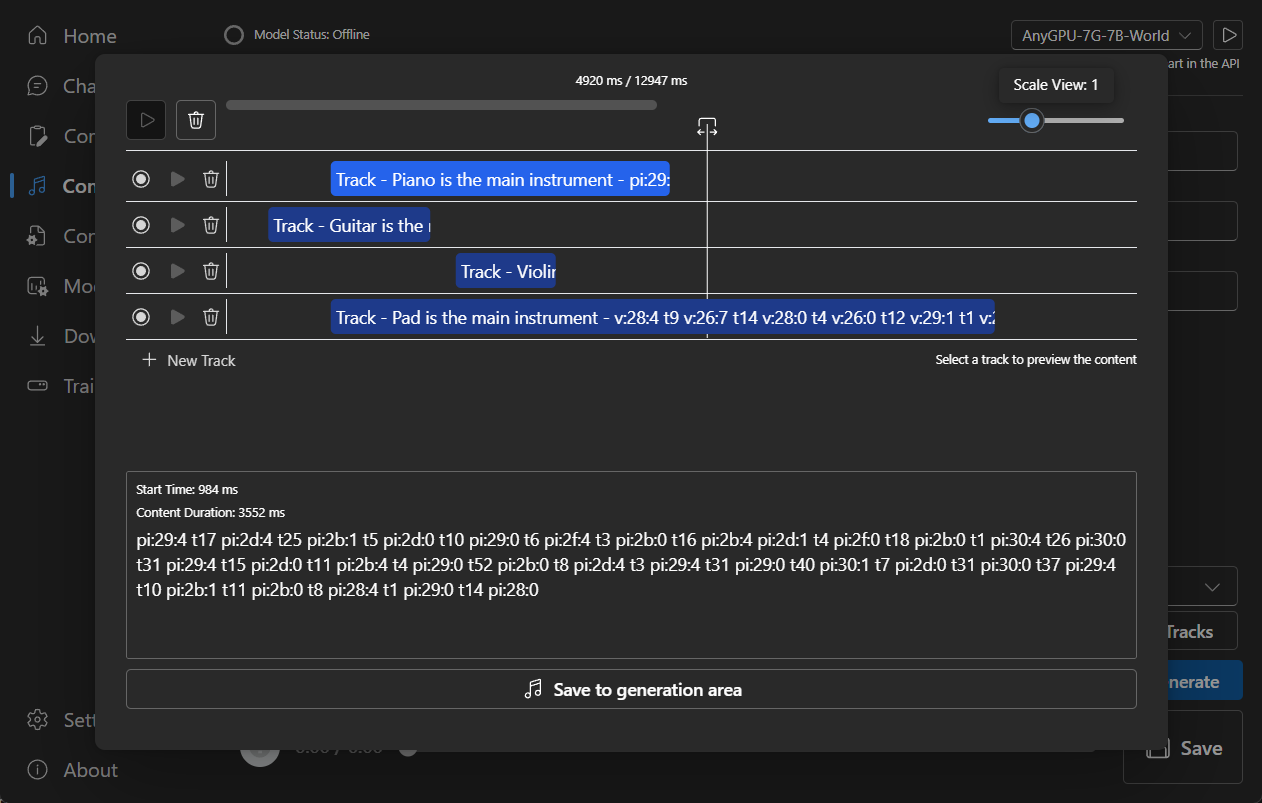

- User-friendly chat, completion, and composition interaction interface included. Also supports chat presets, attachment

|

||||

uploads, MIDI hardware input, and track editing.

|

||||

[Preview](#Preview) | [MIDI Hardware Input](#MIDI-Input)

|

||||

- Built-in WebUI option, one-click start of Web service, sharing your hardware resources.

|

||||

- Easy-to-understand and operate parameter configuration, along with various operation guidance prompts.

|

||||

- Built-in model conversion tool.

|

||||

- Built-in download management and remote model inspection.

|

||||

- Built-in one-click LoRA Finetune. (Windows Only)

|

||||

- Can also be used as an OpenAI ChatGPT and GPT-Playground client. (Fill in the API URL and API Key in Settings page)

|

||||

- Multilingual localization.

|

||||

- Theme switching.

|

||||

- Automatic updates.

|

||||

|

||||

## Simple Deploy Example

|

||||

|

||||

```bash

|

||||

git clone https://github.com/josStorer/RWKV-Runner

|

||||

|

||||

# Then

|

||||

cd RWKV-Runner

|

||||

python ./backend-python/main.py #The backend inference service has been started, request /switch-model API to load the model, refer to the API documentation: http://127.0.0.1:8000/docs

|

||||

|

||||

# Or

|

||||

cd RWKV-Runner/frontend

|

||||

npm ci

|

||||

npm run build #Compile the frontend

|

||||

cd ..

|

||||

python ./backend-python/webui_server.py #Start the frontend service separately

|

||||

# Or

|

||||

python ./backend-python/main.py --webui #Start the frontend and backend service at the same time

|

||||

|

||||

# Help Info

|

||||

python ./backend-python/main.py -h

|

||||

```

|

||||

|

||||

## API Concurrency Stress Testing

|

||||

|

||||
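The deploy example says to call the `/switch-model` API once `main.py` is running. Below is a minimal client sketch that uses only the standard library. It assumes the endpoint accepts a POST with a JSON body. The `switch_model` handler in this comparison reads `body.model` and `body.strategy`. Any other fields of `SwitchModelBody` are assumed to have defaults. The helper names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # default address used in the README


def build_switch_model_request(model: str, strategy: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /switch-model request with a JSON body."""
    payload = {"model": model, "strategy": strategy}
    return urllib.request.Request(
        f"{base_url}/switch-model",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def switch_model(model: str, strategy: str) -> str:
    """Send the request; this needs a running backend-python/main.py."""
    with urllib.request.urlopen(build_switch_model_request(model, strategy)) as resp:
        return resp.read().decode("utf-8")
```

A call might look like `switch_model("models/your-rwkv-model.pth", "cuda fp16")`; the model path is hypothetical. The handler returns `"success"` immediately when `model` is an empty string.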

@@ -133,6 +162,48 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")

|

||||

```

|

||||

|

||||

## MIDI Input

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

### USB MIDI Connection

|

||||

|

||||

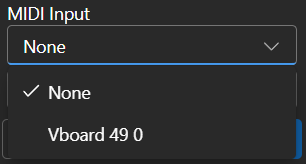

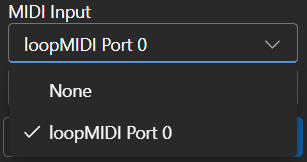

- USB MIDI devices are plug-and-play, and you can select your input device in the Composition page

|

||||

-

|

||||

|

||||

### Mac MIDI Bluetooth Connection

|

||||

|

||||

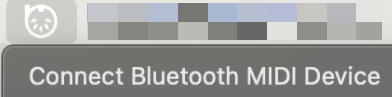

- For Mac users who want to use Bluetooth input,

|

||||

please install [Bluetooth MIDI Connect](https://apps.apple.com/us/app/bluetooth-midi-connect/id1108321791), then click

|

||||

the tray icon to connect after launching,

|

||||

afterwards, you can select your input device in the Composition page.

|

||||

-

|

||||

|

||||

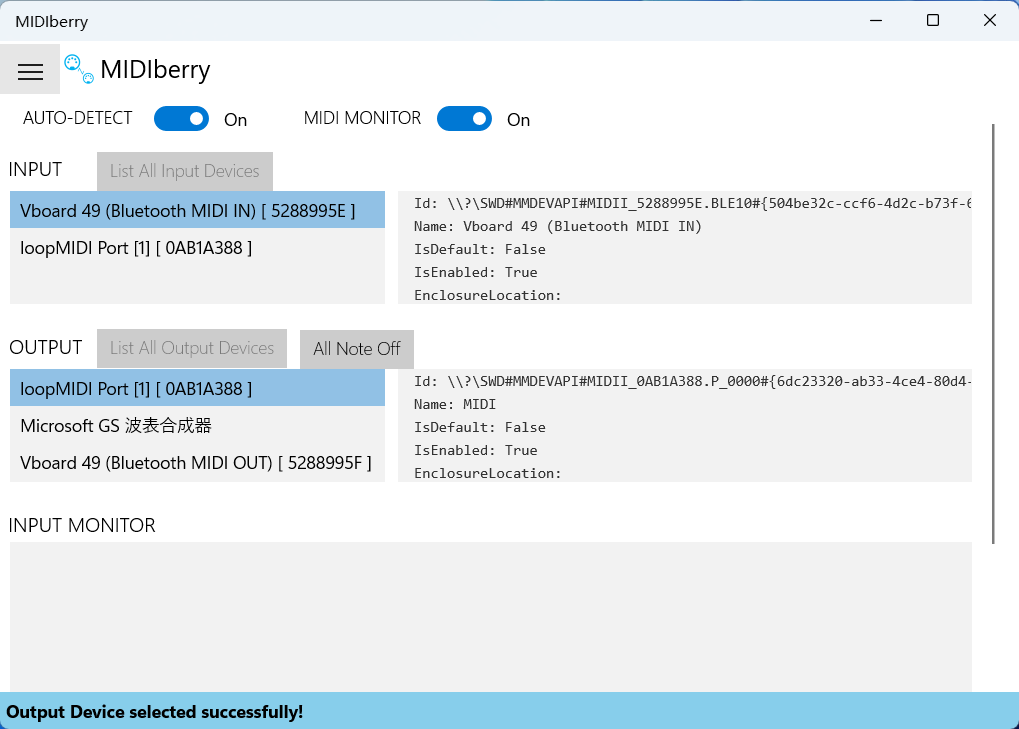

### Windows MIDI Bluetooth Connection

|

||||

|

||||

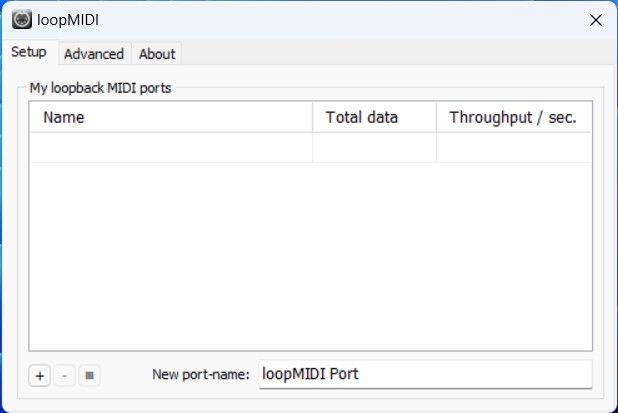

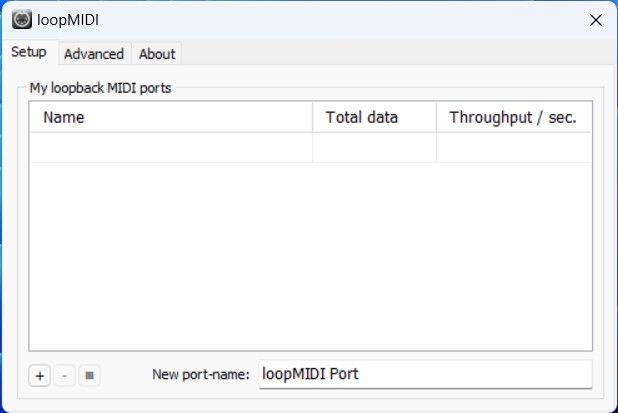

- Windows seems to have implemented Bluetooth MIDI support only for UWP (Universal Windows Platform) apps. Therefore, it

|

||||

requires multiple steps to establish a connection. We need to create a local virtual MIDI device and then launch a UWP

|

||||

application. Through this UWP application, we will redirect Bluetooth MIDI input to the virtual MIDI device, and then

|

||||

this software will listen to the input from the virtual MIDI device.

|

||||

- So, first, you need to

|

||||

download [loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip)

|

||||

to create a virtual MIDI device. Click the plus sign in the bottom left corner to create the device.

|

||||

-

|

||||

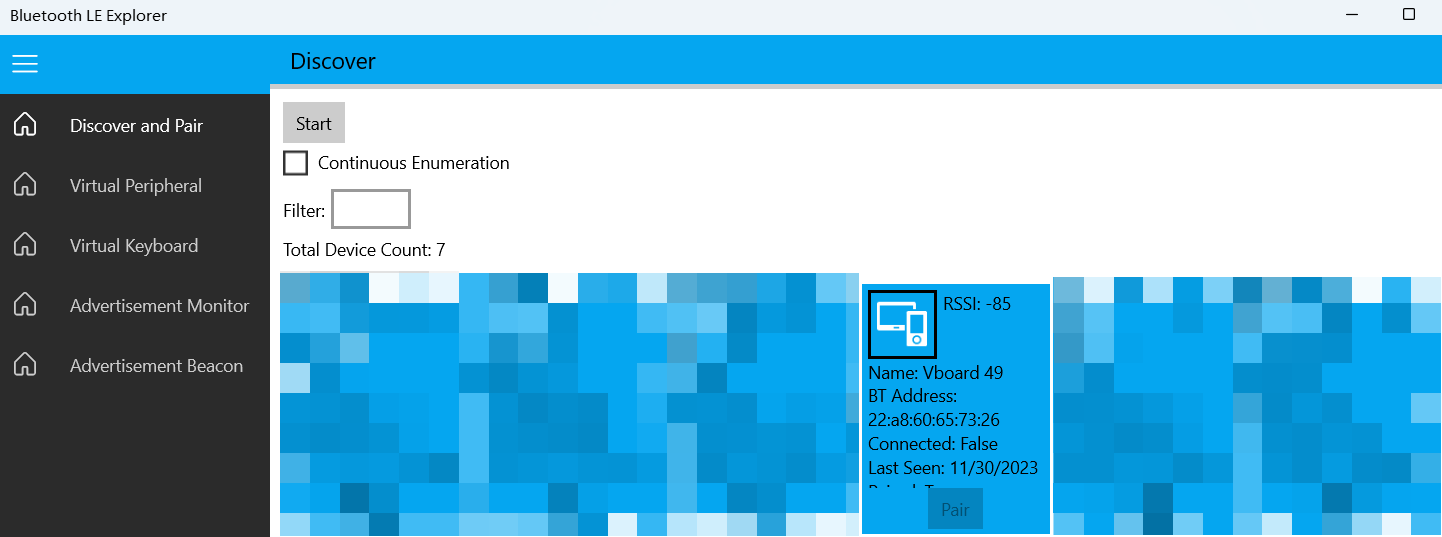

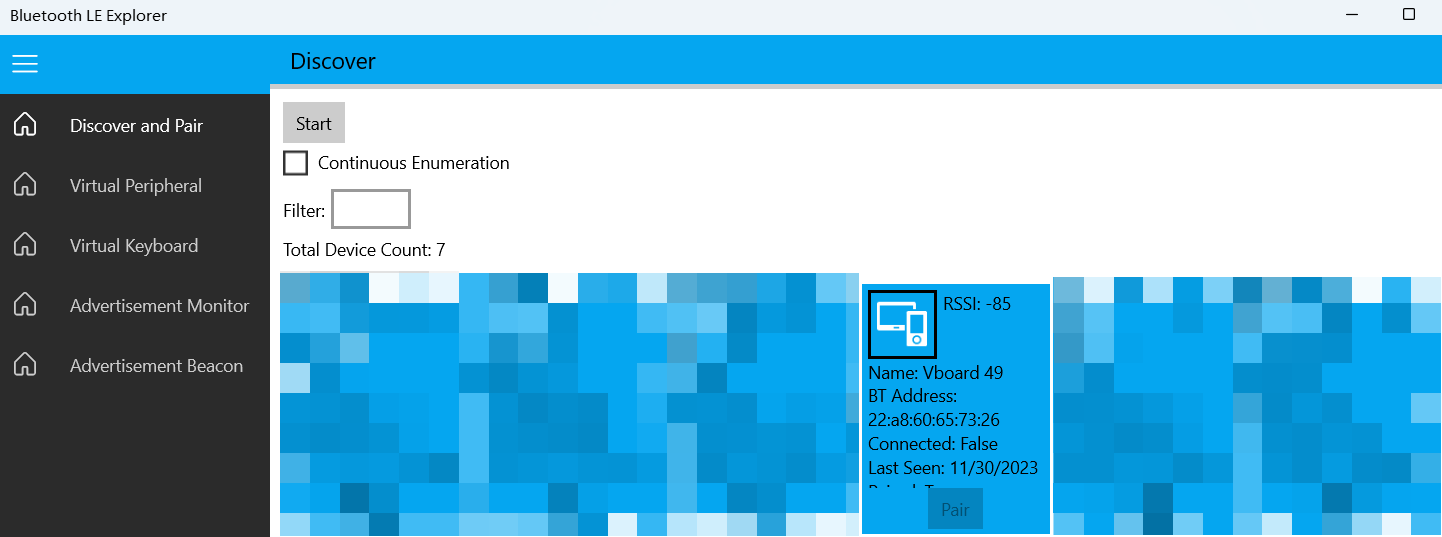

- Next, you need to download [Bluetooth LE Explorer](https://apps.microsoft.com/detail/9N0ZTKF1QD98) to discover and

|

||||

connect to Bluetooth MIDI devices. Click "Start" to search for devices, and then click "Pair" to bind the MIDI device.

|

||||

-

|

||||

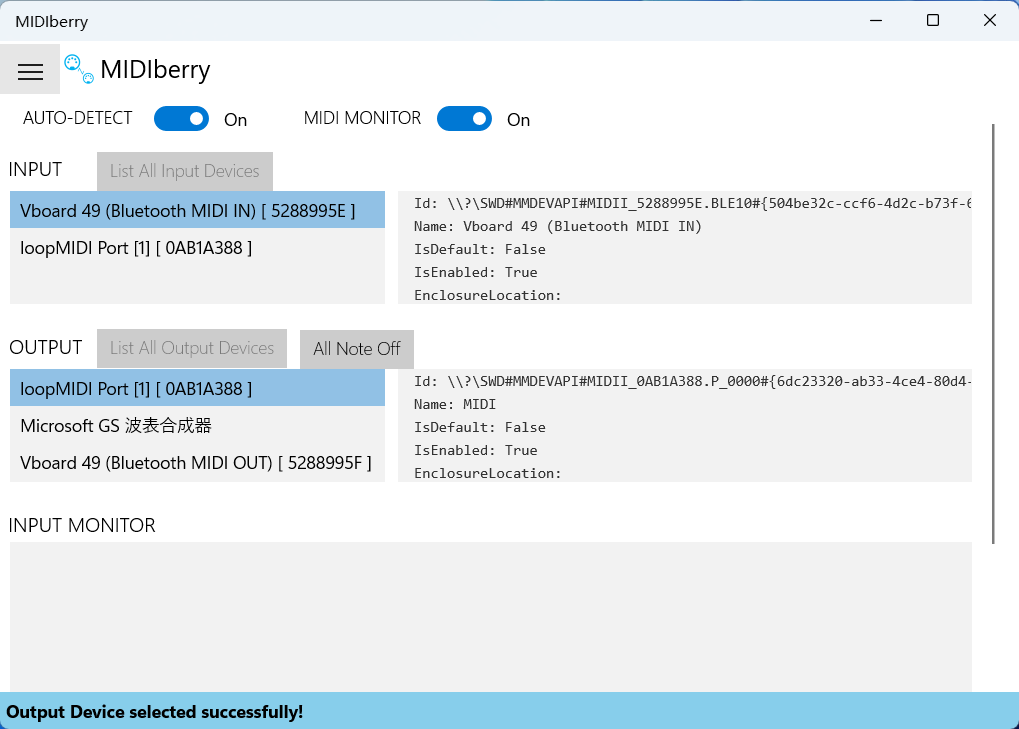

- Finally, you need to install [MIDIberry](https://apps.microsoft.com/detail/9N39720H2M05),

|

||||

This UWP application can redirect Bluetooth MIDI input to the virtual MIDI device. After launching it, double-click

|

||||

your actual Bluetooth MIDI device name in the input field, and in the output field, double-click the virtual MIDI

|

||||

device name we created earlier.

|

||||

-

|

||||

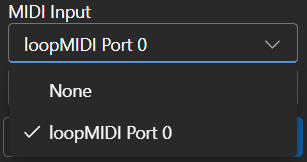

- Now, you can select the virtual MIDI device as the input in the Composition page. Bluetooth LE Explorer no longer

|

||||

needs to run, and you can also close the loopMIDI window, it will run automatically in the background. Just keep

|

||||

MIDIberry open.

|

||||

-

|

||||

|

||||

## Related Repositories:

|

||||

|

||||

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main

|

||||

@@ -146,27 +217,35 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

|

||||

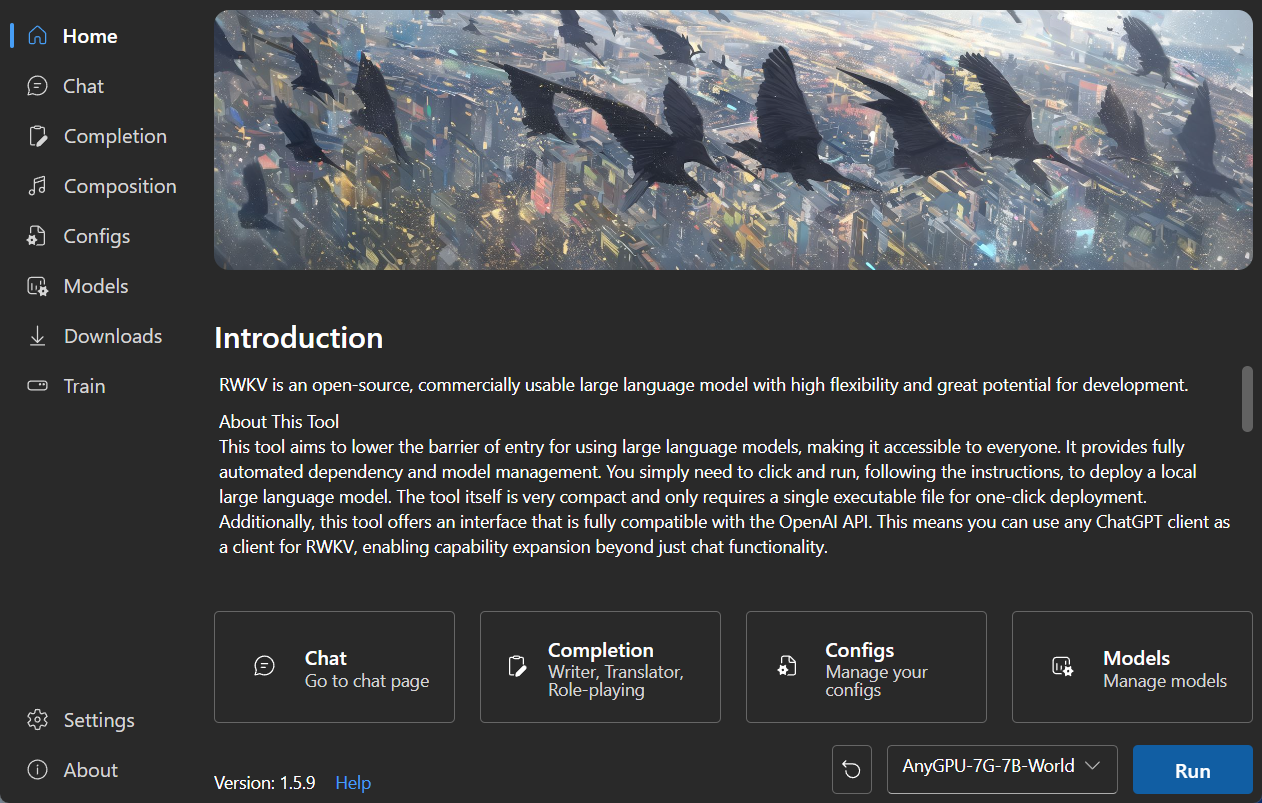

### Homepage

|

||||

|

||||

|

||||

|

||||

|

||||

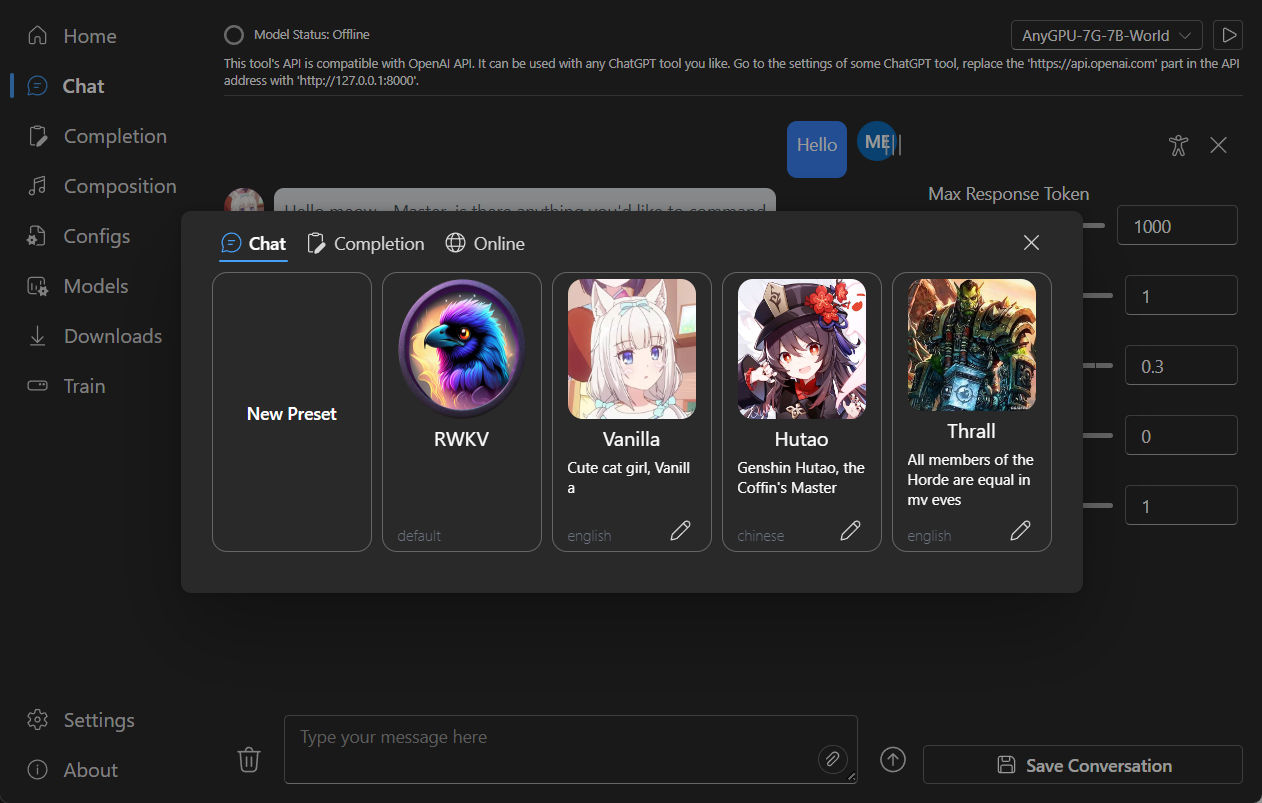

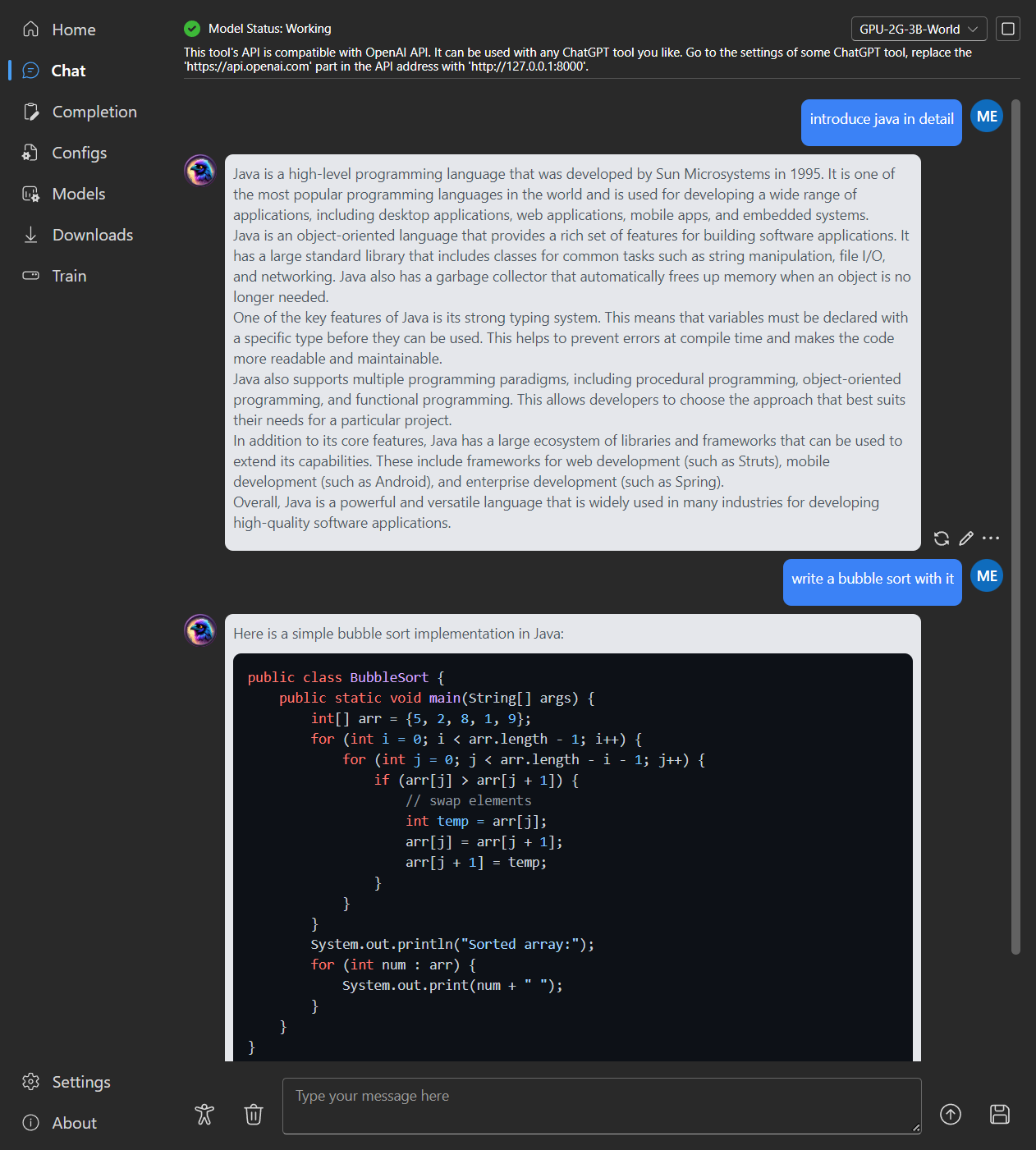

### Chat

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

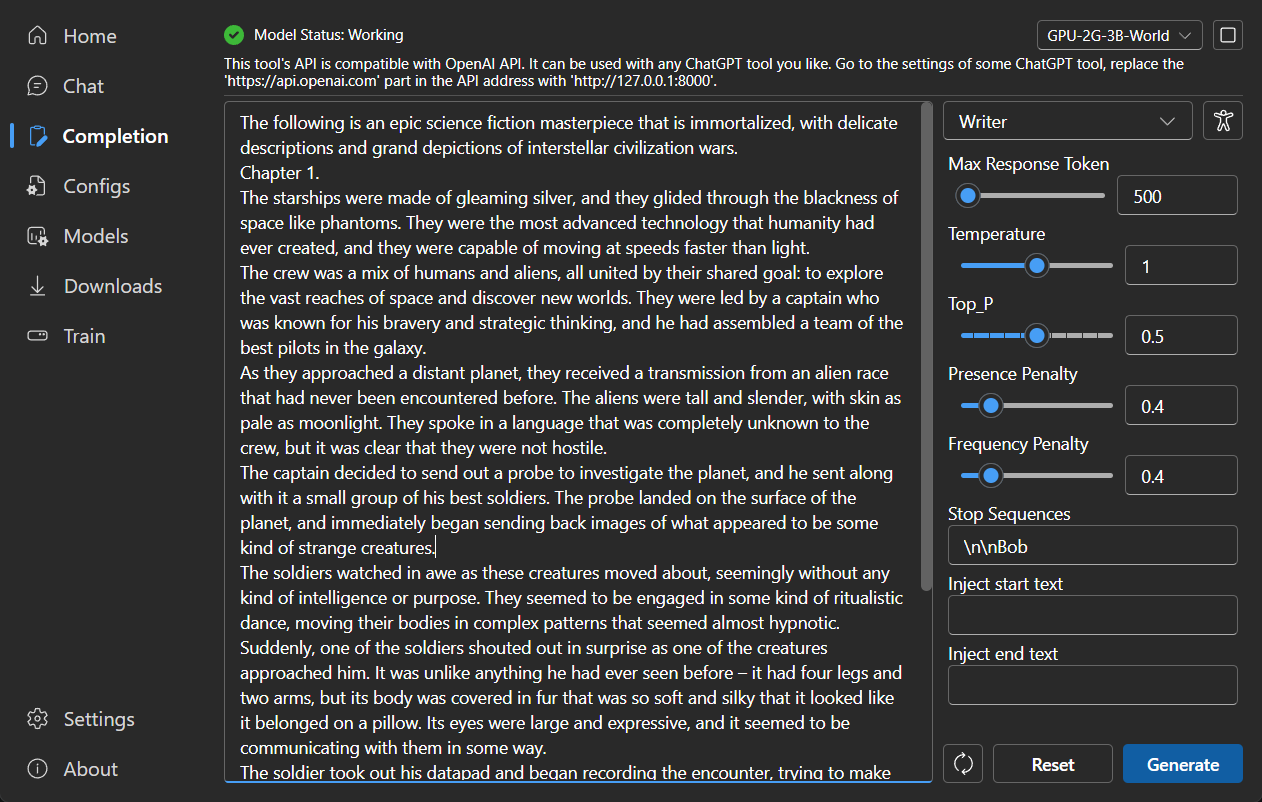

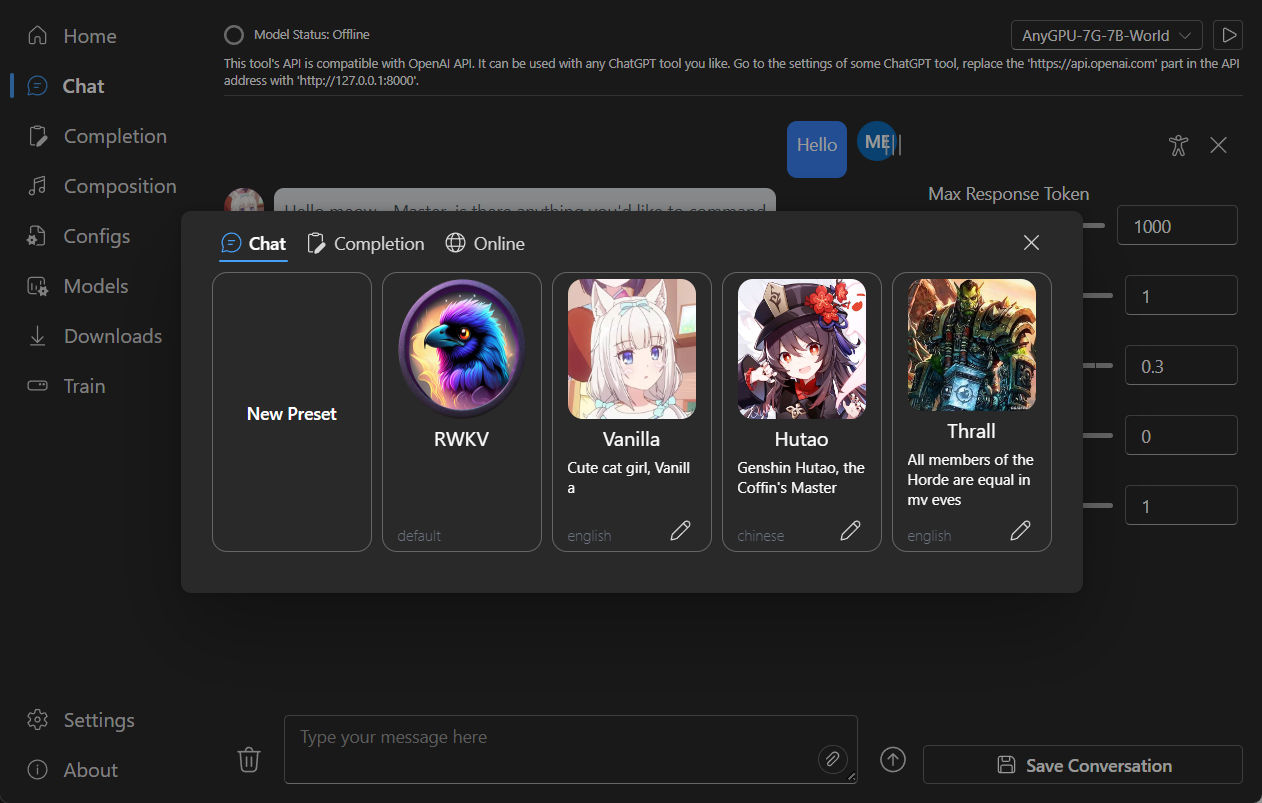

### Completion

|

||||

|

||||

|

||||

|

||||

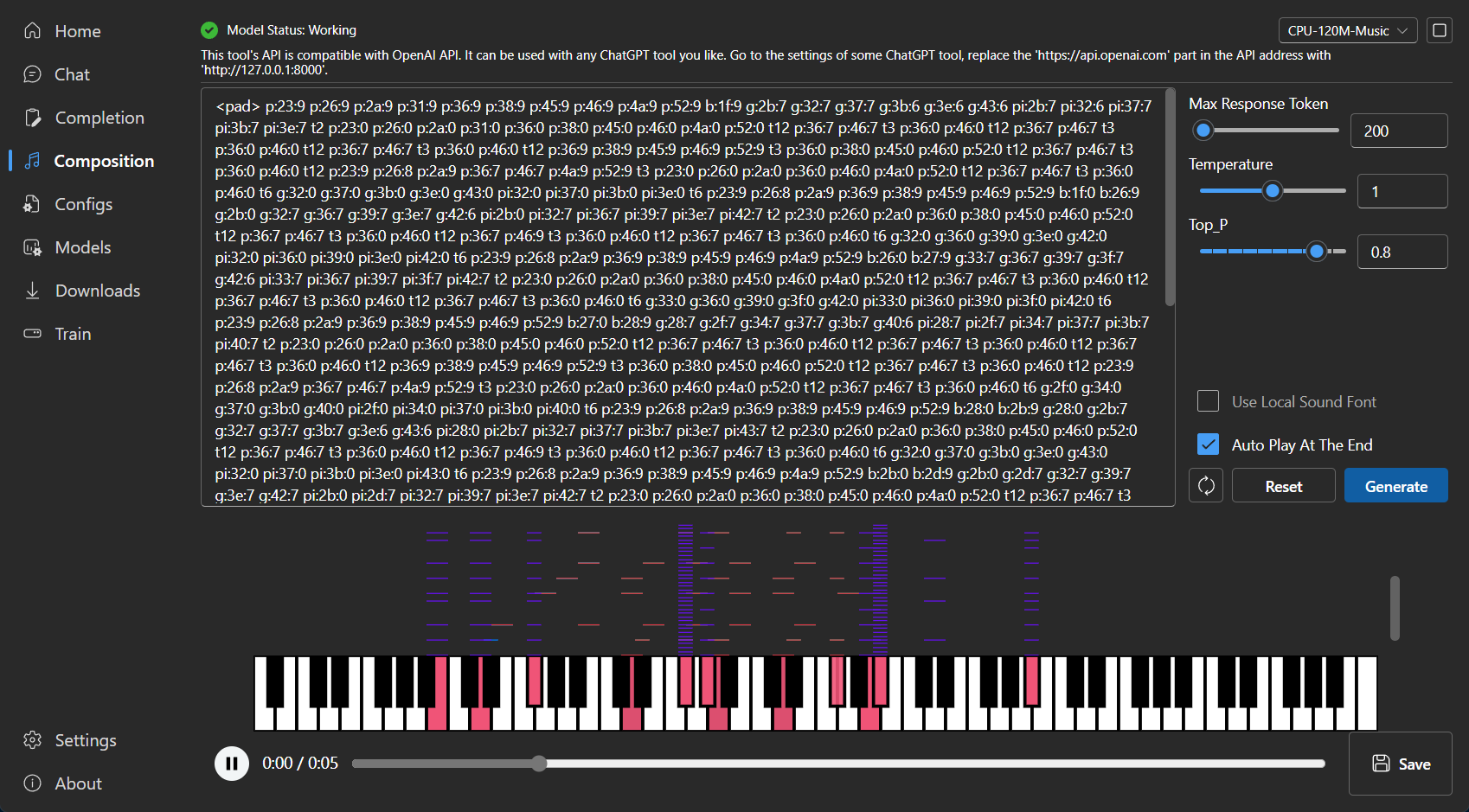

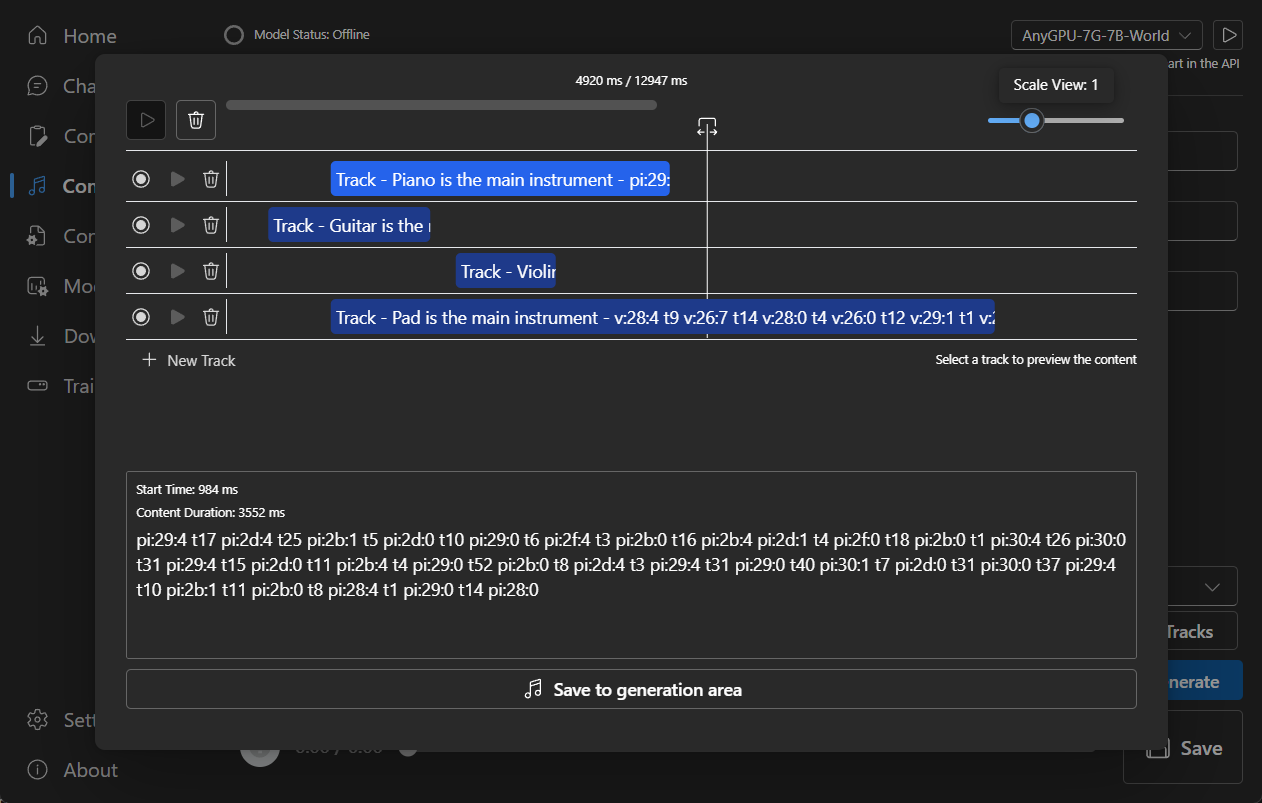

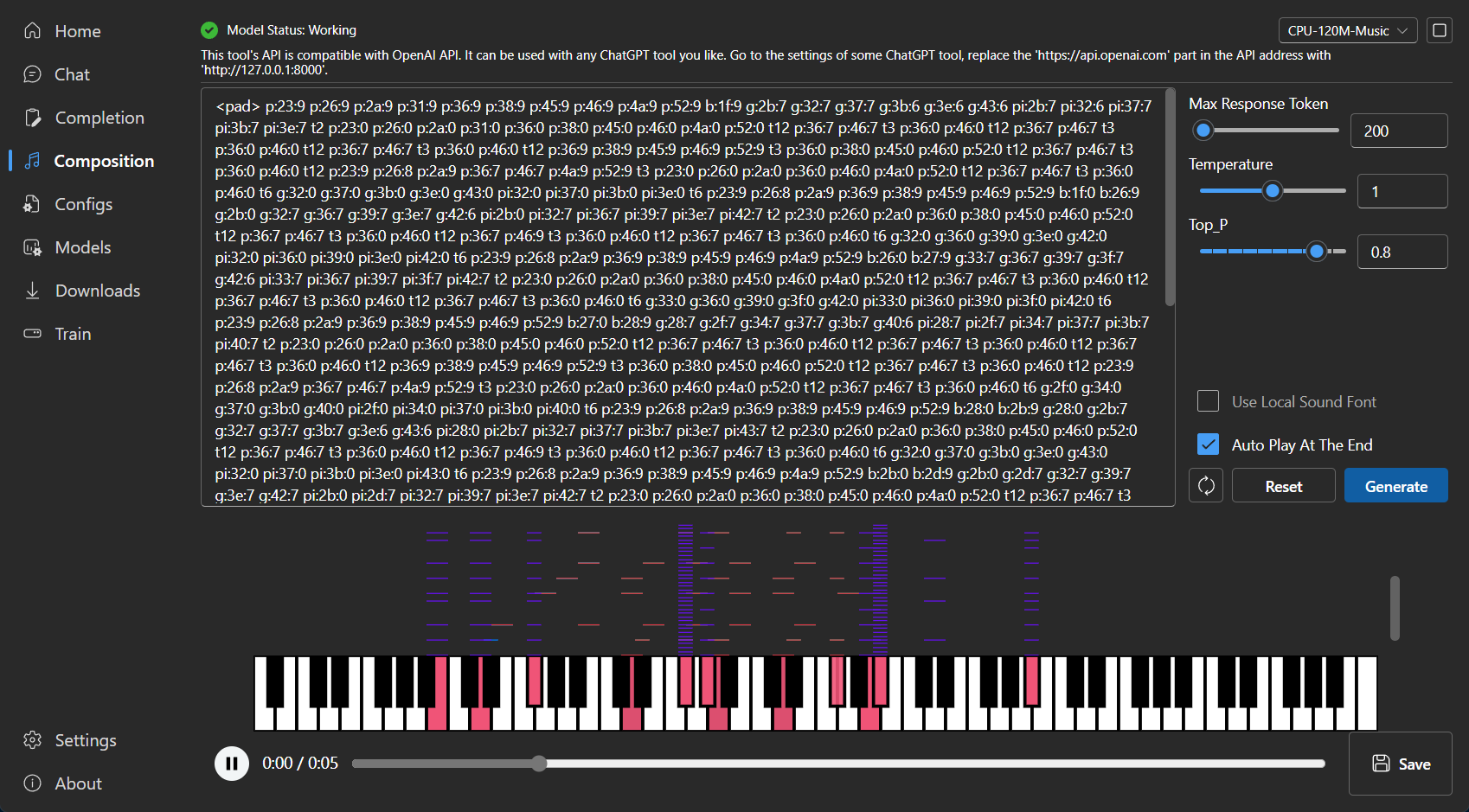

### Composition

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

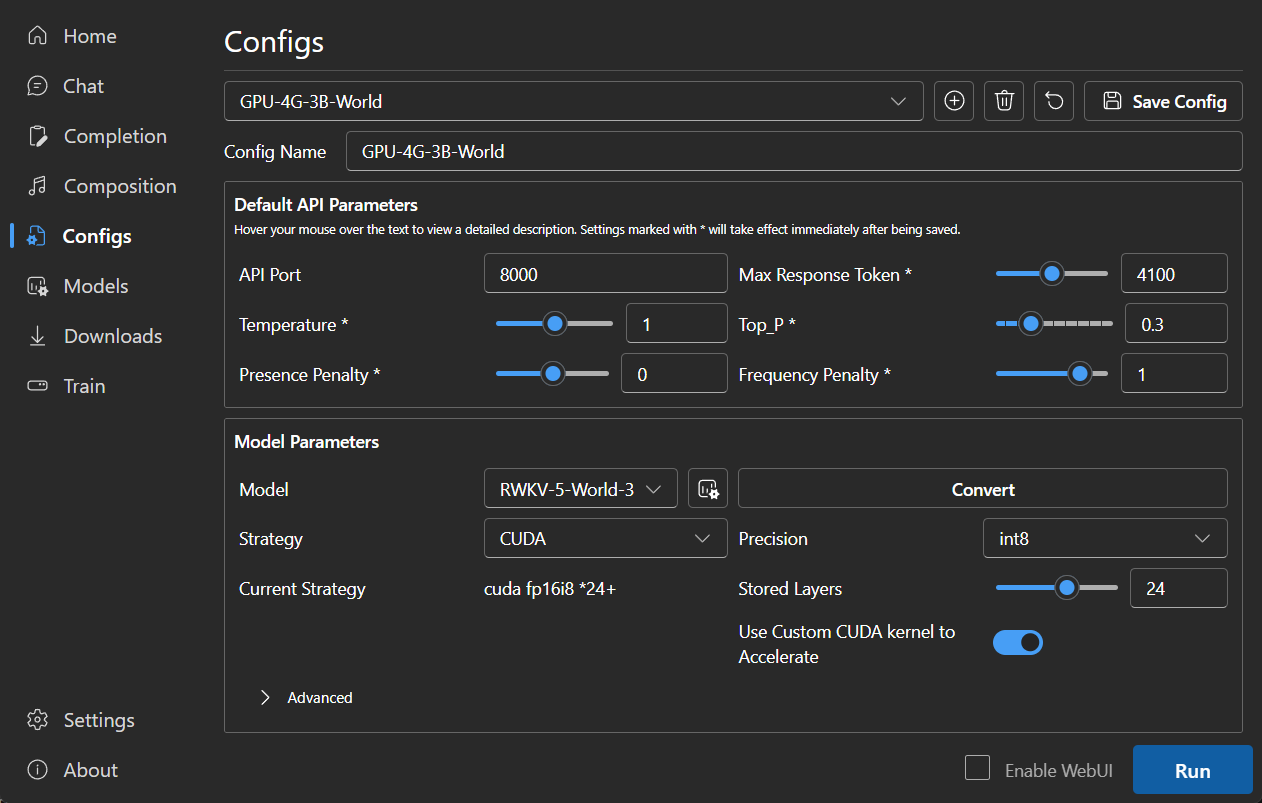

### Configuration

|

||||

|

||||

|

||||

|

||||

|

||||

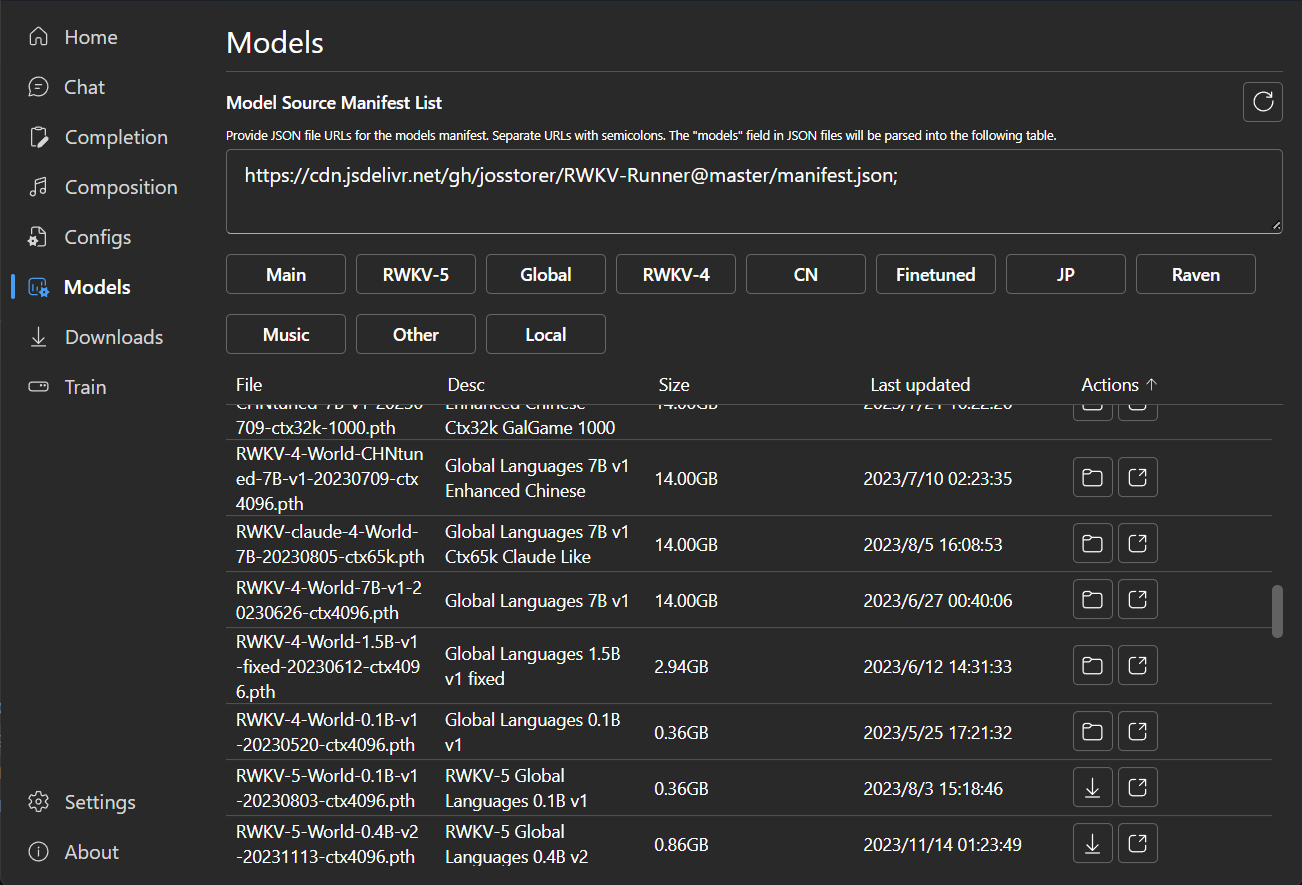

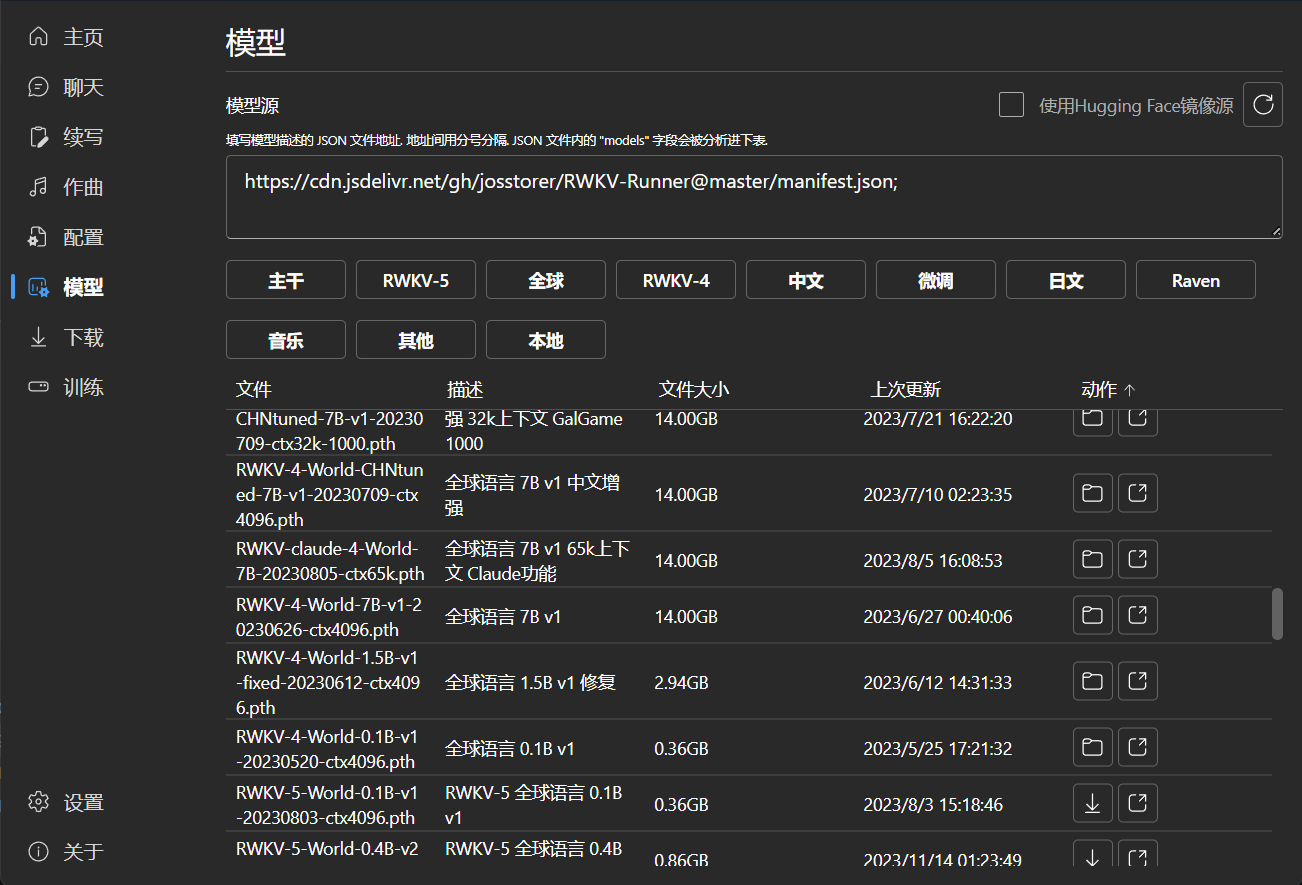

### Model Management

|

||||

|

||||

|

||||

|

||||

|

||||

### Download Management

|

||||

|

||||

|

||||

`README_JA.md` (99 changed lines)

```markdown
@@ -21,7 +21,7 @@
[![MacOS][MacOS-image]][MacOS-url]
[![Linux][Linux-image]][Linux-url]

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)
[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples) | [MIDI Hardware Input](#MIDI-Input)

[license-image]: http://img.shields.io/badge/license-MIT-blue.svg
```

````markdown
@@ -58,20 +58,47 @@
## Features

- RWKV model management and one-click startup
- Fully compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,
- Front-end and back-end separation: even without the client, the front-end service, the back-end inference service, or the back-end inference service with a WebUI can be deployed separately.
  [Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)
- Compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,
  open http://127.0.0.1:8000/docs to view more details.
- Automatic dependency installation, requiring only a lightweight executable program
- Configs for 2G to 32G VRAM are included and work on almost all computers
- User-friendly chat and completion interaction interface included
- Easy-to-understand and easy-to-operate parameter configuration
- Pre-set multi-level VRAM configs that work on almost all computers. Switching the Strategy to WebGPU on the Configs page also lets it run on AMD, Intel, and other graphics cards
- User-friendly chat, completion, and composition interfaces included. Chat presets, attachment uploads, MIDI hardware input, and track editing are also supported.
  [Preview](#Preview) | [MIDI Hardware Input](#MIDI-Input)
- Built-in WebUI option, one-click start of the Web service, sharing hardware resources
- Easy-to-understand and easy-to-operate parameter configuration, with various operation guidance prompts
- Built-in model conversion tool
- Built-in download management and remote model inspection
- Built-in LoRA fine-tuning
- This program can also be used as a client for OpenAI ChatGPT and GPT Playground
- Built-in LoRA fine-tuning (Windows only)
- This program can also be used as a client for OpenAI ChatGPT and GPT Playground (enter the `API URL` and `API Key`
  on the Settings page)
- Multilingual localization
- Theme switching
- Automatic updates

## Simple Deploy Example

```bash
git clone https://github.com/josStorer/RWKV-Runner

# Then
cd RWKV-Runner
python ./backend-python/main.py #The backend inference service has been started, request /switch-model API to load the model, refer to the API documentation: http://127.0.0.1:8000/docs

# Or
cd RWKV-Runner/frontend
npm ci
npm run build #Compile the frontend
cd ..
python ./backend-python/webui_server.py #Start the frontend service separately
# Or
python ./backend-python/main.py --webui #Start the frontend and backend service at the same time

# Help Info
python ./backend-python/main.py -h
```

## API Concurrency Stress Testing

```bash
````

````markdown
@@ -134,6 +161,48 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:
    print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")
```

## MIDI Input

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory
to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place
it in the source code directory.

### USB MIDI Connection

- USB MIDI devices are plug-and-play, and you can select your input device in the Composition page
-

### Mac MIDI Bluetooth Connection

- For Mac users who want to use Bluetooth input,
  please install [Bluetooth MIDI Connect](https://apps.apple.com/us/app/bluetooth-midi-connect/id1108321791), then click
  the tray icon to connect after launching,
  afterwards, you can select your input device in the Composition page.
-

### Windows MIDI Bluetooth Connection

- Windows seems to have implemented Bluetooth MIDI support only for UWP (Universal Windows Platform) apps. Therefore, it
  requires multiple steps to establish a connection. We need to create a local virtual MIDI device and then launch a UWP
  application. Through this UWP application, we will redirect Bluetooth MIDI input to the virtual MIDI device, and then
  this software will listen to the input from the virtual MIDI device.
- So, first, you need to
  download [loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip)
  to create a virtual MIDI device. Click the plus sign in the bottom left corner to create the device.
-
- Next, you need to download [Bluetooth LE Explorer](https://apps.microsoft.com/detail/9N0ZTKF1QD98) to discover and
  connect to Bluetooth MIDI devices. Click "Start" to search for devices, and then click "Pair" to bind the MIDI device.
-
- Finally, you need to install [MIDIberry](https://apps.microsoft.com/detail/9N39720H2M05),
  This UWP application can redirect Bluetooth MIDI input to the virtual MIDI device. After launching it, double-click
  your actual Bluetooth MIDI device name in the input field, and in the output field, double-click the virtual MIDI
  device name we created earlier.
-
- Now, you can select the virtual MIDI device as the input in the Composition page. Bluetooth LE Explorer no longer
  needs to run, and you can also close the loopMIDI window, it will run automatically in the background. Just keep
  MIDIberry open.
-

## Related Repositories:

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main
````

```markdown
@@ -143,31 +212,39 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:
- RWKV-LM-LoRA: https://github.com/Blealtan/RWKV-LM-LoRA
- MIDI-LLM-tokenizer: https://github.com/briansemrau/MIDI-LLM-tokenizer

## プレビュー (Preview)
## Preview

### Homepage

### Chat

### Completion

### Composition

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory
to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place
it in the source code directory.

### Configuration

### Model Management

### Download Management
```

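```markdown
@@ -61,14 +61,14 @@ API-compatible interface, which means every ChatGPT client is an RWKV client.
  [Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)
- Compatible with the OpenAI API: every ChatGPT client is an RWKV client. After starting the model, open http://127.0.0.1:8000/docs to view the API documentation
- Fully automatic dependency installation; you only need a lightweight executable program
- Pre-set multi-level VRAM configs that work well on almost any computer. Switching to the WebGPU strategy on the Configs page also lets it run on AMD, Intel, and other graphics cards
- Pre-set multi-level VRAM configs that work well on almost any computer. Switching Strategy to WebGPU on the Configs page also lets it run on AMD, Intel, and other graphics cards
- Built-in user-friendly chat, completion, and composition pages. Supports chat presets, attachment uploads, MIDI hardware input, and track editing.
  [Preview](#Preview) | [MIDI Hardware Input](#MIDI-Input)
- Built-in WebUI option: start the Web service with one click and share hardware resources
- Easy-to-understand and easy-to-operate parameter configuration, with various operation guidance prompts
- Built-in model conversion tool
- Built-in download management and remote model inspection
- Built-in one-click LoRA fine-tuning
- Built-in one-click LoRA fine-tuning (Windows only)
- Can also be used as an OpenAI ChatGPT and GPT Playground client (fill in the API URL and API Key in Settings)
- Multilingual localization
- Theme switching
```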

```markdown
@@ -230,7 +230,7 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

### Model Management

### Download Management
```

```go
@@ -14,6 +14,13 @@ import (
	wruntime "github.com/wailsapp/wails/v2/pkg/runtime"
)

func (a *App) SaveFile(path string, savedContent []byte) error {
	if err := os.WriteFile(a.exDir+path, savedContent, 0644); err != nil {
		return err
	}
	return nil
}

func (a *App) SaveJson(fileName string, jsonData any) error {
	text, err := json.MarshalIndent(jsonData, "", " ")
	if err != nil {
```

```go
@@ -195,3 +202,8 @@ func (a *App) OpenFileFolder(path string, relative bool) error {
	}
	return errors.New("unsupported OS")
}

func (a *App) StartFile(path string) error {
	_, err := CmdHelper(path)
	return err
}
```

```go
@@ -10,7 +10,7 @@ import (
	"strings"
)

func (a *App) StartServer(python string, port int, host string, webui bool, rwkvBeta bool) (string, error) {
func (a *App) StartServer(python string, port int, host string, webui bool, rwkvBeta bool, rwkvcpp bool) (string, error) {
	var err error
	if python == "" {
		python, err = GetPython()
```

```go
@@ -25,6 +25,9 @@ func (a *App) StartServer(python string, port int, host string, webui bool, rwkv
	if rwkvBeta {
		args = append(args, "--rwkv-beta")
	}
	if rwkvcpp {
		args = append(args, "--rwkv.cpp")
	}
	args = append(args, "--port", strconv.Itoa(port), "--host", host)
	return Cmd(args...)
}
```

```go
@@ -52,6 +55,21 @@ func (a *App) ConvertSafetensors(modelPath string, outPath string) (string, erro
	return Cmd(args...)
}

func (a *App) ConvertGGML(python string, modelPath string, outPath string, Q51 bool) (string, error) {
	var err error
	if python == "" {
		python, err = GetPython()
	}
	if err != nil {
		return "", err
	}
	dataType := "FP16"
	if Q51 {
		dataType = "Q5_1"
	}
	return Cmd(python, "./backend-python/convert_pytorch_to_ggml.py", modelPath, outPath, dataType)
}

func (a *App) ConvertData(python string, input string, outputPrefix string, vocab string) (string, error) {
	var err error
	if python == "" {
```
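`ConvertGGML` turns its `Q51` flag into the `data_type` argument of `convert_pytorch_to_ggml.py`. Only `FP16` and `Q5_1` are reachable from the Go side, although the script also accepts `Q4_0`, `Q4_1`, `Q5_0`, and `Q8_0`. `StartServer` now forwards `rwkvcpp` as `--rwkv.cpp`.

Below is a Python sketch of the argument lists the two functions hand to `Cmd`. The function names are hypothetical. The leading arguments of `StartServer`, including how `webui` is passed, sit outside the visible hunks, so only the flag tail is modeled.

```python
from typing import List


def convert_ggml_argv(python: str, model_path: str, out_path: str, q51: bool) -> List[str]:
    """Argument list that ConvertGGML passes to Cmd()."""
    data_type = "Q5_1" if q51 else "FP16"  # the only two types the Go code selects
    return [python, "./backend-python/convert_pytorch_to_ggml.py", model_path, out_path, data_type]


def start_server_flags(port: int, host: str, rwkv_beta: bool, rwkvcpp: bool) -> List[str]:
    """Flag tail that StartServer appends; the leading args are not shown in the hunk."""
    flags: List[str] = []
    if rwkv_beta:
        flags.append("--rwkv-beta")
    if rwkvcpp:
        flags.append("--rwkv.cpp")
    flags += ["--port", str(port), "--host", host]
    return flags
```

For instance, `start_server_flags(8000, "127.0.0.1", rwkv_beta=False, rwkvcpp=True)` yields `["--rwkv.cpp", "--port", "8000", "--host", "127.0.0.1"]`. The `--rwkv.cpp` spelling matters on the Python side, because argparse keeps the dot in the attribute name.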

```go
@@ -15,33 +15,51 @@ import (
	"runtime"
	"strconv"
	"strings"
	"syscall"
)

func CmdHelper(args ...string) (*exec.Cmd, error) {
	if runtime.GOOS != "windows" {
		return nil, errors.New("unsupported OS")
	}
	filename := "./cmd-helper.bat"
	_, err := os.Stat(filename)
	if err != nil {
		if err := os.WriteFile(filename, []byte("start %*"), 0644); err != nil {
			return nil, err
		}
	}
	cmdHelper, err := filepath.Abs(filename)
	if err != nil {
		return nil, err
	}

	if strings.Contains(cmdHelper, " ") {
		for _, arg := range args {
			if strings.Contains(arg, " ") {
				return nil, errors.New("path contains space") // golang bug https://github.com/golang/go/issues/17149#issuecomment-473976818
			}
		}
	}
	cmd := exec.Command(cmdHelper, args...)
	cmd.SysProcAttr = &syscall.SysProcAttr{}
	//go:custom_build windows cmd.SysProcAttr.HideWindow = true
	err = cmd.Start()
	if err != nil {
		return nil, err
	}
	return cmd, nil
}

func Cmd(args ...string) (string, error) {
	switch platform := runtime.GOOS; platform {
	case "windows":
		if err := os.WriteFile("./cmd-helper.bat", []byte("start %*"), 0644); err != nil {
			return "", err
		}
		cmdHelper, err := filepath.Abs("./cmd-helper")
		cmd, err := CmdHelper(args...)
		if err != nil {
			return "", err
		}

		if strings.Contains(cmdHelper, " ") {
			for _, arg := range args {
				if strings.Contains(arg, " ") {
					return "", errors.New("path contains space") // golang bug https://github.com/golang/go/issues/17149#issuecomment-473976818
				}
			}
		}

		cmd := exec.Command(cmdHelper, args...)
		out, err := cmd.CombinedOutput()
		if err != nil {
			return "", err
		}
		return string(out), nil
		cmd.Wait()
		return "", nil
	case "darwin":
		ex, err := os.Executable()
		if err != nil {
```
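On Windows, `CmdHelper` makes sure a one-line `cmd-helper.bat` containing `start %*` exists and launches commands through it. If the helper's absolute path contains a space, it refuses any argument that also contains one. The code comment attributes this to golang/go#17149.

Judging by the hunk's line counts, the `windows` branch of `Cmd` now delegates to `CmdHelper` and returns an empty string after `cmd.Wait()`. The earlier version captured `CombinedOutput()`. If that reading is right, callers no longer receive the command's output on Windows.

The guard reduces to the following check, sketched in Python with a hypothetical function name.

```python
from typing import List, Optional


def helper_launch_error(helper_abs_path: str, args: List[str]) -> Optional[str]:
    """Return the error CmdHelper would produce, or None if it would launch.

    Mirrors the Go guard: arguments with spaces are only rejected when the
    absolute path of cmd-helper.bat itself contains a space.
    """
    if " " in helper_abs_path:
        for arg in args:
            if " " in arg:
                return "path contains space"
    return None
```

Installing the program under a path without spaces, such as `C:\RWKV-Runner`, sidesteps the check entirely, whatever the arguments look like.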

`backend-python/convert_pytorch_to_ggml.py` (vendored, normal file, 169 changed lines)

```python
@@ -0,0 +1,169 @@
# Converts an RWKV model checkpoint in PyTorch format to an rwkv.cpp compatible file.
# Usage: python convert_pytorch_to_ggml.py C:\RWKV-4-Pile-169M-20220807-8023.pth C:\rwkv.cpp-169M-FP16.bin FP16
# Get model checkpoints from https://huggingface.co/BlinkDL
# See FILE_FORMAT.md for the documentation on the file format.

import argparse
import struct
import torch
from typing import Dict


def parse_args():
    parser = argparse.ArgumentParser(
        description="Convert an RWKV model checkpoint in PyTorch format to an rwkv.cpp compatible file"
    )
    parser.add_argument("src_path", help="Path to PyTorch checkpoint file")
    parser.add_argument(
        "dest_path", help="Path to rwkv.cpp checkpoint file, will be overwritten"
    )
    parser.add_argument(
        "data_type",
        help="Data type, FP16, Q4_0, Q4_1, Q5_0, Q5_1, Q8_0",
        type=str,
        choices=[
            "FP16",
            "Q4_0",
            "Q4_1",
            "Q5_0",
            "Q5_1",
            "Q8_0",
        ],
        default="FP16",
    )
    return parser.parse_args()


def get_layer_count(state_dict: Dict[str, torch.Tensor]) -> int:
    n_layer: int = 0

    while f"blocks.{n_layer}.ln1.weight" in state_dict:
        n_layer += 1

    assert n_layer > 0

    return n_layer


def write_state_dict(
    state_dict: Dict[str, torch.Tensor], dest_path: str, data_type: str
) -> None:
    emb_weight: torch.Tensor = state_dict["emb.weight"]

    n_layer: int = get_layer_count(state_dict)
    n_vocab: int = emb_weight.shape[0]
    n_embed: int = emb_weight.shape[1]

    is_v5_1_or_2: bool = "blocks.0.att.ln_x.weight" in state_dict
    is_v5_2: bool = "blocks.0.att.gate.weight" in state_dict

    if is_v5_2:
        print("Detected RWKV v5.2")
    elif is_v5_1_or_2:
        print("Detected RWKV v5.1")
    else:
        print("Detected RWKV v4")

    with open(dest_path, "wb") as out_file:
        is_FP16: bool = data_type == "FP16" or data_type == "float16"

        out_file.write(
            struct.pack(
                # Disable padding with '='
                "=iiiiii",
                # Magic: 'ggmf' in hex
                0x67676D66,
                101,
                n_vocab,
                n_embed,
                n_layer,
                1 if is_FP16 else 0,
            )
        )

        for k in state_dict.keys():
            tensor: torch.Tensor = state_dict[k].float()

            if ".time_" in k:
                tensor = tensor.squeeze()

            if is_v5_1_or_2:
                if ".time_decay" in k:
                    if is_v5_2:
                        tensor = torch.exp(-torch.exp(tensor)).unsqueeze(-1)
                    else:
                        tensor = torch.exp(-torch.exp(tensor)).reshape(-1, 1, 1)

                if ".time_first" in k:
                    tensor = torch.exp(tensor).reshape(-1, 1, 1)

                if ".time_faaaa" in k:
                    tensor = tensor.unsqueeze(-1)
            else:
                if ".time_decay" in k:
                    tensor = -torch.exp(tensor)

            # Keep 1-dim vectors and small matrices in FP32
            if is_FP16 and len(tensor.shape) > 1 and ".time_" not in k:
                tensor = tensor.half()

            shape = tensor.shape

            print(f"Writing {k}, shape {shape}, type {tensor.dtype}")

            k_encoded: bytes = k.encode("utf-8")

            out_file.write(
                struct.pack(
                    "=iii",
                    len(shape),
                    len(k_encoded),
                    1 if tensor.dtype == torch.float16 else 0,
                )
            )

            # Dimension order is reversed here:
            # * PyTorch shape is (x rows, y columns)
            # * ggml shape is (y elements in a row, x elements in a column)
            # Both shapes represent the same tensor.
            for dim in reversed(tensor.shape):
                out_file.write(struct.pack("=i", dim))

            out_file.write(k_encoded)

            tensor.numpy().tofile(out_file)
```
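`write_state_dict` first emits a 24-byte file header packed as `=iiiiii`. Its fields are the `ggmf` magic `0x67676D66`, the value `101`, `n_vocab`, `n_embed`, `n_layer`, and an FP16 flag.

Each tensor then gets a 12-byte record header packed as `=iii`: the dimension count, the name length, and an FP16 flag. After it come the dimensions in reversed (ggml) order, the UTF-8 name, and the raw tensor data.

Below is a standard-library sketch that writes those headers and reads them back. The helper names and the example shapes are hypothetical, and the tensor payload itself is omitted. The `FILE_FORMAT.md` that the script points to remains the authoritative description.

```python
import io
import struct
from typing import BinaryIO, List, Tuple

GGMF_MAGIC = 0x67676D66  # 'ggmf', as packed by convert_pytorch_to_ggml.py
FORMAT_FIELD = 101       # second header field written by the script


def write_file_header(out: BinaryIO, n_vocab: int, n_embed: int, n_layer: int, is_fp16: bool) -> None:
    # "=" disables padding, exactly as in write_state_dict.
    out.write(struct.pack("=iiiiii", GGMF_MAGIC, FORMAT_FIELD, n_vocab, n_embed, n_layer, 1 if is_fp16 else 0))


def write_tensor_header(out: BinaryIO, name: str, torch_shape: Tuple[int, ...], is_fp16: bool) -> None:
    encoded = name.encode("utf-8")
    out.write(struct.pack("=iii", len(torch_shape), len(encoded), 1 if is_fp16 else 0))
    for dim in reversed(torch_shape):  # ggml order: innermost dimension first
        out.write(struct.pack("=i", dim))
    out.write(encoded)
    # The raw tensor bytes (tensor.numpy().tofile) would follow here.


def read_file_header(inp: BinaryIO) -> Tuple[int, int, int, int, int]:
    magic, field, n_vocab, n_embed, n_layer, fp16 = struct.unpack("=iiiiii", inp.read(24))
    if magic != GGMF_MAGIC:
        raise ValueError(f"not a ggmf file (magic {magic:#x})")
    return field, n_vocab, n_embed, n_layer, fp16


def read_tensor_header(inp: BinaryIO) -> Tuple[str, List[int], int]:
    n_dims, name_len, fp16 = struct.unpack("=iii", inp.read(12))
    dims = list(struct.unpack(f"={n_dims}i", inp.read(4 * n_dims)))
    name = inp.read(name_len).decode("utf-8")
    return name, dims, fp16


buf = io.BytesIO()
write_file_header(buf, n_vocab=65536, n_embed=768, n_layer=12, is_fp16=True)
write_tensor_header(buf, "emb.weight", (65536, 768), is_fp16=True)
buf.seek(0)
print(read_file_header(buf))    # (101, 65536, 768, 12, 1)
print(read_tensor_header(buf))  # ('emb.weight', [768, 65536], 1)
```

A real reader would skip over the product of the dimensions times the element size (2 bytes for FP16, 4 for FP32) before reading the next record. Keep in mind that the converter stores 1-D tensors and `.time_*` tensors as FP32 even when the file-level flag says FP16.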

```python
def main() -> None:
    args = parse_args()

    print(f"Reading {args.src_path}")

    state_dict: Dict[str, torch.Tensor] = torch.load(args.src_path, map_location="cpu")

    temp_output: str = args.dest_path
    if args.data_type.startswith("Q"):
        import re

        temp_output = re.sub(r"Q[4,5,8]_[0,1]", "fp16", temp_output)
    write_state_dict(state_dict, temp_output, "FP16")
    if args.data_type.startswith("Q"):
        import sys
        import os

        sys.path.append(os.path.dirname(os.path.realpath(__file__)))
        from rwkv_pip.cpp import rwkv_cpp_shared_library

        library = rwkv_cpp_shared_library.load_rwkv_shared_library()
        library.rwkv_quantize_model_file(temp_output, args.dest_path, args.data_type)

    print("Done")


if __name__ == "__main__":
    try:
        main()
    except Exception as e:
        print(e)
        with open("error.txt", "w") as f:
            f.write(str(e))
```
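For quantized targets, `main()` first writes an FP16 file to a temporary path and then calls `rwkv_quantize_model_file` on it. The temporary path comes from `dest_path`, with the quantization tag replaced by `fp16`.

Two details of `r"Q[4,5,8]_[0,1]"` are worth knowing:

- Inside a character class the commas are literal, so `[458]_[01]` is the tighter spelling.
- When `dest_path` contains no tag, the temporary path equals `dest_path`. The FP16 intermediate and the quantized output would then share one file. Whether `rwkv_quantize_model_file` tolerates that is not shown here.

Below is a sketch of the substitution. It also includes a hypothetical `safe_temp_path` fallback, which is a suggestion and not part of the script.

```python
import re

# Pattern as written in main(); the commas inside [...] are matched literally.
QUANT_TAG = r"Q[4,5,8]_[0,1]"


def temp_fp16_path(dest_path: str) -> str:
    """Temporary FP16 path, computed the way main() does it."""
    return re.sub(QUANT_TAG, "fp16", dest_path)


def safe_temp_path(dest_path: str) -> str:
    """Hypothetical variant that never returns dest_path itself."""
    temp = re.sub(r"Q[458]_[01]", "fp16", dest_path)
    return temp if temp != dest_path else dest_path + ".fp16.tmp"


print(temp_fp16_path("models/rwkv-Q5_1.bin"))  # models/rwkv-fp16.bin
print(temp_fp16_path("models/rwkv.bin"))       # models/rwkv.bin (same as dest_path)
print(safe_temp_path("models/rwkv.bin"))       # models/rwkv.bin.fp16.tmp
```

The Go caller `ConvertGGML` passes whatever `outPath` the frontend supplies. Whether that name always carries a `Q5_1` tag is outside this comparison.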

```python
@@ -32,6 +32,11 @@ def get_args(args: Union[Sequence[str], None] = None):
        action="store_true",
        help="whether to use rwkv-beta (default: False)",
    )
    group.add_argument(
        "--rwkv.cpp",
        action="store_true",
        help="whether to use rwkv.cpp (default: False)",
    )
    args = parser.parse_args(args)

    return args
```
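argparse derives an option's destination by stripping the leading dashes and turning any remaining `-` into `_`. It leaves `.` alone. So `--rwkv-beta` lands on `args.rwkv_beta`, while `--rwkv.cpp` is stored under the key `rwkv.cpp` and has to be read with `getattr`. In the small sketch below, the parser and group are stand-ins, since the real setup in `get_args` is outside the hunk.

```python
import argparse

parser = argparse.ArgumentParser()
group = parser.add_argument_group("backend")  # stand-in for the real group in get_args
group.add_argument("--rwkv-beta", action="store_true", help="whether to use rwkv-beta (default: False)")
group.add_argument("--rwkv.cpp", action="store_true", help="whether to use rwkv.cpp (default: False)")

args = parser.parse_args(["--rwkv.cpp"])
print(args.rwkv_beta)             # False
print(getattr(args, "rwkv.cpp"))  # True; `args.rwkv.cpp` would raise AttributeError
```

Passing `dest="rwkv_cpp"` to `add_argument` would keep the `--rwkv.cpp` spelling on the command line while exposing a plain attribute. That is a design alternative, not what the commit does.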

@@ -49,19 +49,13 @@ def switch_model(body: SwitchModelBody, response: Response, request: Request):

|

||||

if body.model == "":

|

||||

return "success"

|

||||

|

||||

STRATEGY_REGEX = r"^(?:(?:^|->) *(?:cuda(?::[\d]+)?|cpu|mps|dml) (?:fp(?:16|32)|bf16)(?:i8|i4|i3)?(?: \*[\d]+\+?)? *)+$"

|

||||

if not re.match(STRATEGY_REGEX, body.strategy):

|

||||

raise HTTPException(

|

||||

Status.HTTP_400_BAD_REQUEST,

|

||||

"Invalid strategy. Please read https://pypi.org/project/rwkv/",

|

||||

)

|

||||

devices = set(

|

||||

[

|

||||

x.strip().split(" ")[0].replace("cuda:0", "cuda")

|

||||

for x in body.strategy.split("->")

|

||||

]

|

||||

)

|

||||

print(f"Devices: {devices}")

|

||||

print(f"Strategy Devices: {devices}")

|

||||

# if len(devices) > 1:

|

||||

# state_cache.disable_state_cache()

|

||||

# else:

|

||||

|

||||
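`switch_model` validates the strategy string against `STRATEGY_REGEX` and then collects the set of devices it names, normalizing `cuda:0` to `cuda`. The sketch below reproduces that logic as a standalone function so it can be tried outside the server. `strategy_devices` is a hypothetical name, and raising `ValueError` stands in for the endpoint's `HTTPException`.

```python
import re
from typing import Set

# Pattern copied from switch_model above.
STRATEGY_REGEX = r"^(?:(?:^|->) *(?:cuda(?::[\d]+)?|cpu|mps|dml) (?:fp(?:16|32)|bf16)(?:i8|i4|i3)?(?: \*[\d]+\+?)? *)+$"


def strategy_devices(strategy: str) -> Set[str]:
    """Hypothetical helper: validate a strategy and return the devices it uses."""
    if not re.match(STRATEGY_REGEX, strategy):
        raise ValueError(f"Invalid strategy: {strategy!r}")
    # Each "->"-separated stage starts with its device name, e.g. "cuda:0 fp16 *10".
    return {x.strip().split(" ")[0].replace("cuda:0", "cuda") for x in strategy.split("->")}


print(strategy_devices("cuda:0 fp16 *10 -> cpu fp32"))
```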

@@ -1,6 +1,6 @@

|

||||

import io

|

||||

import global_var

|

||||

from fastapi import APIRouter, HTTPException, status

|

||||

from fastapi import APIRouter, HTTPException, UploadFile, status

|

||||

from starlette.responses import StreamingResponse

|

||||

from pydantic import BaseModel

|

||||

from utils.midi import *

|

||||

@@ -33,6 +33,16 @@ def text_to_midi(body: TextToMidiBody):

|

||||

return StreamingResponse(mid_data, media_type="audio/midi")

|

||||

|

||||

|

||||

@router.post("/midi-to-text", tags=["MIDI"])

|

||||

async def midi_to_text(file_data: UploadFile):

|

||||

vocab_config = "backend-python/utils/midi_vocab_config.json"

|

||||

cfg = VocabConfig.from_json(vocab_config)

|

||||

mid = mido.MidiFile(file=file_data.file)

|

||||

text = convert_midi_to_str(cfg, mid)

|

||||

|

||||

return {"text": text}

|

||||

|

||||

|

||||

class TxtToMidiBody(BaseModel):

|

||||

txt_path: str

|

||||

midi_path: str

|

||||

|

||||

@@ -90,10 +90,15 @@ def add_state(body: AddStateBody):

|

||||

|

||||

try:

|

||||

id: int = trie.insert(body.prompt)

|

||||

devices: List[torch.device] = [tensor.device for tensor in body.state]

|

||||

devices: List[torch.device] = [

|

||||

(tensor.device if hasattr(tensor, "device") else torch.device("cpu"))

|

||||

for tensor in body.state

|

||||

]

|

||||

dtrie[id] = {

|

||||

"tokens": copy.deepcopy(body.tokens),

|

||||

"state": [tensor.cpu() for tensor in body.state],

|

||||

"state": [tensor.cpu() for tensor in body.state]

|

||||

if hasattr(body.state[0], "device")

|

||||

else copy.deepcopy(body.state),

|

||||

"logits": copy.deepcopy(body.logits),

|

||||

"devices": devices,

|

||||

}

|

||||

@@ -185,7 +190,9 @@ def longest_prefix_state(body: LongestPrefixStateBody, request: Request):

|

||||

return {

|

||||

"prompt": prompt,

|

||||

"tokens": v["tokens"],

|

||||

"state": [tensor.to(devices[i]) for i, tensor in enumerate(v["state"])],

|

||||

"state": [tensor.to(devices[i]) for i, tensor in enumerate(v["state"])]

|

||||

if hasattr(v["state"][0], "device")

|

||||

else v["state"],

|

||||

"logits": v["logits"],

|

||||

}

|

||||

else:

|

||||

|

||||
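The new `hasattr(..., "device")` checks in `add_state` and `longest_prefix_state` let the state cache accept state objects that are not PyTorch tensors. These are presumably the numpy state produced by the rwkv.cpp backend (`model.py` passes `use_numpy=True`). The store-and-restore round trip can be sketched without torch. `FakeTensor`, `store_state` and `restore_state` are hypothetical stand-ins, and devices are plain strings here rather than `torch.device` objects.

```python
import copy
from typing import Any, Dict, List


class FakeTensor:
    """Hypothetical stand-in for torch.Tensor with .device, .cpu() and .to()."""

    def __init__(self, values: List[float], device: str = "cuda") -> None:
        self.values = list(values)
        self.device = device

    def cpu(self) -> "FakeTensor":
        return FakeTensor(self.values, "cpu")

    def to(self, device: str) -> "FakeTensor":
        return FakeTensor(self.values, device)


def store_state(state: List[Any]) -> Dict[str, Any]:
    # Like add_state: remember each entry's device, falling back to "cpu"
    # for objects without a .device attribute.
    devices = [getattr(t, "device", "cpu") for t in state]
    saved = [t.cpu() for t in state] if hasattr(state[0], "device") else copy.deepcopy(state)
    return {"state": saved, "devices": devices}


def restore_state(entry: Dict[str, Any]) -> List[Any]:
    # Like longest_prefix_state: move tensors back to their devices, return other objects as-is.
    state = entry["state"]
    if hasattr(state[0], "device"):
        return [t.to(entry["devices"][i]) for i, t in enumerate(state)]
    return state
```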

BIN

backend-python/rwkv_pip/cpp/librwkv.dylib

vendored

Normal file

Binary file not shown.

BIN

backend-python/rwkv_pip/cpp/librwkv.so

vendored

Normal file

Binary file not shown.

14

backend-python/rwkv_pip/cpp/model.py

vendored

Normal file

@@ -0,0 +1,14 @@

|

||||

from typing import Any, List

|

||||

from . import rwkv_cpp_model

|

||||

from . import rwkv_cpp_shared_library

|

||||

|

||||

|

||||

class RWKV:

|

||||

def __init__(self, model_path: str, strategy=None):

|

||||

self.library = rwkv_cpp_shared_library.load_rwkv_shared_library()

|

||||

self.model = rwkv_cpp_model.RWKVModel(self.library, model_path)

|

||||

self.w = {}  # placeholder weights: only "emb.weight" is filled in, with n_vocab entries

|

||||

self.w["emb.weight"] = [0] * self.model.n_vocab

|

||||

|

||||

def forward(self, tokens: List[int], state: Any | None):

|

||||

return self.model.eval_sequence_in_chunks(tokens, state, use_numpy=True)

|

||||
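The `RWKV` wrapper in `model.py` exposes `forward(tokens, state)`, which returns `(logits, state)`. It also fills `w["emb.weight"]` with a placeholder list, presumably so that code deriving the vocabulary size from it keeps working. The sketch below shows a greedy decoding loop against that interface. `StubModel` is a hypothetical deterministic stand-in that lets the loop run without rwkv.cpp. With the real wrapper the logits are numpy arrays, and `int(numpy.argmax(logits))` would be the usual way to pick a token.

```python
from typing import Any, List, Optional, Tuple


class StubModel:
    """Hypothetical stand-in with the same forward() shape as the RWKV wrapper."""

    def __init__(self, n_vocab: int) -> None:
        self.w = {"emb.weight": [0] * n_vocab}

    def forward(self, tokens: List[int], state: Optional[Any]) -> Tuple[List[float], Any]:
        # The "state" is just a running token count; the highest logit is at count % n_vocab.
        count = (state or 0) + len(tokens)
        logits = [0.0] * len(self.w["emb.weight"])
        logits[count % len(logits)] = 1.0
        return logits, count


def greedy_generate(model: Any, prompt: List[int], max_new_tokens: int) -> List[int]:
    # Feed the whole prompt once, then one token per step,
    # always passing the returned state back into the next call.
    logits, state = model.forward(prompt, None)
    out: List[int] = []
    for _ in range(max_new_tokens):
        token = max(range(len(logits)), key=logits.__getitem__)
        out.append(token)
        logits, state = model.forward([token], state)
    return out


print(greedy_generate(StubModel(10), [1, 2, 3], 3))
```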

BIN

backend-python/rwkv_pip/cpp/rwkv.dll

vendored

Normal file

Binary file not shown.

369

backend-python/rwkv_pip/cpp/rwkv_cpp_model.py

vendored

Normal file

@@ -0,0 +1,369 @@

|

||||

import os

|

||||

import multiprocessing

|

||||

|

||||

# Pre-import PyTorch, if available.

|

||||

# This fixes "OSError: [WinError 127] The specified procedure could not be found".

|

||||

try:

|

||||

import torch

|

||||

except ModuleNotFoundError:

|

||||

pass

|

||||

|

||||

# I'm sure this is not strictly correct, but let's keep this crutch for now.

|

||||

try:

|

||||

import rwkv_cpp_shared_library

|

||||

except ModuleNotFoundError:

|

||||

from . import rwkv_cpp_shared_library

|

||||

|

||||

from typing import TypeVar, Optional, Tuple, List

|

||||

|

||||

# A value of this type is either a numpy ndarray or a PyTorch Tensor.

|

||||

NumpyArrayOrPyTorchTensor: TypeVar = TypeVar('NumpyArrayOrPyTorchTensor')

|

||||

|

||||

class RWKVModel:

|

||||

"""

|

||||

An RWKV model managed by rwkv.cpp library.

|

||||

"""

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

shared_library: rwkv_cpp_shared_library.RWKVSharedLibrary,

|

||||

model_path: str,

|

||||

thread_count: int = max(1, multiprocessing.cpu_count() // 2),

|

||||

gpu_layer_count: int = 0,

|

||||

**kwargs

|

||||

) -> None:

|

||||

"""

|

||||

Loads the model and prepares it for inference.

|

||||

In case of any error, this method will throw an exception.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

shared_library : RWKVSharedLibrary

|

||||

rwkv.cpp shared library.

|

||||

model_path : str

|

||||

Path to an RWKV model file in ggml format.

|

||||

thread_count : int

|

||||

Number of threads to use. If not set, defaults to half the CPU count (at least 1).

|

||||

gpu_layer_count : int

|

||||

Number of layers to offload onto the GPU; must be >= 0.

|

||||

See the documentation of `gpu_offload_layers` for details about layer offloading.

|

||||

"""

|

||||

|

||||

if 'gpu_layers_count' in kwargs:

|

||||

gpu_layer_count = kwargs['gpu_layers_count']

|

||||

|

||||

assert os.path.isfile(model_path), f'{model_path} is not a file'

|

||||

assert thread_count > 0, 'Thread count must be > 0'

|

||||

assert gpu_layer_count >= 0, 'GPU layer count must be >= 0'

|

||||

|

||||

self._library: rwkv_cpp_shared_library.RWKVSharedLibrary = shared_library

|

||||

|

||||

self._ctx: rwkv_cpp_shared_library.RWKVContext = self._library.rwkv_init_from_file(model_path, thread_count)

|

||||

|

||||

if gpu_layer_count > 0:

|

||||

self.gpu_offload_layers(gpu_layer_count)

|

||||

|

||||

self._state_buffer_element_count: int = self._library.rwkv_get_state_buffer_element_count(self._ctx)

|

||||

self._logits_buffer_element_count: int = self._library.rwkv_get_logits_buffer_element_count(self._ctx)

|

||||

|

||||

self._valid: bool = True

|

||||

|

||||

def gpu_offload_layers(self, layer_count: int) -> bool:

|

||||

"""

|

||||

Offloads the specified number of model layers onto the GPU. Offloaded layers are evaluated using cuBLAS or CLBlast.

|

||||

For the purposes of this function, the model head (unembedding matrix) is treated as an additional layer:

|

||||

- pass `model.n_layer` to offload all layers except model head

|

||||

- pass `model.n_layer + 1` to offload all layers, including model head

|

||||

|

||||

Returns true if at least one layer was offloaded.

|

||||

If rwkv.cpp was compiled without cuBLAS and CLBlast support, this function is a no-op and always returns false.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

layer_count : int

|

||||

Number of layers to offload onto the GPU; must be >= 0.

|

||||

"""

|

||||

|

||||

assert layer_count >= 0, 'Layer count must be >= 0'

|

||||

|

||||

return self._library.rwkv_gpu_offload_layers(self._ctx, layer_count)

|

||||

|

||||

@property

|

||||

def n_vocab(self) -> int:

|

||||

return self._library.rwkv_get_n_vocab(self._ctx)

|

||||

|

||||

@property

|

||||

def n_embed(self) -> int:

|

||||

return self._library.rwkv_get_n_embed(self._ctx)

|

||||

|

||||

@property

|

||||

def n_layer(self) -> int:

|

||||

return self._library.rwkv_get_n_layer(self._ctx)

|

||||

|

||||

def eval(

|

||||

self,

|

||||

token: int,

|

||||

state_in: Optional[NumpyArrayOrPyTorchTensor],

|

||||

state_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

logits_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

use_numpy: bool = False

|

||||

) -> Tuple[NumpyArrayOrPyTorchTensor, NumpyArrayOrPyTorchTensor]:

|

||||

"""

|

||||

Evaluates the model for a single token.

|

||||

In case of any error, this method will throw an exception.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

token : int

|

||||

Index of the next token to be seen by the model. Must be in range 0 <= token < n_vocab.

|

||||

state_in : Optional[NumpyArrayOrPyTorchTensor]

|

||||

State from the previous call of this method. If this is the first pass, set it to None.

|

||||

state_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for state. If provided, must be of type float32, contiguous and of shape (state_buffer_element_count).

|

||||

logits_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for logits. If provided, must be of type float32, contiguous and of shape (logits_buffer_element_count).

|

||||

use_numpy : bool

|

||||

If set to True, numpy ndarrays will be created instead of PyTorch Tensors.

|

||||

This parameter is ignored if any tensor parameter is not None; in that case,

|

||||

the type of the returned tensors will match the type of the received tensors.

|

||||

|

||||

Returns

|

||||

-------

|

||||

logits, state

|

||||

Logits vector of shape (n_vocab); state for the next step.

|

||||

"""

|

||||

|

||||

assert self._valid, 'Model was freed'

|

||||

|

||||

use_numpy = self._detect_numpy_usage([state_in, state_out, logits_out], use_numpy)

|

||||

|

||||

if state_in is not None:

|

||||

self._validate_tensor(state_in, 'state_in', self._state_buffer_element_count)

|

||||

|

||||

state_in_ptr = self._get_data_ptr(state_in)

|

||||

else:

|

||||

state_in_ptr = 0

|

||||

|

||||

if state_out is not None:

|

||||

self._validate_tensor(state_out, 'state_out', self._state_buffer_element_count)

|

||||

else:

|

||||

state_out = self._zeros_float32(self._state_buffer_element_count, use_numpy)

|

||||

|

||||

if logits_out is not None:

|

||||

self._validate_tensor(logits_out, 'logits_out', self._logits_buffer_element_count)

|

||||

else:

|

||||

logits_out = self._zeros_float32(self._logits_buffer_element_count, use_numpy)

|

||||

|

||||

self._library.rwkv_eval(

|

||||

self._ctx,

|

||||

token,

|

||||

state_in_ptr,

|

||||

self._get_data_ptr(state_out),

|

||||

self._get_data_ptr(logits_out)

|

||||

)

|

||||

|

||||

return logits_out, state_out

|

||||

|

||||

def eval_sequence(

|

||||

self,

|

||||

tokens: List[int],

|

||||

state_in: Optional[NumpyArrayOrPyTorchTensor],

|

||||

state_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

logits_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

use_numpy: bool = False

|

||||

) -> Tuple[NumpyArrayOrPyTorchTensor, NumpyArrayOrPyTorchTensor]:

|

||||

"""

|

||||

Evaluates the model for a sequence of tokens.

|

||||

|

||||

NOTE ON GGML NODE LIMIT

|

||||

|

||||

ggml has a hard-coded limit on the maximum number of nodes in a computation graph. The sequence graph is built in a way that quickly exceeds

|

||||

this limit when using large models and/or large sequence lengths.

|

||||

Fortunately, rwkv.cpp's fork of ggml has an increased limit, which was tested to work for sequence lengths up to 64 with 14B models.

|

||||

|

||||

If you get `GGML_ASSERT: ...\\ggml.c:16941: cgraph->n_nodes < GGML_MAX_NODES`, this means you've exceeded the limit.

|

||||

To get rid of the assertion failure, reduce the model size and/or sequence length.

|

||||

|

||||

In case of any error, this method will throw an exception.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

tokens : List[int]

|

||||

Indices of the next tokens to be seen by the model. Must be in range 0 <= token < n_vocab.

|

||||

state_in : Optional[NumpyArrayOrPyTorchTensor]

|

||||

State from the previous call of this method. If this is the first pass, set it to None.

|

||||

state_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for state. If provided, must be of type float32, contiguous and of shape (state_buffer_element_count).

|

||||

logits_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for logits. If provided, must be of type float32, contiguous and of shape (logits_buffer_element_count).

|

||||

use_numpy : bool

|

||||

If set to True, numpy ndarrays will be created instead of PyTorch Tensors.

|

||||

This parameter is ignored if any tensor parameter is not None; in that case,

|

||||

the type of the returned tensors will match the type of the received tensors.

|

||||

|

||||

Returns

|

||||

-------

|

||||

logits, state

|

||||

Logits vector of shape (n_vocab); state for the next step.

|

||||

"""

|

||||

|

||||

assert self._valid, 'Model was freed'

|

||||

|

||||

use_numpy = self._detect_numpy_usage([state_in, state_out, logits_out], use_numpy)

|

||||

|

||||

if state_in is not None:

|

||||

self._validate_tensor(state_in, 'state_in', self._state_buffer_element_count)

|

||||

|

||||

state_in_ptr = self._get_data_ptr(state_in)

|

||||

else:

|

||||

state_in_ptr = 0

|

||||

|

||||

if state_out is not None:

|

||||

self._validate_tensor(state_out, 'state_out', self._state_buffer_element_count)

|

||||

else:

|

||||

state_out = self._zeros_float32(self._state_buffer_element_count, use_numpy)

|

||||

|

||||

if logits_out is not None:

|

||||

self._validate_tensor(logits_out, 'logits_out', self._logits_buffer_element_count)

|

||||

else:

|

||||

logits_out = self._zeros_float32(self._logits_buffer_element_count, use_numpy)

|

||||

|

||||

self._library.rwkv_eval_sequence(

|

||||

self._ctx,

|

||||

tokens,

|

||||

state_in_ptr,

|

||||

self._get_data_ptr(state_out),

|

||||

self._get_data_ptr(logits_out)

|

||||

)

|

||||

|

||||

return logits_out, state_out

|

||||

|

||||

def eval_sequence_in_chunks(

|

||||

self,

|

||||

tokens: List[int],

|

||||

state_in: Optional[NumpyArrayOrPyTorchTensor],

|

||||

state_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

logits_out: Optional[NumpyArrayOrPyTorchTensor] = None,

|

||||

chunk_size: int = 16,

|

||||

use_numpy: bool = False

|

||||

) -> Tuple[NumpyArrayOrPyTorchTensor, NumpyArrayOrPyTorchTensor]:

|

||||

"""

|

||||

Evaluates the model for a sequence of tokens using `eval_sequence`, splitting a potentially long sequence into fixed-length chunks.

|

||||

This function is useful for processing complete prompts and user input in chat and role-playing use cases.

|

||||

It is recommended to use this function instead of `eval_sequence` to avoid mistakes and get maximum performance.

|

||||

|

||||

Chunking allows processing sequences of thousands of tokens without reaching ggml's node limit or consuming too much memory.

|

||||

The recommended chunk size is 16. For maximum performance, try different chunk sizes in the range [2..64]

|

||||

and choose the one that works best for your use case.

|

||||

|

||||

In case of any error, this method will throw an exception.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

tokens : List[int]

|

||||

Indices of the next tokens to be seen by the model. Must be in range 0 <= token < n_vocab.

|

||||

chunk_size : int

|

||||

Size of each chunk in tokens, must be positive.

|

||||

state_in : Optional[NumpyArrayOrPyTorchTensor]

|

||||

State from the previous call of this method. If this is the first pass, set it to None.

|

||||

state_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for state. If provided, must be of type float32, contiguous and of shape (state_buffer_element_count).

|

||||

logits_out : Optional[NumpyArrayOrPyTorchTensor]

|

||||

Optional output tensor for logits. If provided, must be of type float32, contiguous and of shape (logits_buffer_element_count).

|

||||

use_numpy : bool

|

||||

If set to True, numpy ndarrays will be created instead of PyTorch Tensors.

|

||||

This parameter is ignored if any tensor parameter is not None; in that case,

|

||||

the type of the returned tensors will match the type of the received tensors.

|

||||

|

||||

Returns

|

||||

-------

|

||||

logits, state

|

||||

Logits vector of shape (n_vocab); state for the next step.

|

||||

"""

|

||||

|

||||

assert self._valid, 'Model was freed'

|

||||

|

||||

use_numpy = self._detect_numpy_usage([state_in, state_out, logits_out], use_numpy)

|

||||

|

||||

if state_in is not None:

|

||||

self._validate_tensor(state_in, 'state_in', self._state_buffer_element_count)

|

||||

|

||||

state_in_ptr = self._get_data_ptr(state_in)

|

||||

else:

|

||||

state_in_ptr = 0

|

||||

|

||||

if state_out is not None:

|

||||

self._validate_tensor(state_out, 'state_out', self._state_buffer_element_count)

|

||||

else:

|

||||

state_out = self._zeros_float32(self._state_buffer_element_count, use_numpy)

|

||||

|

||||

if logits_out is not None:

|

||||

self._validate_tensor(logits_out, 'logits_out', self._logits_buffer_element_count)

|

||||

else:

|

||||

logits_out = self._zeros_float32(self._logits_buffer_element_count, use_numpy)

|

||||

|

||||

self._library.rwkv_eval_sequence_in_chunks(

|

||||

self._ctx,

|

||||

tokens,

|

||||

chunk_size,

|

||||

state_in_ptr,

|

||||

self._get_data_ptr(state_out),

|

||||

self._get_data_ptr(logits_out)

|

||||

)

|

||||

|

||||

return logits_out, state_out

|

||||

|

||||

def free(self) -> None:

|

||||

"""

|

||||

Frees all allocated resources.

|

||||

In case of any error, this method will throw an exception.

|

||||

The object must not be used anymore after calling this method.

|

||||

"""

|

||||

|

||||

assert self._valid, 'Already freed'

|

||||

|

||||

self._valid = False

|

||||

|

||||

self._library.rwkv_free(self._ctx)

|

||||

|

||||

def __del__(self) -> None:

|

||||

# Free the context on GC in case user forgot to call free() explicitly.

|

||||

if hasattr(self, '_valid') and self._valid:

|

||||

self.free()

|

||||

|

||||

def _is_pytorch_tensor(self, tensor: NumpyArrayOrPyTorchTensor) -> bool:

|

||||

return hasattr(tensor, '__module__') and tensor.__module__ == 'torch'

|

||||

|

||||

def _detect_numpy_usage(self, tensors: List[Optional[NumpyArrayOrPyTorchTensor]], use_numpy_by_default: bool) -> bool:

|

||||

for tensor in tensors:

|

||||

if tensor is not None:

|

||||

return not self._is_pytorch_tensor(tensor)

|

||||

|

||||

return use_numpy_by_default

|

||||

|

||||

def _validate_tensor(self, tensor: NumpyArrayOrPyTorchTensor, name: str, size: int) -> None:

|

||||

if self._is_pytorch_tensor(tensor):

|

||||

tensor: torch.Tensor = tensor

|

||||

assert tensor.device == torch.device('cpu'), f'{name} is not on CPU'

|

||||

assert tensor.dtype == torch.float32, f'{name} is not of type float32'

|

||||

assert tensor.shape == (size,), f'{name} has invalid shape {tensor.shape}, expected ({size})'

|

||||

assert tensor.is_contiguous(), f'{name} is not contiguous'

|

||||

else:

|

||||

import numpy as np

|

||||

tensor: np.ndarray = tensor

|

||||

assert tensor.dtype == np.float32, f'{name} is not of type float32'

|

||||

assert tensor.shape == (size,), f'{name} has invalid shape {tensor.shape}, expected ({size})'

|

||||

assert tensor.data.contiguous, f'{name} is not contiguous'

|

||||

|

||||

def _get_data_ptr(self, tensor: NumpyArrayOrPyTorchTensor):

|

||||

if self._is_pytorch_tensor(tensor):

|

||||

return tensor.data_ptr()

|

||||

else:

|

||||

return tensor.ctypes.data

|

||||

|

||||

def _zeros_float32(self, element_count: int, use_numpy: bool) -> NumpyArrayOrPyTorchTensor:

|

||||

if use_numpy:

|

||||

import numpy as np

|

||||

return np.zeros(element_count, dtype=np.float32)

|

||||

else:

|

||||

return torch.zeros(element_count, dtype=torch.float32, device='cpu')

|

||||
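`eval_sequence_in_chunks` splits a long token list into pieces of at most `chunk_size` tokens and passes the state from each piece into the next. Evaluating N tokens therefore takes ceil(N / chunk_size) sequence evaluations, and only the logits of the last piece are returned. The loop below sketches that idea in plain Python. It is not the rwkv.cpp C implementation, and the `eval_in_chunks` name and the `eval_sequence(tokens, state)` callback signature are assumptions for illustration.

```python
from typing import Any, Callable, List, Optional, Tuple

# Hypothetical callback shape: (tokens, state_in) -> (logits, state_out).
EvalFn = Callable[[List[int], Optional[Any]], Tuple[Any, Any]]


def eval_in_chunks(eval_sequence: EvalFn, tokens: List[int], state: Optional[Any],
                   chunk_size: int = 16) -> Tuple[Any, Any]:
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not tokens:
        raise ValueError("tokens must not be empty")
    logits = None
    for start in range(0, len(tokens), chunk_size):
        # Each chunk continues from the state produced by the previous one.
        logits, state = eval_sequence(tokens[start:start + chunk_size], state)
    return logits, state


calls: List[List[int]] = []


def fake_eval(chunk: List[int], state: Optional[List[int]]) -> Tuple[int, List[int]]:
    # Records each chunk; the "state" is every token seen so far, the "logits" the last token.
    calls.append(list(chunk))
    return chunk[-1], (state or []) + chunk


logits, state = eval_in_chunks(fake_eval, list(range(40)), None, chunk_size=16)
print([len(c) for c in calls])
```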

444

backend-python/rwkv_pip/cpp/rwkv_cpp_shared_library.py

vendored

Normal file

@@ -0,0 +1,444 @@

|

||||

import os

|

||||

import sys

|

||||

import ctypes

|

||||

import pathlib

|

||||

import platform

|

||||

from typing import Optional, List, Tuple, Callable

|

||||

|

||||

QUANTIZED_FORMAT_NAMES: Tuple[str, str, str, str, str] = (

|

||||

'Q4_0',

|

||||

'Q4_1',

|

||||

'Q5_0',

|

||||

'Q5_1',

|

||||

'Q8_0'

|

||||

)

|

||||

|

||||

P_FLOAT = ctypes.POINTER(ctypes.c_float)

|

||||

P_INT = ctypes.POINTER(ctypes.c_int32)

|

||||

|

||||

class RWKVContext:

|

||||

|

||||

def __init__(self, ptr: ctypes.pointer) -> None:

|

||||

self.ptr: ctypes.pointer = ptr

|

||||

|

||||

class RWKVSharedLibrary:

|

||||

"""

|

||||

Python wrapper around rwkv.cpp shared library.

|

||||

"""

|

||||

|

||||

def __init__(self, shared_library_path: str) -> None:

|

||||

"""

|

||||

Loads the shared library from specified file.

|

||||

In case of any error, this method will throw an exception.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

shared_library_path : str

|

||||

Path to the rwkv.cpp shared library. On Windows, it would look like 'rwkv.dll'; on Linux, 'librwkv.so'; on macOS, 'librwkv.dylib'.

|

||||

"""

|

||||

# On Python 3.8 and later, ctypes on Windows resolves DLL dependencies differently,

|

||||

# so the custom DLL is loaded with winmode=0 to prevent loading failures (see the link below).

|

||||

# https://docs.python.org/3/whatsnew/3.8.html#ctypes

|

||||

if platform.system().lower() == 'windows':

|

||||

self.library = ctypes.CDLL(shared_library_path, winmode=0)

|

||||

else:

|

||||

self.library = ctypes.cdll.LoadLibrary(shared_library_path)

|

||||

|

||||

self.library.rwkv_init_from_file.argtypes = [ctypes.c_char_p, ctypes.c_uint32]

|

||||

self.library.rwkv_init_from_file.restype = ctypes.c_void_p

|

||||

|

||||

self.library.rwkv_gpu_offload_layers.argtypes = [ctypes.c_void_p, ctypes.c_uint32]

|

||||

self.library.rwkv_gpu_offload_layers.restype = ctypes.c_bool

|

||||

|

||||

self.library.rwkv_eval.argtypes = [

|

||||

ctypes.c_void_p, # ctx

|

||||

ctypes.c_int32, # token

|

||||

P_FLOAT, # state_in

|

||||

P_FLOAT, # state_out

|

||||

P_FLOAT # logits_out

|

||||

]

|

||||

self.library.rwkv_eval.restype = ctypes.c_bool

|

||||

|

||||

self.library.rwkv_eval_sequence.argtypes = [

|

||||

ctypes.c_void_p, # ctx

|

||||

P_INT, # tokens

|

||||

ctypes.c_size_t, # token count

|

||||

P_FLOAT, # state_in

|

||||

P_FLOAT, # state_out

|

||||

P_FLOAT # logits_out

|

||||

]

|

||||

self.library.rwkv_eval_sequence.restype = ctypes.c_bool

|

||||

|

||||

self.library.rwkv_eval_sequence_in_chunks.argtypes = [

|

||||

ctypes.c_void_p, # ctx

|

||||

P_INT, # tokens

|

||||

ctypes.c_size_t, # token count

|

||||

ctypes.c_size_t, # chunk size

|

||||

P_FLOAT, # state_in

|

||||

P_FLOAT, # state_out

|

||||

P_FLOAT # logits_out

|

||||

]

|

||||

self.library.rwkv_eval_sequence_in_chunks.restype = ctypes.c_bool

|

||||

|

||||

self.library.rwkv_get_n_vocab.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_get_n_vocab.restype = ctypes.c_size_t

|

||||

|

||||

self.library.rwkv_get_n_embed.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_get_n_embed.restype = ctypes.c_size_t

|

||||

|

||||

self.library.rwkv_get_n_layer.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_get_n_layer.restype = ctypes.c_size_t

|

||||

|

||||

self.library.rwkv_get_state_buffer_element_count.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_get_state_buffer_element_count.restype = ctypes.c_uint32

|

||||

|

||||

self.library.rwkv_get_logits_buffer_element_count.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_get_logits_buffer_element_count.restype = ctypes.c_uint32

|

||||

|

||||

self.library.rwkv_free.argtypes = [ctypes.c_void_p]

|

||||

self.library.rwkv_free.restype = None

|

||||

|

||||

self.library.rwkv_quantize_model_file.argtypes = [ctypes.c_char_p, ctypes.c_char_p, ctypes.c_char_p]

|

||||

self.library.rwkv_quantize_model_file.restype = ctypes.c_bool

|

||||

|

||||

self.library.rwkv_get_system_info_string.argtypes = []

|

||||

self.library.rwkv_get_system_info_string.restype = ctypes.c_char_p

|

||||

|

||||

self.nullptr = ctypes.cast(0, ctypes.c_void_p)

|

||||

|

||||

def rwkv_init_from_file(self, model_file_path: str, thread_count: int) -> RWKVContext:

|

||||

"""

|

||||

Loads the model from a file and prepares it for inference.

|

||||

Throws an exception in case of any error. Error messages are printed to stderr.

|

||||

|

||||

Parameters

|

||||

----------

|

||||

model_file_path : str

|

||||

Path to model file in ggml format.

|

||||

thread_count : int

|

||||

Number of threads to use; must be positive.

|

||||

"""

|

||||

|

||||

ptr = self.library.rwkv_init_from_file(model_file_path.encode('utf-8'), ctypes.c_uint32(thread_count))

|

||||

|

||||

assert ptr is not None, 'rwkv_init_from_file failed, check stderr'

|

||||

|

||||

return RWKVContext(ptr)

|

||||

|

||||

def rwkv_gpu_offload_layers(self, ctx: RWKVContext, layer_count: int) -> bool:

|

||||

"""

|

||||

Offloads the specified number of model layers onto the GPU. Offloaded layers are evaluated using cuBLAS or CLBlast.

|

||||

For the purposes of this function, model head (unembedding matrix) is treated as an additional layer:

|

||||

- pass `rwkv_get_n_layer(ctx)` to offload all layers except model head

|

||||

- pass `rwkv_get_n_layer(ctx) + 1` to offload all layers, including model head

|

||||