Compare commits

No commits in common. "master" and "v1.4.0" have entirely different histories.

4

.gitattributes

vendored

4

.gitattributes

vendored

@ -1,11 +1,7 @@

|

||||

* text=auto eol=lf

|

||||

|

||||

backend-python/rwkv_pip/** linguist-vendored

|

||||

backend-python/wkv_cuda_utils/** linguist-vendored

|

||||

backend-python/get-pip.py linguist-vendored

|

||||

backend-python/convert_model.py linguist-vendored

|

||||

backend-python/convert_safetensors.py linguist-vendored

|

||||

backend-python/convert_pytorch_to_ggml.py linguist-vendored

|

||||

backend-python/utils/midi.py linguist-vendored

|

||||

build/** linguist-vendored

|

||||

finetune/lora/** linguist-vendored

|

||||

|

||||

9

.github/dependabot.yml

vendored

9

.github/dependabot.yml

vendored

@ -1,9 +0,0 @@

|

||||

version: 2

|

||||

updates:

|

||||

- package-ecosystem: "github-actions"

|

||||

directory: "/"

|

||||

schedule:

|

||||

interval: "weekly"

|

||||

commit-message:

|

||||

prefix: "chore"

|

||||

include: "scope"

|

||||

171

.github/workflows/docker.yml

vendored

171

.github/workflows/docker.yml

vendored

@ -1,171 +0,0 @@

|

||||

name: Publish Docker Image

|

||||

on: [push]

|

||||

|

||||

concurrency:

|

||||

group: ${{ github.ref }}-${{ github.workflow }}

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

docker_build:

|

||||

name: Build ${{ matrix.arch }} Image

|

||||

runs-on: ubuntu-latest

|

||||

|

||||

strategy:

|

||||

matrix:

|

||||

include:

|

||||

- arch: amd64

|

||||

name: amd64

|

||||

# - arch: arm64

|

||||

# name: arm64

|

||||

|

||||

steps:

|

||||

- name: Free up disk spaces

|

||||

run: |

|

||||

sudo rm -rf /usr/share/dotnet || true

|

||||

sudo rm -rf /opt/ghc || true

|

||||

sudo rm -rf "/usr/local/share/boost" || true

|

||||

sudo rm -rf "$AGENT_TOOLSDIRECTORY" || true

|

||||

|

||||

- name: Get lowercase string for the repository name

|

||||

id: lowercase-repo-name

|

||||

uses: ASzc/change-string-case-action@v2

|

||||

with:

|

||||

string: ${{ github.event.repository.name }}

|

||||

|

||||

- name: Checkout base

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

fetch-depth: 0

|

||||

|

||||

- name: Cache Docker layers

|

||||

uses: actions/cache@v2

|

||||

with:

|

||||

path: /tmp/.buildx-cache

|

||||

key: ${{ github.ref }}-${{ matrix.arch }}

|

||||

restore-keys: |

|

||||

${{ github.ref }}-${{ matrix.arch }}

|

||||

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v2

|

||||

with:

|

||||

platforms: linux/${{ matrix.arch }}

|

||||

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v2

|

||||

|

||||

- name: Docker login

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

username: ${{ secrets.DOCKER_USERNAME }}

|

||||

password: ${{ secrets.DOCKER_PASSWORD }}

|

||||

|

||||

- name: Get commit SHA

|

||||

id: vars

|

||||

run: echo "::set-output name=sha_short::$(git rev-parse --short HEAD)"

|

||||

|

||||

- name: Build and export

|

||||

id: build

|

||||

if: github.ref == 'refs/heads/master'

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

push: true

|

||||

platforms: linux/${{ matrix.arch }}

|

||||

tags: ${{ secrets.DOCKER_USERNAME }}/${{ steps.lowercase-repo-name.outputs.lowercase }}:${{ matrix.name }}-latest

|

||||

build-args: |

|

||||

SHA=${{ steps.vars.outputs.sha_short }}

|

||||

outputs: type=image,push=true

|

||||

cache-from: type=local,src=/tmp/.buildx-cache

|

||||

cache-to: type=local,dest=/tmp/.buildx-cache

|

||||

|

||||

- name: Replace tag without `v`

|

||||

if: startsWith(github.ref, 'refs/tags/')

|

||||

uses: actions/github-script@v1

|

||||

id: version

|

||||

with:

|

||||

script: |

|

||||

return context.payload.ref.replace(/\/?refs\/tags\/v/, '')

|

||||

result-encoding: string

|

||||

|

||||

- name: Build release and export

|

||||

id: build_rel

|

||||

if: startsWith(github.ref, 'refs/tags/')

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

push: true

|

||||

platforms: linux/${{ matrix.arch }}

|

||||

tags: ${{ secrets.DOCKER_USERNAME }}/${{ steps.lowercase-repo-name.outputs.lowercase }}:${{ matrix.name }}-${{steps.version.outputs.result}}

|

||||

build-args: |

|

||||

SHA=${{ steps.version.outputs.result }}

|

||||

outputs: type=image,push=true

|

||||

cache-from: type=local,src=/tmp/.buildx-cache

|

||||

cache-to: type=local,dest=/tmp/.buildx-cache

|

||||

|

||||

- name: Save digest

|

||||

if: github.ref == 'refs/heads/master'

|

||||

run: echo ${{ steps.build.outputs.digest }} > /tmp/digest.txt

|

||||

|

||||

- name: Save release digest

|

||||

if: startsWith(github.ref, 'refs/tags/')

|

||||

run: echo ${{ steps.build_rel.outputs.digest }} > /tmp/digest.txt

|

||||

|

||||

- name: Upload artifact

|

||||

uses: actions/upload-artifact@v3

|

||||

with:

|

||||

name: digest_${{ matrix.name }}

|

||||

path: /tmp/digest.txt

|

||||

|

||||

manifests:

|

||||

name: Build manifests

|

||||

needs: [docker_build]

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: Get lowercase string for the repository name

|

||||

id: lowercase-repo-name

|

||||

uses: ASzc/change-string-case-action@v2

|

||||

with:

|

||||

string: ${{ github.event.repository.name }}

|

||||

|

||||

- name: Checkout base

|

||||

uses: actions/checkout@v2

|

||||

with:

|

||||

fetch-depth: 0

|

||||

|

||||

# https://github.com/docker/setup-qemu-action

|

||||

- name: Set up QEMU

|

||||

uses: docker/setup-qemu-action@v2

|

||||

|

||||

# https://github.com/docker/setup-buildx-action

|

||||

- name: Set up Docker Buildx

|

||||

uses: docker/setup-buildx-action@v2

|

||||

with:

|

||||

config-inline: |

|

||||

[worker.oci]

|

||||

max-parallelism = 1

|

||||

|

||||

- name: Download artifact

|

||||

uses: actions/download-artifact@v3

|

||||

with:

|

||||

path: /tmp/images/

|

||||

|

||||

- name: Docker login

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

username: ${{ secrets.DOCKER_USERNAME }}

|

||||

password: ${{ secrets.DOCKER_PASSWORD }}

|

||||

|

||||

- name: Replace tag without `v`

|

||||

if: startsWith(github.ref, 'refs/tags/')

|

||||

uses: actions/github-script@v1

|

||||

id: version

|

||||

with:

|

||||

script: |

|

||||

return context.payload.ref.replace(/\/?refs\/tags\/v/, '')

|

||||

result-encoding: string

|

||||

|

||||

- name: Merge and push manifest on master branch

|

||||

if: github.ref == 'refs/heads/master'

|

||||

run: python scripts/merge_manifest.py "${{ secrets.DOCKER_USERNAME }}/${{ steps.lowercase-repo-name.outputs.lowercase }}"

|

||||

|

||||

- name: Merge and push manifest on release

|

||||

if: startsWith(github.ref, 'refs/tags/')

|

||||

run: python scripts/merge_manifest.py "${{ secrets.DOCKER_USERNAME }}/${{ steps.lowercase-repo-name.outputs.lowercase }}" ${{steps.version.outputs.result}}

|

||||

114

.github/workflows/pre-release.yml

vendored

114

.github/workflows/pre-release.yml

vendored

@ -1,114 +0,0 @@

|

||||

name: pre-release

|

||||

on:

|

||||

workflow_dispatch:

|

||||

push:

|

||||

branches:

|

||||

- master

|

||||

paths:

|

||||

- "backend-python/**"

|

||||

tags-ignore:

|

||||

- "v*"

|

||||

|

||||

jobs:

|

||||

windows:

|

||||

runs-on: windows-2022

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

- uses: actions/setup-python@v5

|

||||

id: cp310

|

||||

with:

|

||||

python-version: "3.10"

|

||||

- uses: crazy-max/ghaction-chocolatey@v3

|

||||

with:

|

||||

args: install upx

|

||||

- run: |

|

||||

Start-BitsTransfer https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_windows_x86_64.exe ./backend-rust/webgpu_server.exe

|

||||

Start-BitsTransfer https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_windows_x86_64.exe ./backend-rust/web-rwkv-converter.exe

|

||||

Start-BitsTransfer https://github.com/josStorer/LibreHardwareMonitor.Console/releases/latest/download/LibreHardwareMonitor.Console.zip ./LibreHardwareMonitor.Console.zip

|

||||

Expand-Archive ./LibreHardwareMonitor.Console.zip -DestinationPath ./components/LibreHardwareMonitor.Console

|

||||

Start-BitsTransfer https://www.python.org/ftp/python/3.10.11/python-3.10.11-embed-amd64.zip ./python-3.10.11-embed-amd64.zip

|

||||

Expand-Archive ./python-3.10.11-embed-amd64.zip -DestinationPath ./py310

|

||||

$content=Get-Content "./py310/python310._pth"; $content | ForEach-Object {if ($_.ReadCount -eq 3) {"Lib\\site-packages"} else {$_}} | Set-Content ./py310/python310._pth

|

||||

./py310/python ./backend-python/get-pip.py

|

||||

./py310/python -m pip install Cython==3.0.4

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../include" -Destination "py310/include" -Recurse

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../libs" -Destination "py310/libs" -Recurse

|

||||

./py310/python -m pip install cyac==1.9

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

(Get-Content -Path ./backend-golang/app.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/app.go

|

||||

(Get-Content -Path ./backend-golang/utils.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/utils.go

|

||||

make

|

||||

Rename-Item -Path "build/bin/RWKV-Runner.exe" -NewName "RWKV-Runner_windows_x64.exe"

|

||||

|

||||

- uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: RWKV-Runner_windows_x64.exe

|

||||

path: build/bin/RWKV-Runner_windows_x64.exe

|

||||

|

||||

linux:

|

||||

runs-on: ubuntu-20.04

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_linux_x86_64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_linux_x86_64 -O ./backend-rust/web-rwkv-converter

|

||||

sudo apt-get update

|

||||

sudo apt-get install upx

|

||||

sudo apt-get install build-essential libgtk-3-dev libwebkit2gtk-4.0-dev libasound2-dev

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/webgpu/web_rwkv_py.cp310-win_amd64.pyd

|

||||

make

|

||||

mv build/bin/RWKV-Runner build/bin/RWKV-Runner_linux_x64

|

||||

|

||||

- uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: RWKV-Runner_linux_x64

|

||||

path: build/bin/RWKV-Runner_linux_x64

|

||||

|

||||

macos:

|

||||

runs-on: macos-13

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_darwin_aarch64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_darwin_aarch64 -O ./backend-rust/web-rwkv-converter

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

rm ./backend-python/rwkv_pip/webgpu/web_rwkv_py.cp310-win_amd64.pyd

|

||||

make

|

||||

cp build/darwin/Readme_Install.txt build/bin/Readme_Install.txt

|

||||

cp build/bin/RWKV-Runner.app/Contents/MacOS/RWKV-Runner build/bin/RWKV-Runner_darwin_universal

|

||||

cd build/bin && zip -r RWKV-Runner_macos_universal.zip RWKV-Runner.app Readme_Install.txt

|

||||

|

||||

- uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: RWKV-Runner_macos_universal.zip

|

||||

path: build/bin/RWKV-Runner_macos_universal.zip

|

||||

80

.github/workflows/release.yml

vendored

80

.github/workflows/release.yml

vendored

@ -11,14 +11,14 @@ env:

|

||||

|

||||

jobs:

|

||||

create-draft:

|

||||

runs-on: ubuntu-22.04

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- run: echo "VERSION=${GITHUB_REF_NAME#v}" >> $GITHUB_ENV

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

ref: master

|

||||

|

||||

- uses: jossef/action-set-json-field@v2.2

|

||||

- uses: jossef/action-set-json-field@v2.1

|

||||

with:

|

||||

file: manifest.json

|

||||

field: version

|

||||

@ -35,40 +35,32 @@ jobs:

|

||||

gh release create ${{github.ref_name}} -d -F CURRENT_CHANGE.md -t ${{github.ref_name}}

|

||||

|

||||

windows:

|

||||

runs-on: windows-2022

|

||||

runs-on: windows-latest

|

||||

needs: create-draft

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

- uses: actions/setup-go@v4

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

- uses: actions/setup-python@v5

|

||||

go-version: '1.20.5'

|

||||

- uses: actions/setup-python@v4

|

||||

id: cp310

|

||||

with:

|

||||

python-version: "3.10"

|

||||

- uses: crazy-max/ghaction-chocolatey@v3

|

||||

python-version: '3.10'

|

||||

- uses: crazy-max/ghaction-chocolatey@v2

|

||||

with:

|

||||

args: install upx

|

||||

- run: |

|

||||

Start-BitsTransfer https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_windows_x86_64.exe ./backend-rust/webgpu_server.exe

|

||||

Start-BitsTransfer https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_windows_x86_64.exe ./backend-rust/web-rwkv-converter.exe

|

||||

Start-BitsTransfer https://github.com/josStorer/LibreHardwareMonitor.Console/releases/latest/download/LibreHardwareMonitor.Console.zip ./LibreHardwareMonitor.Console.zip

|

||||

Expand-Archive ./LibreHardwareMonitor.Console.zip -DestinationPath ./components/LibreHardwareMonitor.Console

|

||||

Start-BitsTransfer https://www.python.org/ftp/python/3.10.11/python-3.10.11-embed-amd64.zip ./python-3.10.11-embed-amd64.zip

|

||||

Expand-Archive ./python-3.10.11-embed-amd64.zip -DestinationPath ./py310

|

||||

$content=Get-Content "./py310/python310._pth"; $content | ForEach-Object {if ($_.ReadCount -eq 3) {"Lib\\site-packages"} else {$_}} | Set-Content ./py310/python310._pth

|

||||

./py310/python ./backend-python/get-pip.py

|

||||

./py310/python -m pip install Cython==3.0.4

|

||||

./py310/python -m pip install Cython

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../include" -Destination "py310/include" -Recurse

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../libs" -Destination "py310/libs" -Recurse

|

||||

./py310/python -m pip install cyac==1.9

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

(Get-Content -Path ./backend-golang/app.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/app.go

|

||||

(Get-Content -Path ./backend-golang/utils.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/utils.go

|

||||

./py310/python -m pip install cyac

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

make

|

||||

Rename-Item -Path "build/bin/RWKV-Runner.exe" -NewName "RWKV-Runner_windows_x64.exe"

|

||||

|

||||

@ -78,26 +70,22 @@ jobs:

|

||||

runs-on: ubuntu-20.04

|

||||

needs: create-draft

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

- uses: actions/setup-go@v4

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_linux_x86_64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_linux_x86_64 -O ./backend-rust/web-rwkv-converter

|

||||

sudo apt-get update

|

||||

sudo apt-get install upx

|

||||

sudo apt-get install build-essential libgtk-3-dev libwebkit2gtk-4.0-dev libasound2-dev

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

sudo apt-get install build-essential libgtk-3-dev libwebkit2gtk-4.0-dev

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm -rf ./backend-python/wkv_cuda_utils

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/webgpu/web_rwkv_py.cp310-win_amd64.pyd

|

||||

sed -i '1,2d' ./backend-golang/wsl_not_windows.go

|

||||

rm ./backend-golang/wsl.go

|

||||

mv ./backend-golang/wsl_not_windows.go ./backend-golang/wsl.go

|

||||

make

|

||||

mv build/bin/RWKV-Runner build/bin/RWKV-Runner_linux_x64

|

||||

|

||||

@ -107,23 +95,19 @@ jobs:

|

||||

runs-on: macos-13

|

||||

needs: create-draft

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v3

|

||||

with:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

- uses: actions/setup-go@v4

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_darwin_aarch64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_darwin_aarch64 -O ./backend-rust/web-rwkv-converter

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm -rf ./backend-python/wkv_cuda_utils

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

rm ./backend-python/rwkv_pip/webgpu/web_rwkv_py.cp310-win_amd64.pyd

|

||||

sed -i '' '1,2d' ./backend-golang/wsl_not_windows.go

|

||||

rm ./backend-golang/wsl.go

|

||||

mv ./backend-golang/wsl_not_windows.go ./backend-golang/wsl.go

|

||||

make

|

||||

cp build/darwin/Readme_Install.txt build/bin/Readme_Install.txt

|

||||

cp build/bin/RWKV-Runner.app/Contents/MacOS/RWKV-Runner build/bin/RWKV-Runner_darwin_universal

|

||||

@ -132,8 +116,8 @@ jobs:

|

||||

- run: gh release upload ${{github.ref_name}} build/bin/RWKV-Runner_macos_universal.zip build/bin/RWKV-Runner_darwin_universal

|

||||

|

||||

publish-release:

|

||||

runs-on: ubuntu-22.04

|

||||

runs-on: ubuntu-latest

|

||||

needs: [ windows, linux, macos ]

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- uses: actions/checkout@v3

|

||||

- run: gh release edit ${{github.ref_name}} --draft=false

|

||||

|

||||

4

.gitignore

vendored

4

.gitignore

vendored

@ -5,10 +5,7 @@ __pycache__

|

||||

.idea

|

||||

.vs

|

||||

*.pth

|

||||

*.st

|

||||

*.safetensors

|

||||

*.bin

|

||||

*.mid

|

||||

/config.json

|

||||

/cache.json

|

||||

/presets.json

|

||||

@ -27,4 +24,3 @@ __pycache__

|

||||

train_log.txt

|

||||

finetune/json2binidx_tool/data

|

||||

/wsl.state

|

||||

/components

|

||||

|

||||

@ -1,31 +1,21 @@

|

||||

## v1.8.4

|

||||

## Changes

|

||||

|

||||

- fix f05a4a, __init__.py is not embedded

|

||||

|

||||

## v1.8.3

|

||||

|

||||

### Deprecations

|

||||

|

||||

- rwkv-beta is deprecated

|

||||

|

||||

### Upgrades

|

||||

|

||||

- bump webgpu(python) (https://github.com/cryscan/web-rwkv-py)

|

||||

- sync https://github.com/JL-er/RWKV-PEFT (LoRA)

|

||||

|

||||

### Improvements

|

||||

|

||||

- improve default LoRA fine-tune params

|

||||

|

||||

### Fixes

|

||||

|

||||

- fix #342, #345: cannot import name 'packaging' from 'pkg_resources'

|

||||

- fix the huge error prompt that pops up when running in webgpu mode

|

||||

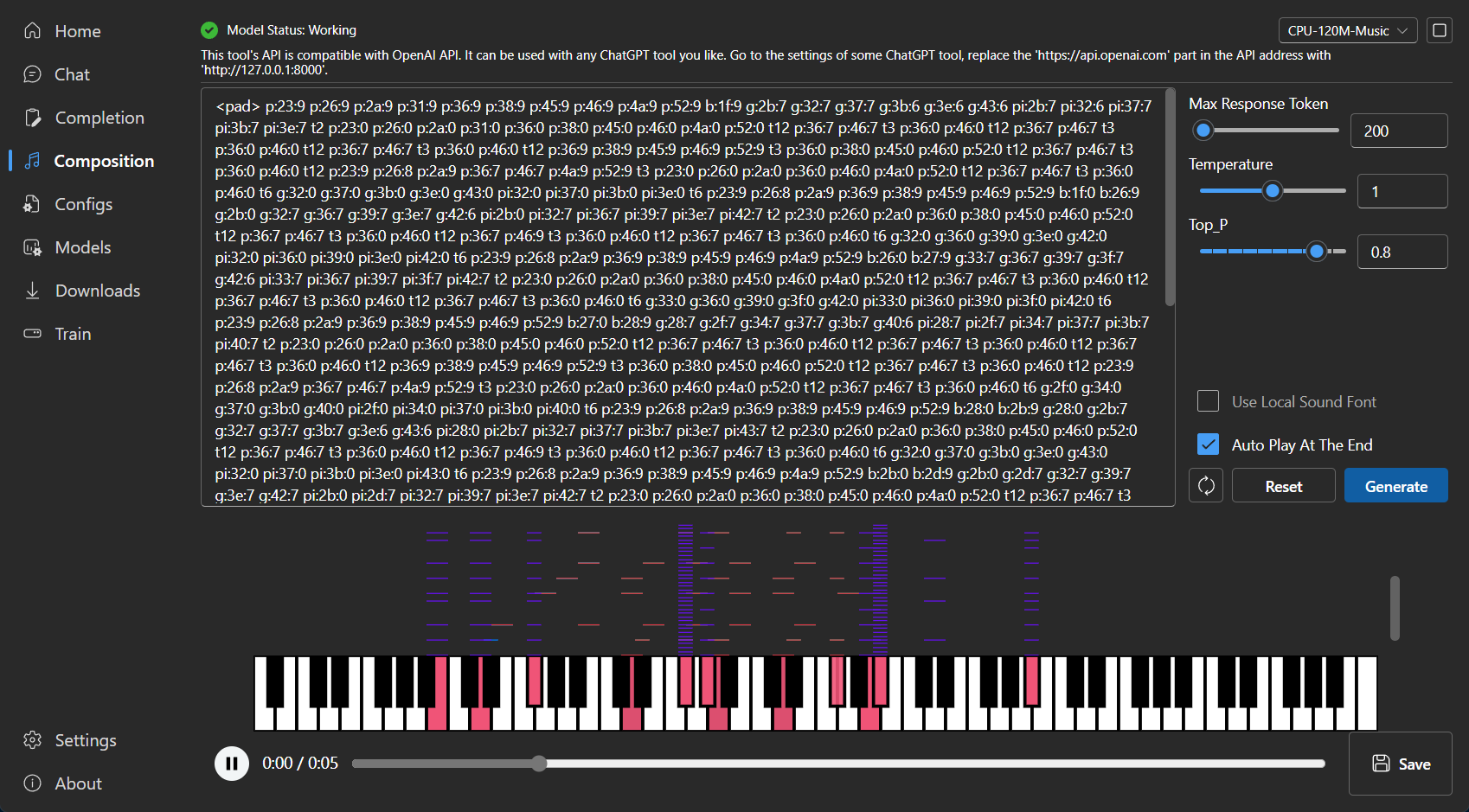

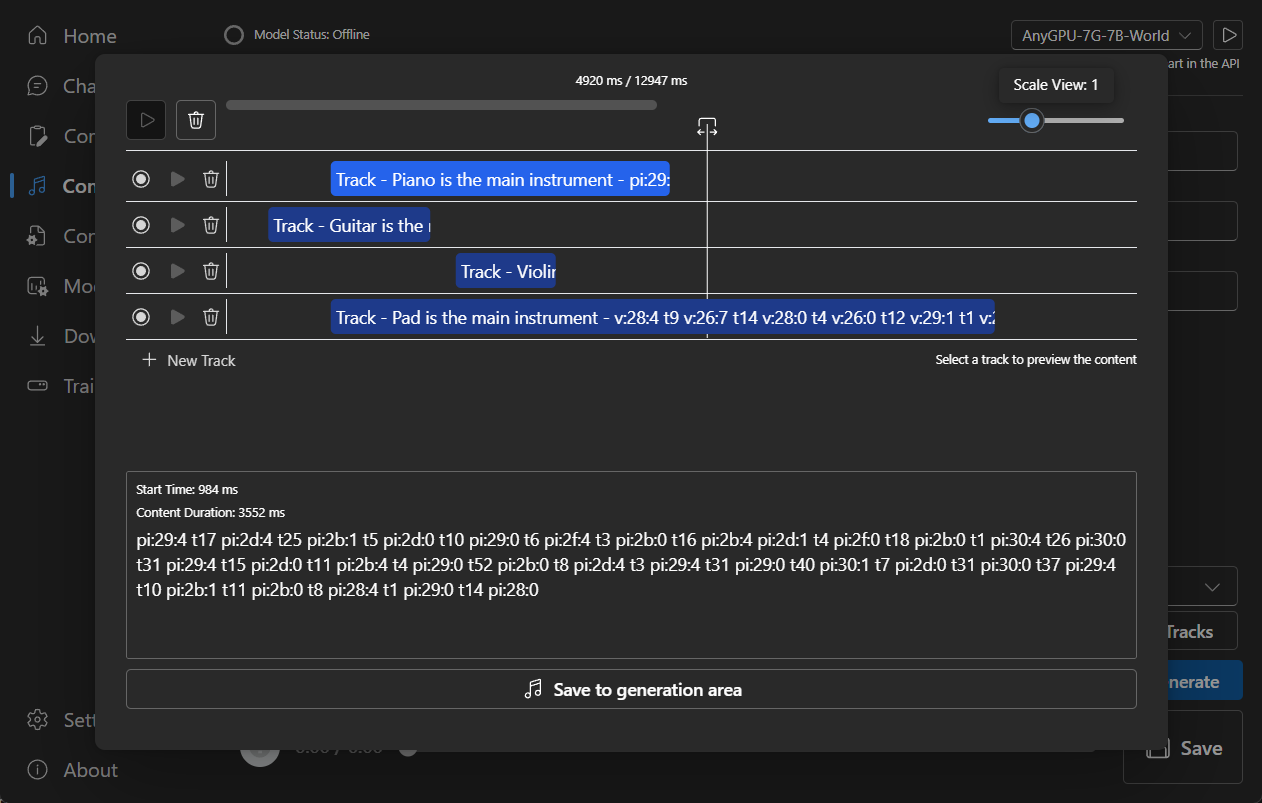

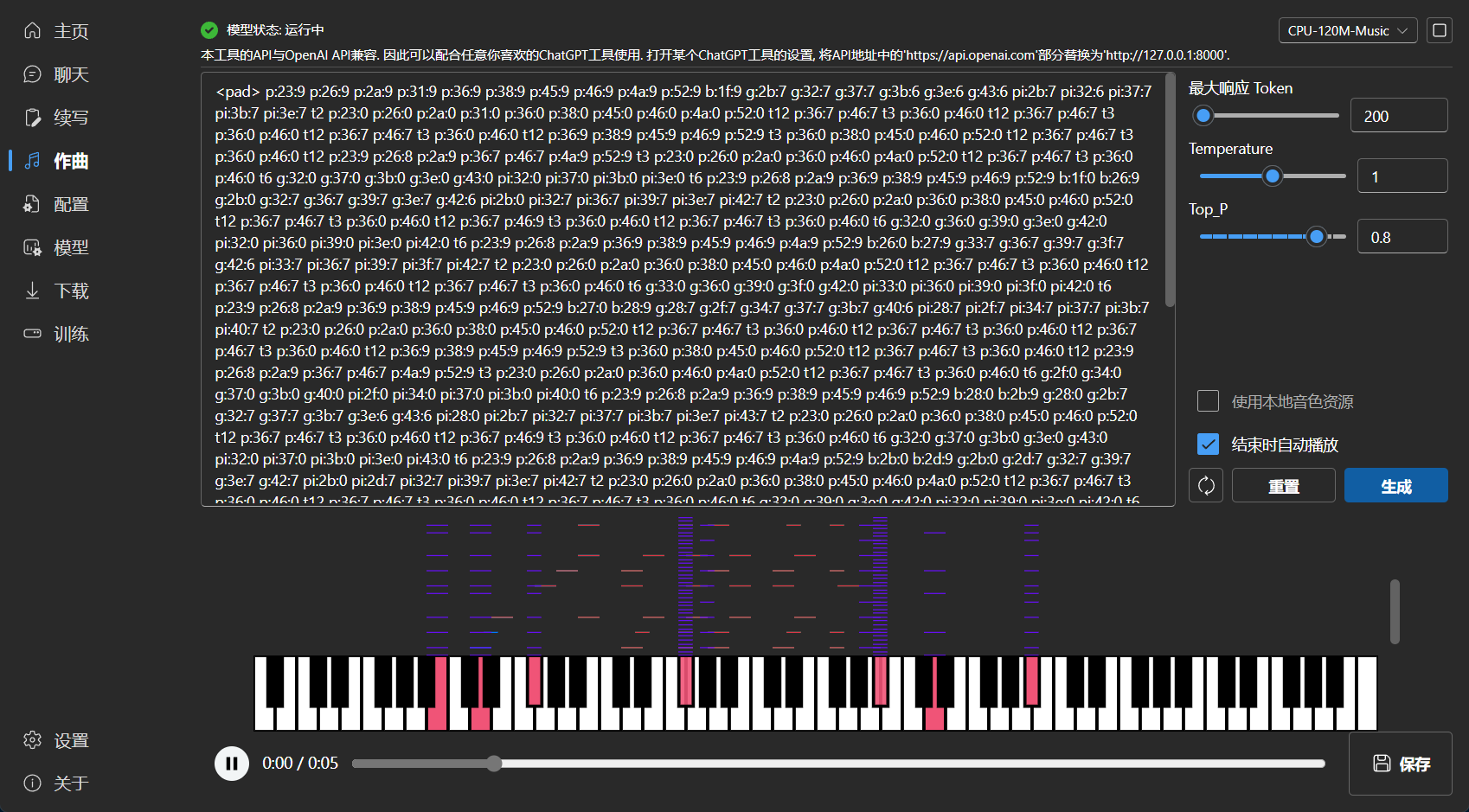

- add Composition Page (RWKV-Music)

|

||||

- improve RunButton prompt

|

||||

- support for `stop` array api params

|

||||

- improve embeddings API results

|

||||

- improve python backend startup speed

|

||||

- add support for MIDI RWKV

|

||||

- add midi api

|

||||

- add CPU-120M-Music config

|

||||

- improve sse fetch

|

||||

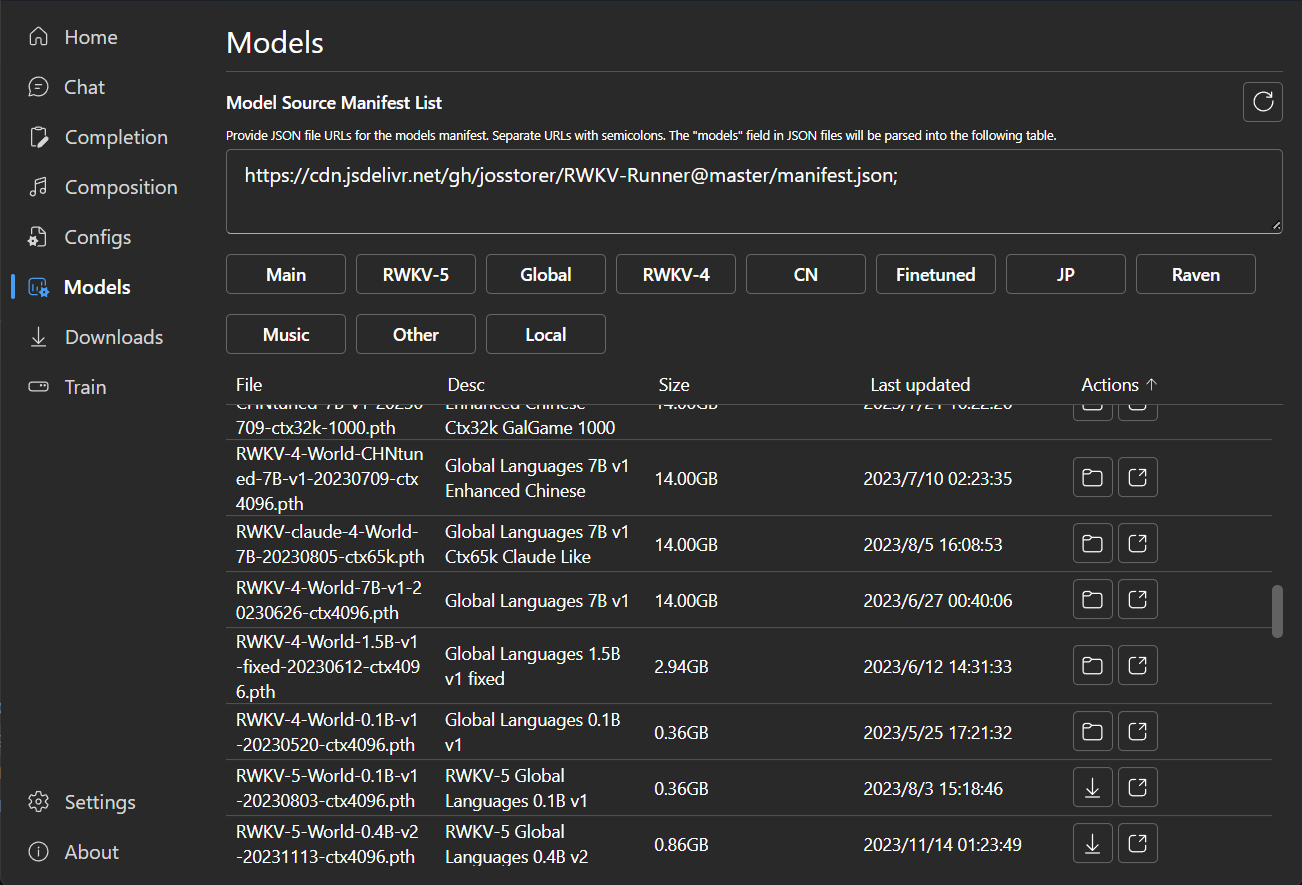

- update manifest (a lot of new models)

|

||||

- update presets

|

||||

- remove LoraFinetunePrecision fp32

|

||||

|

||||

## Install

|

||||

|

||||

- Windows: https://github.com/josStorer/RWKV-Runner/blob/master/build/windows/Readme_Install.txt

|

||||

- MacOS: https://github.com/josStorer/RWKV-Runner/blob/master/build/darwin/Readme_Install.txt

|

||||

- Linux: https://github.com/josStorer/RWKV-Runner/blob/master/build/linux/Readme_Install.txt

|

||||

- Simple Deploy Example: https://github.com/josStorer/RWKV-Runner/blob/master/README.md#simple-deploy-example

|

||||

- Server Deploy Examples: https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples

|

||||

- Server-Deploy-Examples: https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples

|

||||

55

Dockerfile

55

Dockerfile

@ -1,55 +0,0 @@

|

||||

FROM node:21-slim AS frontend

|

||||

|

||||

RUN echo "registry=https://registry.npmmirror.com/" > ~/.npmrc

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

COPY manifest.json manifest.json

|

||||

COPY frontend frontend

|

||||

|

||||

WORKDIR /app/frontend

|

||||

|

||||

RUN npm ci

|

||||

RUN npm run build

|

||||

|

||||

FROM nvidia/cuda:11.6.1-devel-ubuntu20.04 AS runtime

|

||||

|

||||

ENV DEBIAN_FRONTEND=noninteractive

|

||||

|

||||

RUN apt update && \

|

||||

apt install -yq git curl wget build-essential ninja-build aria2 jq software-properties-common

|

||||

|

||||

RUN add-apt-repository -y ppa:deadsnakes/ppa && \

|

||||

add-apt-repository -y ppa:ubuntu-toolchain-r/test && \

|

||||

apt install -y g++-11 python3.10 python3.10-distutils python3.10-dev && \

|

||||

curl -sS http://mirrors.aliyun.com/pypi/get-pip.py | python3.10

|

||||

|

||||

RUN python3.10 -m pip install cmake

|

||||

|

||||

FROM runtime AS librwkv

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

RUN git clone https://github.com/RWKV/rwkv.cpp.git && \

|

||||

cd rwkv.cpp && \

|

||||

git submodule update --init --recursive && \

|

||||

mkdir -p build && \

|

||||

cd build && \

|

||||

cmake -G Ninja .. && \

|

||||

cmake --build .

|

||||

|

||||

FROM runtime AS final

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

COPY ./backend-python/requirements.txt ./backend-python/requirements.txt

|

||||

|

||||

RUN python3.10 -m pip install --quiet -r ./backend-python/requirements.txt

|

||||

|

||||

COPY . .

|

||||

COPY --from=frontend /app/frontend/dist /app/frontend/dist

|

||||

COPY --from=librwkv /app/rwkv.cpp/build/librwkv.so /app/backend-python/rwkv_pip/cpp/librwkv.so

|

||||

|

||||

EXPOSE 27777

|

||||

|

||||

CMD ["python3.10", "./backend-python/main.py", "--port", "27777", "--host", "0.0.0.0", "--webui"]

|

||||

18

Makefile

18

Makefile

@ -8,28 +8,16 @@ endif

|

||||

|

||||

build-windows:

|

||||

@echo ---- build for windows

|

||||

wails build -ldflags '-s -w -extldflags "-static"' -platform windows/amd64

|

||||

upx -9 --lzma ./build/bin/RWKV-Runner.exe

|

||||

wails build -upx -ldflags "-s -w" -platform windows/amd64

|

||||

|

||||

build-macos:

|

||||

@echo ---- build for macos

|

||||

wails build -ldflags '-s -w' -platform darwin/universal

|

||||

wails build -ldflags "-s -w" -platform darwin/universal

|

||||

|

||||

build-linux:

|

||||

@echo ---- build for linux

|

||||

wails build -ldflags '-s -w' -platform linux/amd64

|

||||

upx -9 --lzma ./build/bin/RWKV-Runner

|

||||

|

||||

build-web:

|

||||

@echo ---- build for web

|

||||

cd frontend && npm run build

|

||||

wails build -upx -ldflags "-s -w" -platform linux/amd64

|

||||

|

||||

dev:

|

||||

wails dev

|

||||

|

||||

dev-web:

|

||||

cd frontend && npm run dev

|

||||

|

||||

preview:

|

||||

cd frontend && npm run preview

|

||||

|

||||

|

||||

158

README.md

158

README.md

@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -12,7 +12,6 @@ compatible with the OpenAI API, which means that every ChatGPT client is an RWKV

|

||||

|

||||

[![license][license-image]][license-url]

|

||||

[![release][release-image]][release-url]

|

||||

[![py-version][py-version-image]][py-version-url]

|

||||

|

||||

English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

|

||||

@ -22,7 +21,7 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

[![MacOS][MacOS-image]][MacOS-url]

|

||||

[![Linux][Linux-image]][Linux-url]

|

||||

|

||||

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples) | [MIDI Hardware Input](#MIDI-Input)

|

||||

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [Preview](#Preview) | [Download][download-url] | [Server-Deploy-Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

|

||||

[license-image]: http://img.shields.io/badge/license-MIT-blue.svg

|

||||

|

||||

@ -32,10 +31,6 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

|

||||

[release-url]: https://github.com/josStorer/RWKV-Runner/releases/latest

|

||||

|

||||

[py-version-image]: https://img.shields.io/pypi/pyversions/fastapi.svg

|

||||

|

||||

[py-version-url]: https://github.com/josStorer/RWKV-Runner/tree/master/backend-python

|

||||

|

||||

[download-url]: https://github.com/josStorer/RWKV-Runner/releases

|

||||

|

||||

[Windows-image]: https://img.shields.io/badge/-Windows-blue?logo=windows

|

||||

@ -52,75 +47,28 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

|

||||

</div>

|

||||

|

||||

## Tips

|

||||

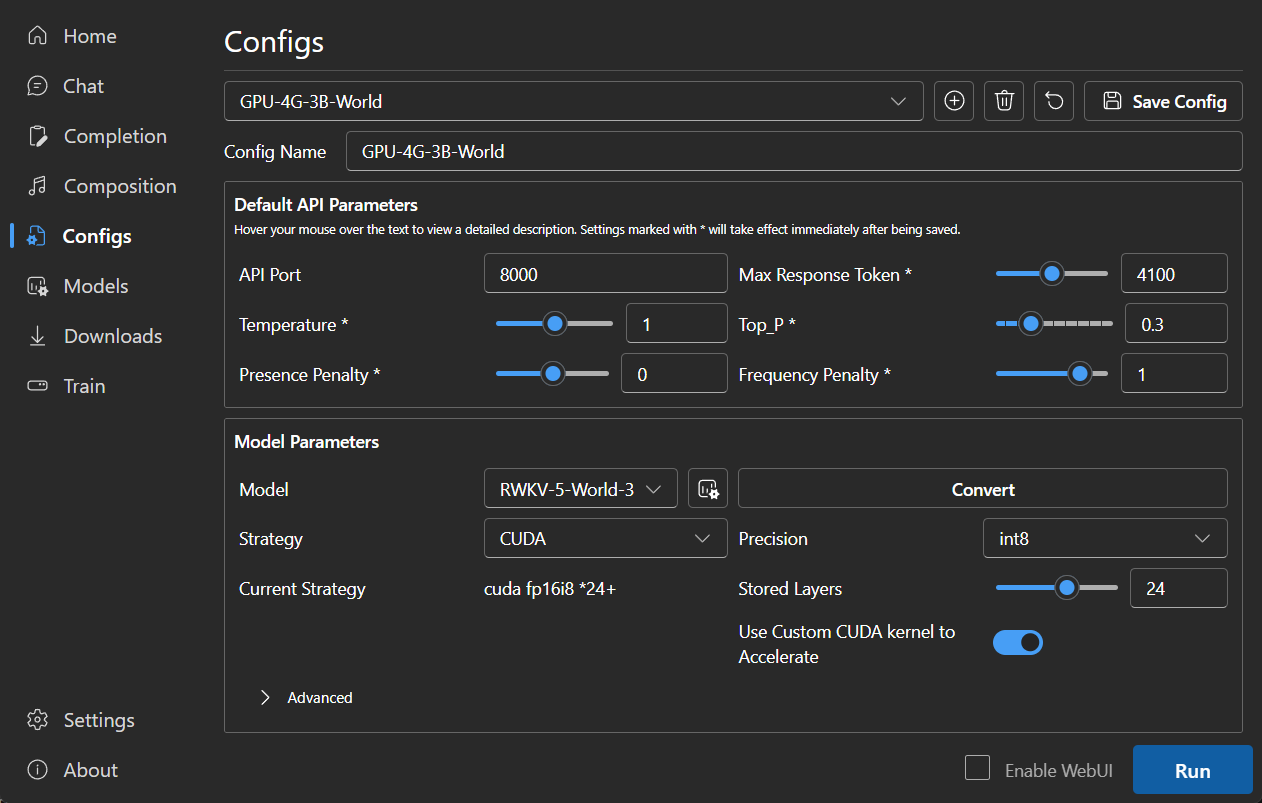

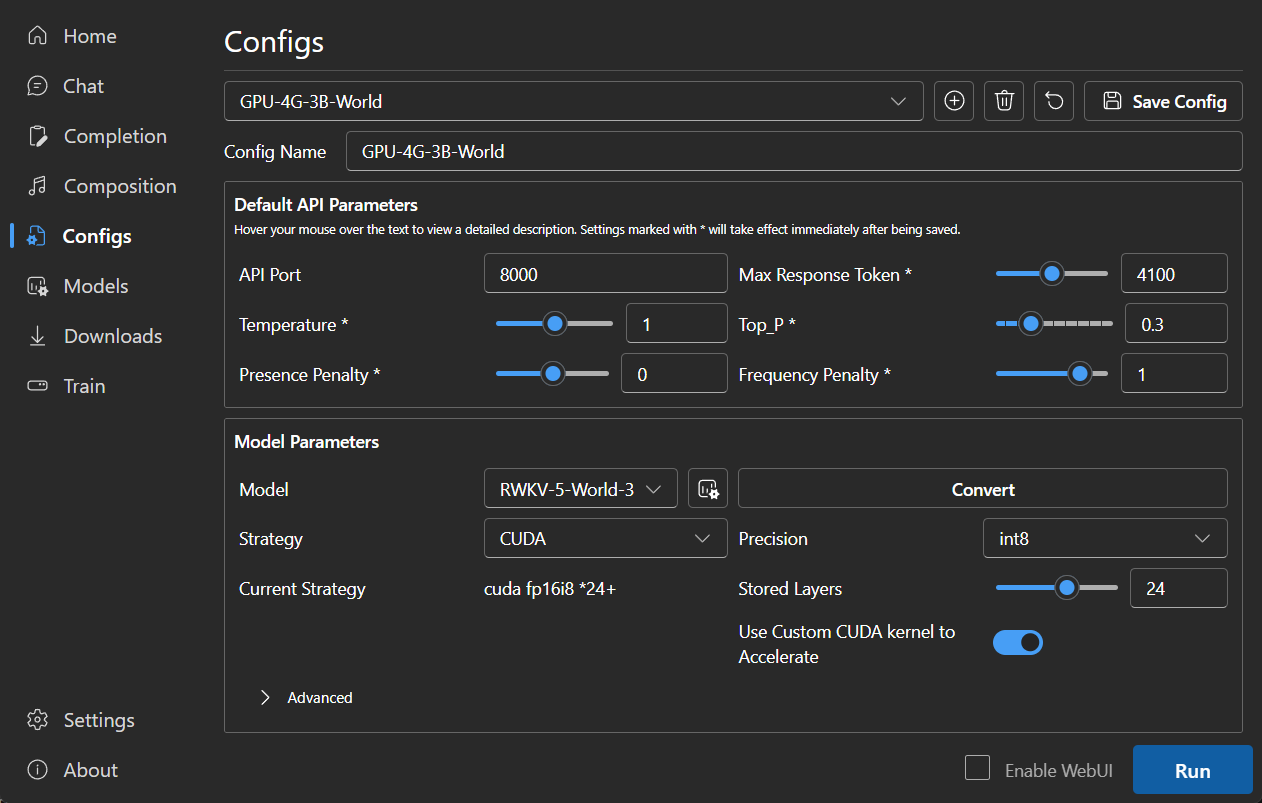

#### Default configs has enabled custom CUDA kernel acceleration, which is much faster and consumes much less VRAM. If you encounter possible compatibility issues, go to the Configs page and turn off `Use Custom CUDA kernel to Accelerate`.

|

||||

|

||||

- You can deploy [backend-python](./backend-python/) on a server and use this program as a client only. Fill in

|

||||

your server address in the Settings `API URL`.

|

||||

#### If Windows Defender claims this is a virus, you can try downloading [v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip) and letting it update automatically to the latest version, or add it to the trusted list (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`).

|

||||

|

||||

- If you are deploying and providing public services, please limit the request size through API gateway to prevent

|

||||

excessive resource usage caused by submitting overly long prompts. Additionally, please restrict the upper limit of

|

||||

requests' max_tokens based on your actual

|

||||

situation: https://github.com/josStorer/RWKV-Runner/blob/master/backend-python/utils/rwkv.py#L567, the default is set

|

||||

as le=102400, which may result in significant resource consumption for individual responses in extreme cases.

|

||||

|

||||

- Default configs has enabled custom CUDA kernel acceleration, which is much faster and consumes much less VRAM. If you

|

||||

encounter possible compatibility issues (output garbled), go to the Configs page and turn

|

||||

off `Use Custom CUDA kernel to Accelerate`, or try to upgrade your gpu driver.

|

||||

|

||||

- If Windows Defender claims this is a virus, you can try

|

||||

downloading [v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip)

|

||||

and letting it update automatically to the latest version, or add it to the trusted

|

||||

list (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`).

|

||||

|

||||

- For different tasks, adjusting API parameters can achieve better results. For example, for translation tasks, you can

|

||||

try setting Temperature to 1 and Top_P to 0.3.

|

||||

#### For different tasks, adjusting API parameters can achieve better results. For example, for translation tasks, you can try setting Temperature to 1 and Top_P to 0.3.

|

||||

|

||||

## Features

|

||||

|

||||

- RWKV model management and one-click startup.

|

||||

- Front-end and back-end separation, if you don't want to use the client, also allows for separately deploying the

|

||||

front-end service, or the back-end inference service, or the back-end inference service with a WebUI.

|

||||

[Simple Deploy Example](#Simple-Deploy-Example) | [Server Deploy Examples](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

- Compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,

|

||||

- RWKV model management and one-click startup

|

||||

- Fully compatible with the OpenAI API, making every ChatGPT client an RWKV client. After starting the model,

|

||||

open http://127.0.0.1:8000/docs to view more details.

|

||||

- Automatic dependency installation, requiring only a lightweight executable program.

|

||||

- Pre-set multi-level VRAM configs, works well on almost all computers. In Configs page, switch Strategy to WebGPU, it

|

||||

can also run on AMD, Intel, and other graphics cards.

|

||||

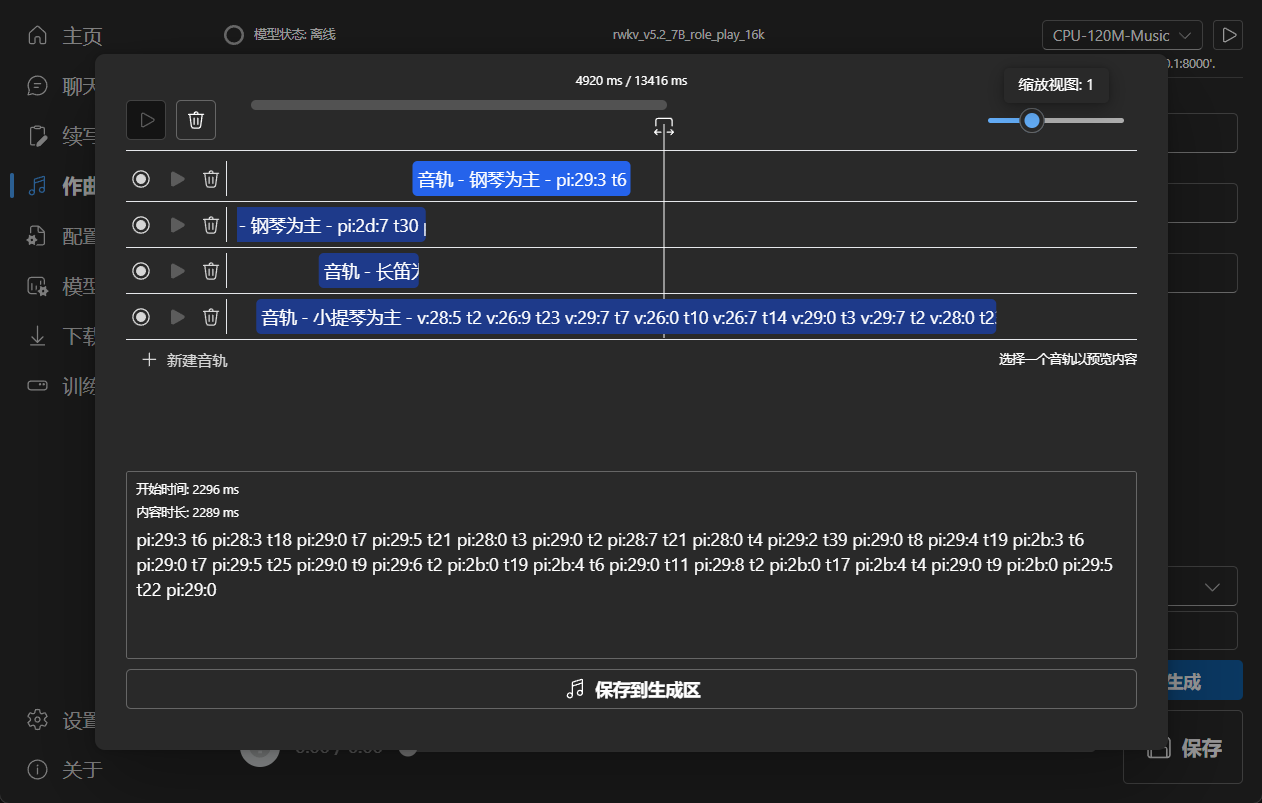

- User-friendly chat, completion, and composition interaction interface included. Also supports chat presets, attachment

|

||||

uploads, MIDI hardware input, and track editing.

|

||||

[Preview](#Preview) | [MIDI Hardware Input](#MIDI-Input)

|

||||

- Built-in WebUI option, one-click start of Web service, sharing your hardware resources.

|

||||

- Easy-to-understand and operate parameter configuration, along with various operation guidance prompts.

|

||||

- Built-in model conversion tool.

|

||||

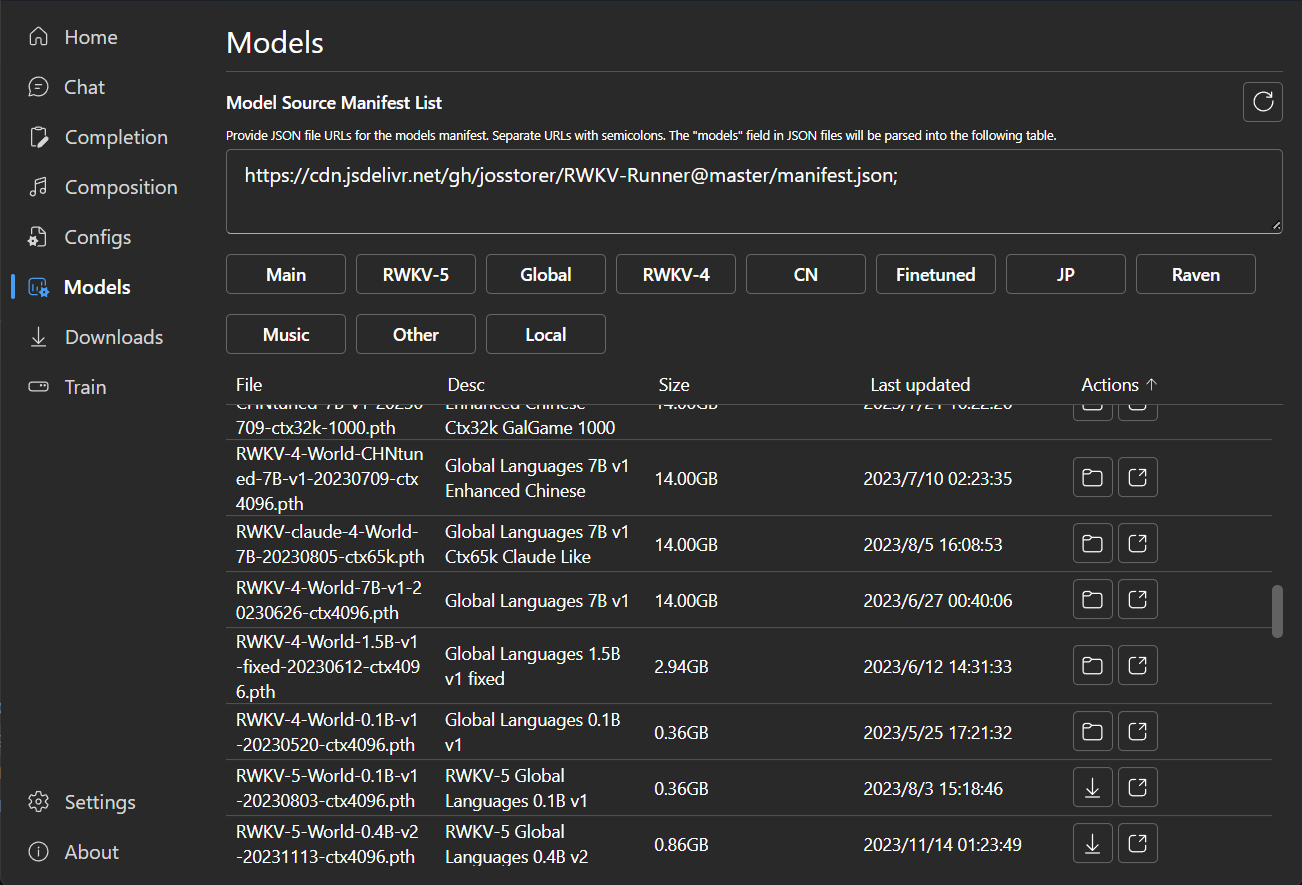

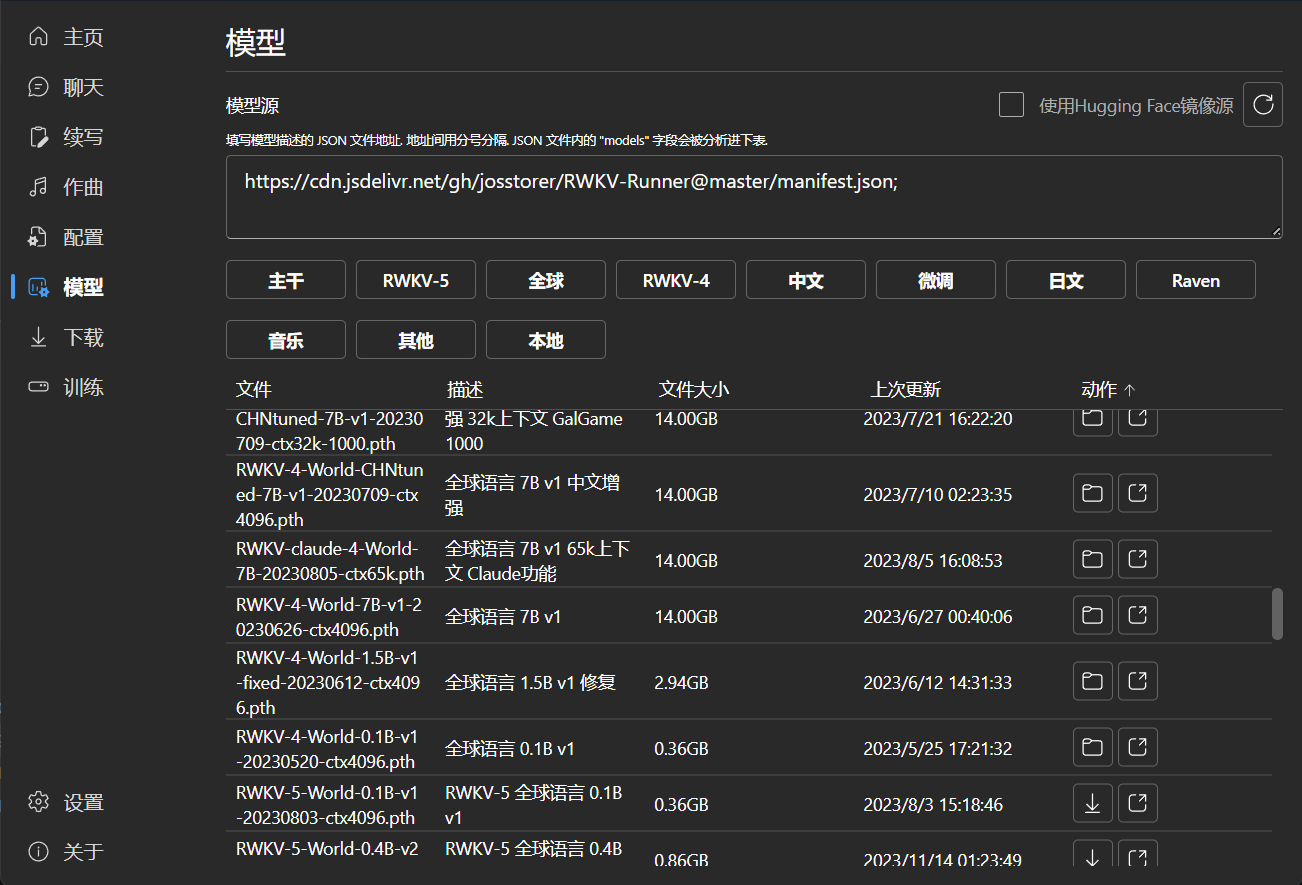

- Built-in download management and remote model inspection.

|

||||

- Built-in one-click LoRA Finetune. (Windows Only)

|

||||

- Can also be used as an OpenAI ChatGPT, GPT-Playground, Ollama and more clients. (Fill in the API URL and API Key in

|

||||

Settings page)

|

||||

- Multilingual localization.

|

||||

- Theme switching.

|

||||

- Automatic updates.

|

||||

|

||||

## Simple Deploy Example

|

||||

|

||||

```bash

|

||||

git clone https://github.com/josStorer/RWKV-Runner

|

||||

|

||||

# Then

|

||||

cd RWKV-Runner

|

||||

python ./backend-python/main.py #The backend inference service has been started, request /switch-model API to load the model, refer to the API documentation: http://127.0.0.1:8000/docs

|

||||

|

||||

# Or

|

||||

cd RWKV-Runner/frontend

|

||||

npm ci

|

||||

npm run build #Compile the frontend

|

||||

cd ..

|

||||

python ./backend-python/webui_server.py #Start the frontend service separately

|

||||

# Or

|

||||

python ./backend-python/main.py --webui #Start the frontend and backend service at the same time

|

||||

|

||||

# Help Info

|

||||

python ./backend-python/main.py -h

|

||||

```

|

||||

- Automatic dependency installation, requiring only a lightweight executable program

|

||||

- Configs with 2G to 32G VRAM are included, works well on almost all computers

|

||||

- User-friendly chat and completion interaction interface included

|

||||

- Easy-to-understand and operate parameter configuration

|

||||

- Built-in model conversion tool

|

||||

- Built-in download management and remote model inspection

|

||||

- Built-in one-click LoRA Finetune

|

||||

- Can also be used as an OpenAI ChatGPT and GPT-Playground client

|

||||

- Multilingual localization

|

||||

- Theme switching

|

||||

- Automatic updates

|

||||

|

||||

## API Concurrency Stress Testing

|

||||

|

||||

@ -183,100 +131,36 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")

|

||||

```

|

||||

|

||||

## MIDI Input

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

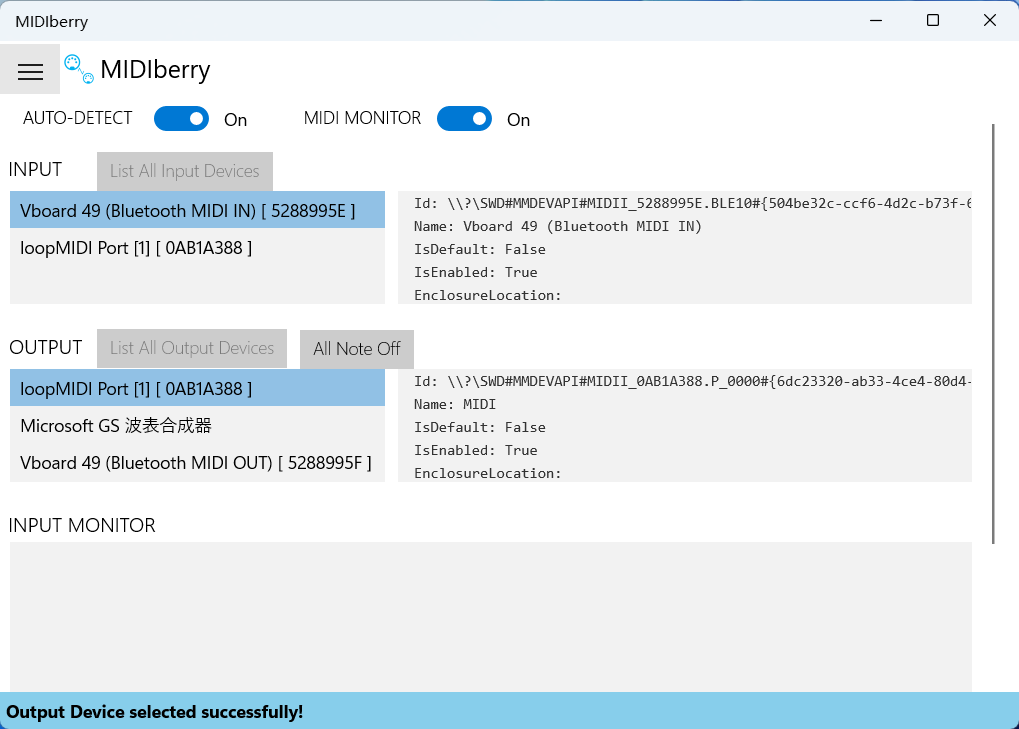

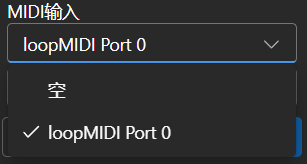

If you don't have a MIDI keyboard, you can use virtual MIDI input software like `Virtual Midi Controller 3 LE`, along

|

||||

with [loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip), to use a regular

|

||||

computer keyboard as MIDI input.

|

||||

|

||||

### USB MIDI Connection

|

||||

|

||||

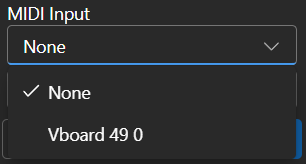

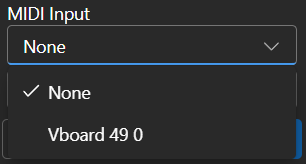

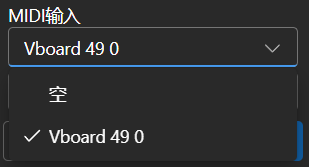

- USB MIDI devices are plug-and-play, and you can select your input device in the Composition page

|

||||

-

|

||||

|

||||

### Mac MIDI Bluetooth Connection

|

||||

|

||||

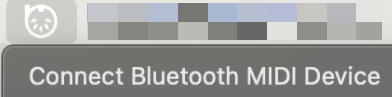

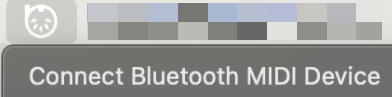

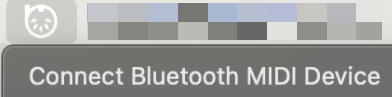

- For Mac users who want to use Bluetooth input,

|

||||

please install [Bluetooth MIDI Connect](https://apps.apple.com/us/app/bluetooth-midi-connect/id1108321791), then click

|

||||

the tray icon to connect after launching,

|

||||

afterwards, you can select your input device in the Composition page.

|

||||

-

|

||||

|

||||

### Windows MIDI Bluetooth Connection

|

||||

|

||||

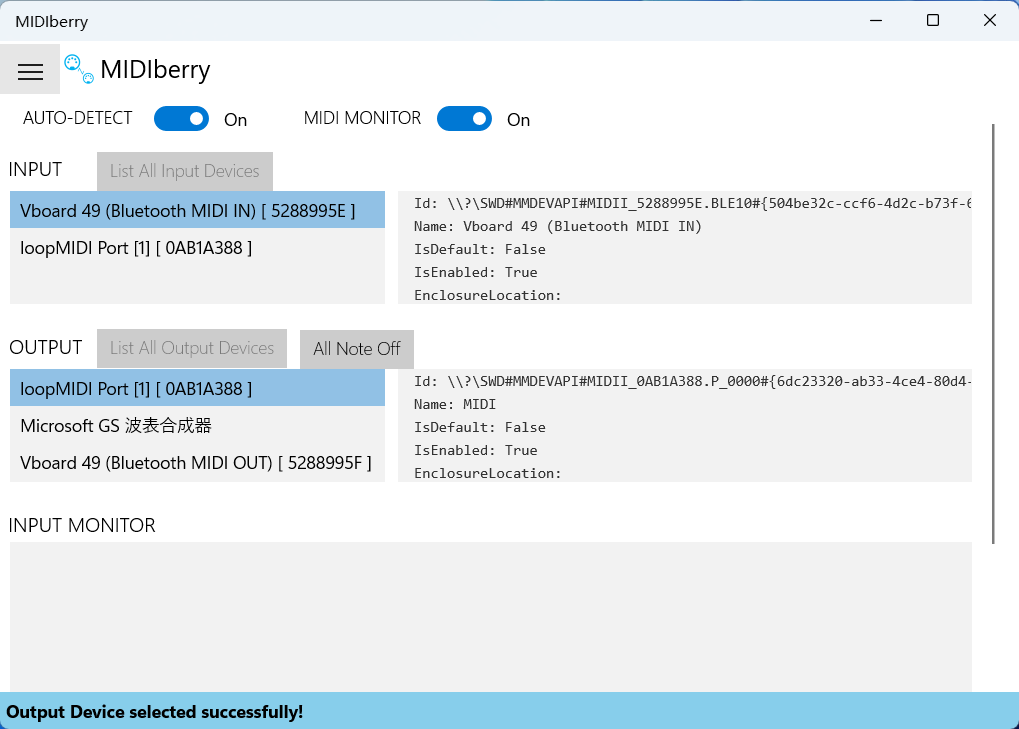

- Windows seems to have implemented Bluetooth MIDI support only for UWP (Universal Windows Platform) apps. Therefore, it

|

||||

requires multiple steps to establish a connection. We need to create a local virtual MIDI device and then launch a UWP

|

||||

application. Through this UWP application, we will redirect Bluetooth MIDI input to the virtual MIDI device, and then

|

||||

this software will listen to the input from the virtual MIDI device.

|

||||

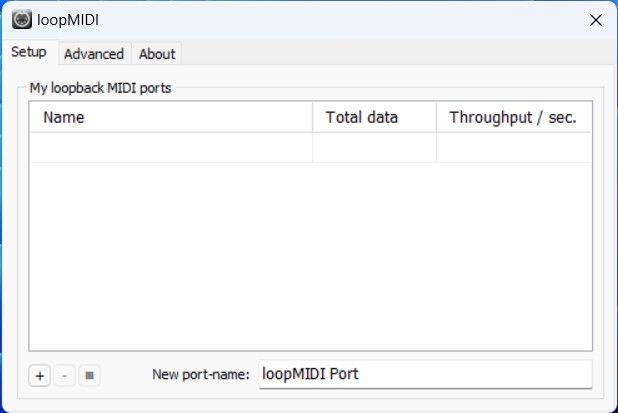

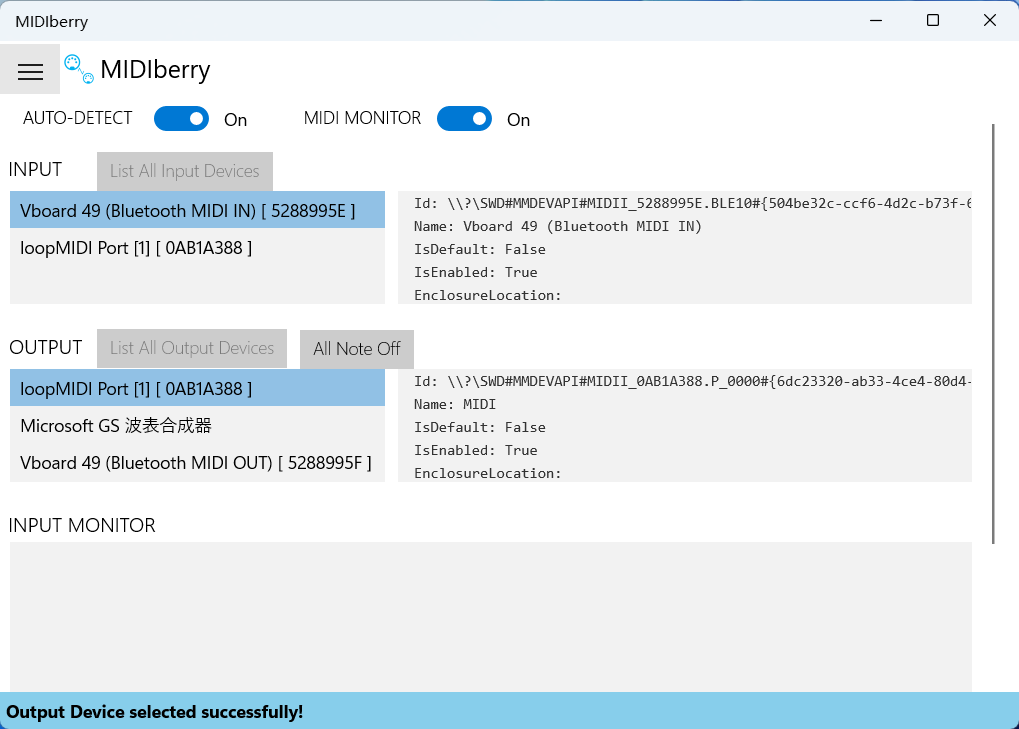

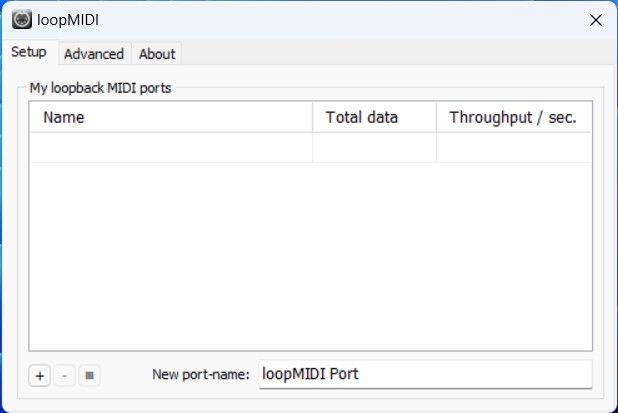

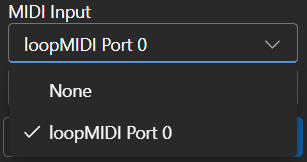

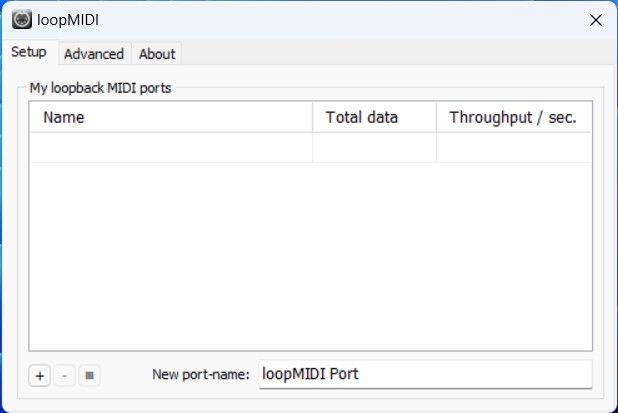

- So, first, you need to

|

||||

download [loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip)

|

||||

to create a virtual MIDI device. Click the plus sign in the bottom left corner to create the device.

|

||||

-

|

||||

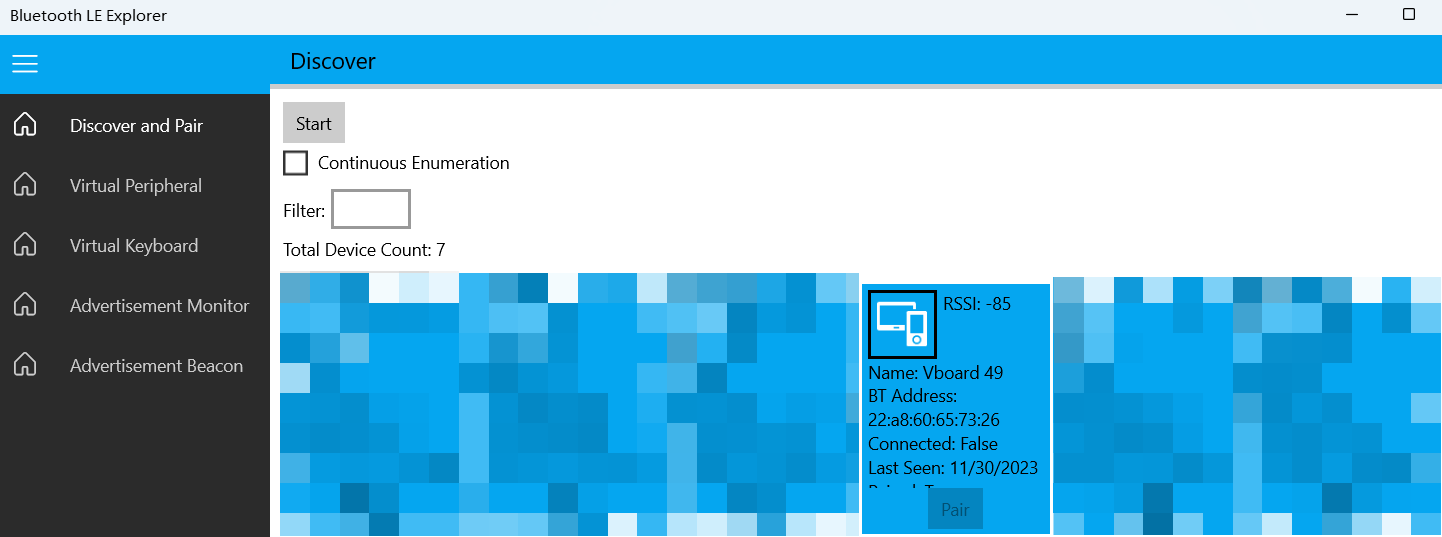

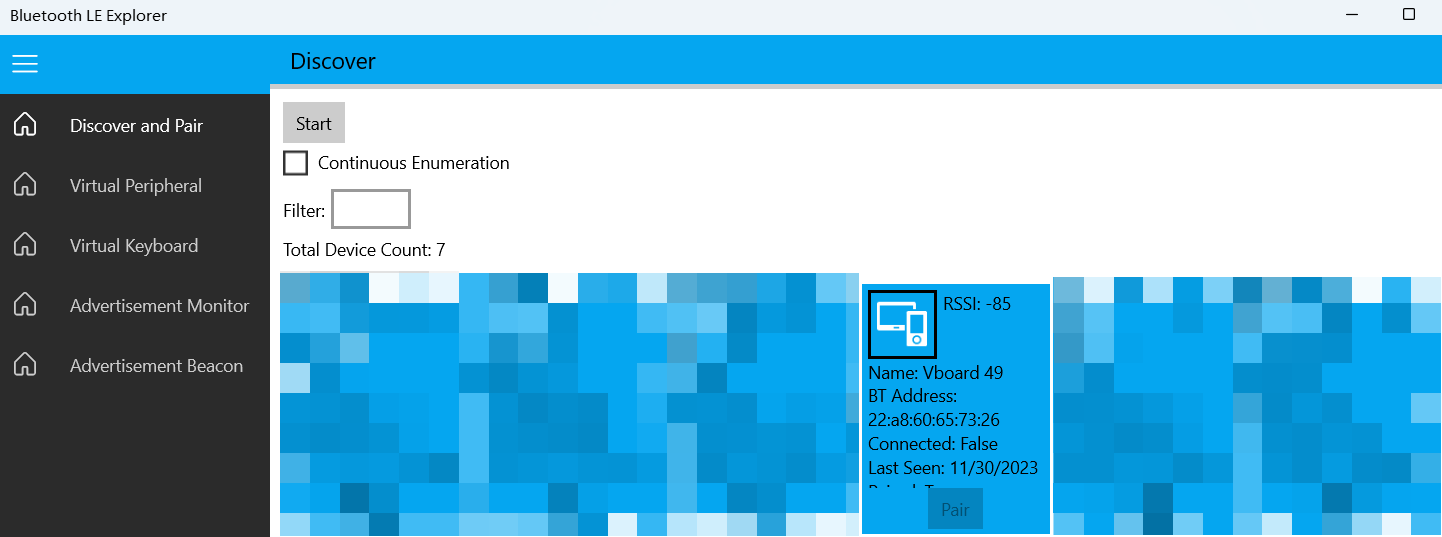

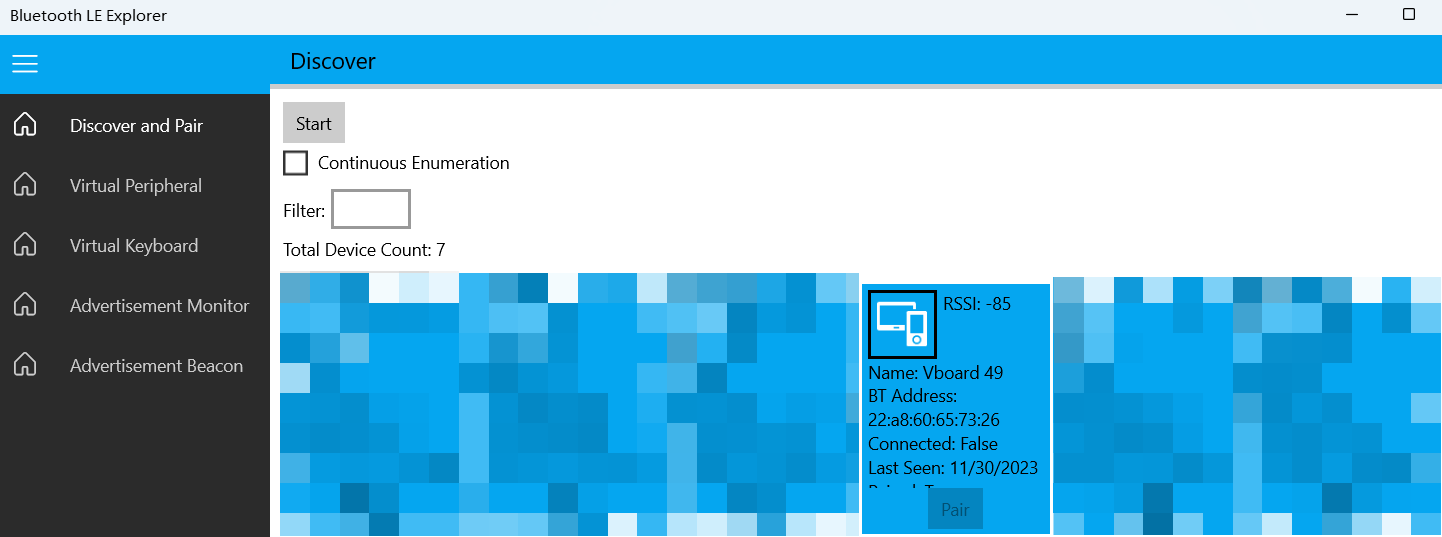

- Next, you need to download [Bluetooth LE Explorer](https://apps.microsoft.com/detail/9N0ZTKF1QD98) to discover and

|

||||

connect to Bluetooth MIDI devices. Click "Start" to search for devices, and then click "Pair" to bind the MIDI device.

|

||||

-

|

||||

- Finally, you need to install [MIDIberry](https://apps.microsoft.com/detail/9N39720H2M05),

|

||||

This UWP application can redirect Bluetooth MIDI input to the virtual MIDI device. After launching it, double-click

|

||||

your actual Bluetooth MIDI device name in the input field, and in the output field, double-click the virtual MIDI

|

||||

device name we created earlier.

|

||||

-

|

||||

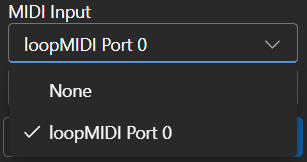

- Now, you can select the virtual MIDI device as the input in the Composition page. Bluetooth LE Explorer no longer

|

||||

needs to run, and you can also close the loopMIDI window, it will run automatically in the background. Just keep

|

||||

MIDIberry open.

|

||||

-

|

||||

|

||||

## Related Repositories:

|

||||

|

||||

- RWKV-5-World: https://huggingface.co/BlinkDL/rwkv-5-world/tree/main

|

||||

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main

|

||||

- RWKV-4-Raven: https://huggingface.co/BlinkDL/rwkv-4-raven/tree/main

|

||||

- ChatRWKV: https://github.com/BlinkDL/ChatRWKV

|

||||

- RWKV-LM: https://github.com/BlinkDL/RWKV-LM

|

||||

- RWKV-LM-LoRA: https://github.com/Blealtan/RWKV-LM-LoRA

|

||||

- RWKV-v5-lora: https://github.com/JL-er/RWKV-v5-lora

|

||||

- MIDI-LLM-tokenizer: https://github.com/briansemrau/MIDI-LLM-tokenizer

|

||||

- ai00_rwkv_server: https://github.com/cgisky1980/ai00_rwkv_server

|

||||

- rwkv.cpp: https://github.com/saharNooby/rwkv.cpp

|

||||

- web-rwkv-py: https://github.com/cryscan/web-rwkv-py

|

||||

- web-rwkv: https://github.com/cryscan/web-rwkv

|

||||

|

||||

## Preview

|

||||

|

||||

### Homepage

|

||||

|

||||

|

||||

|

||||

|

||||

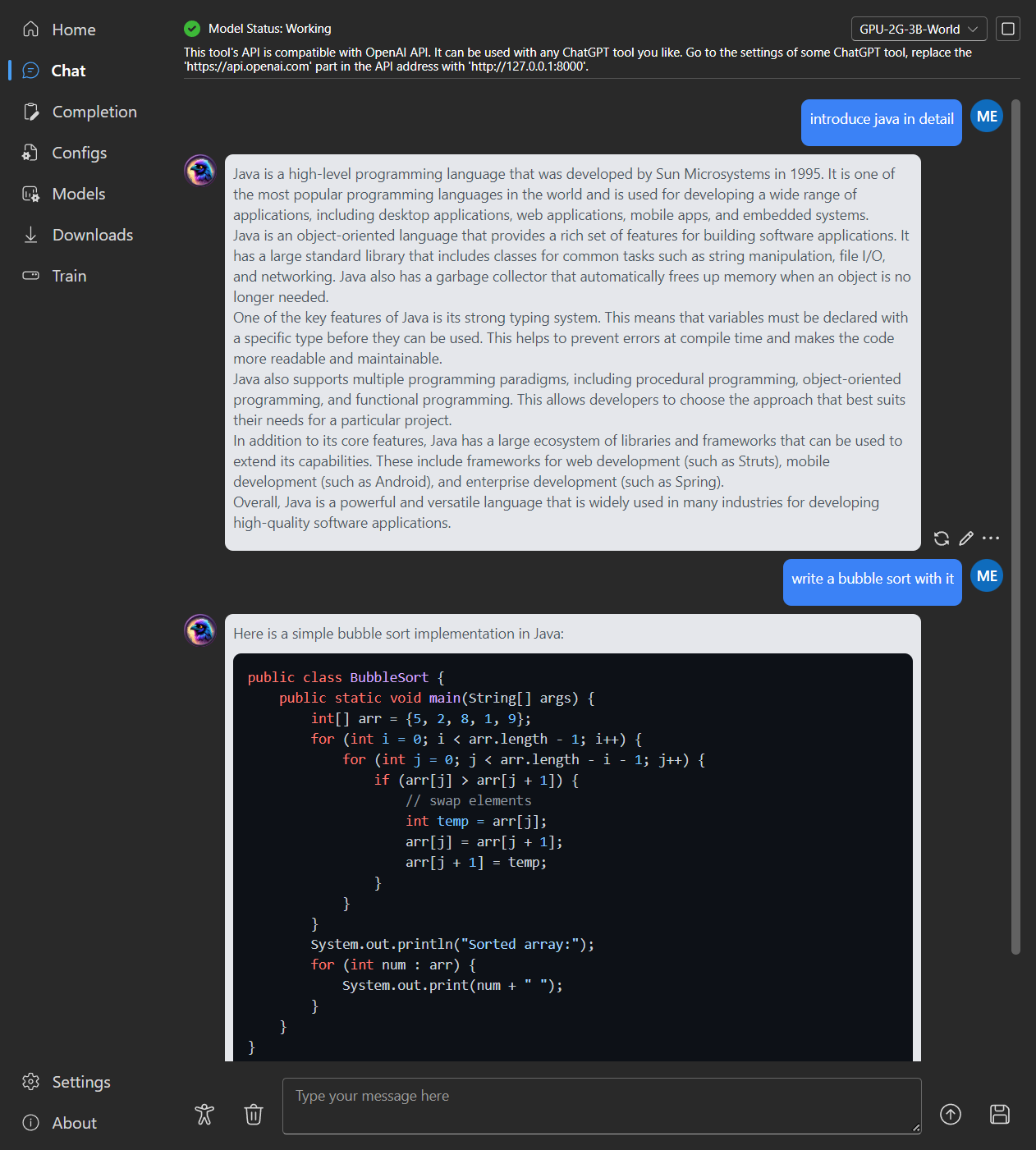

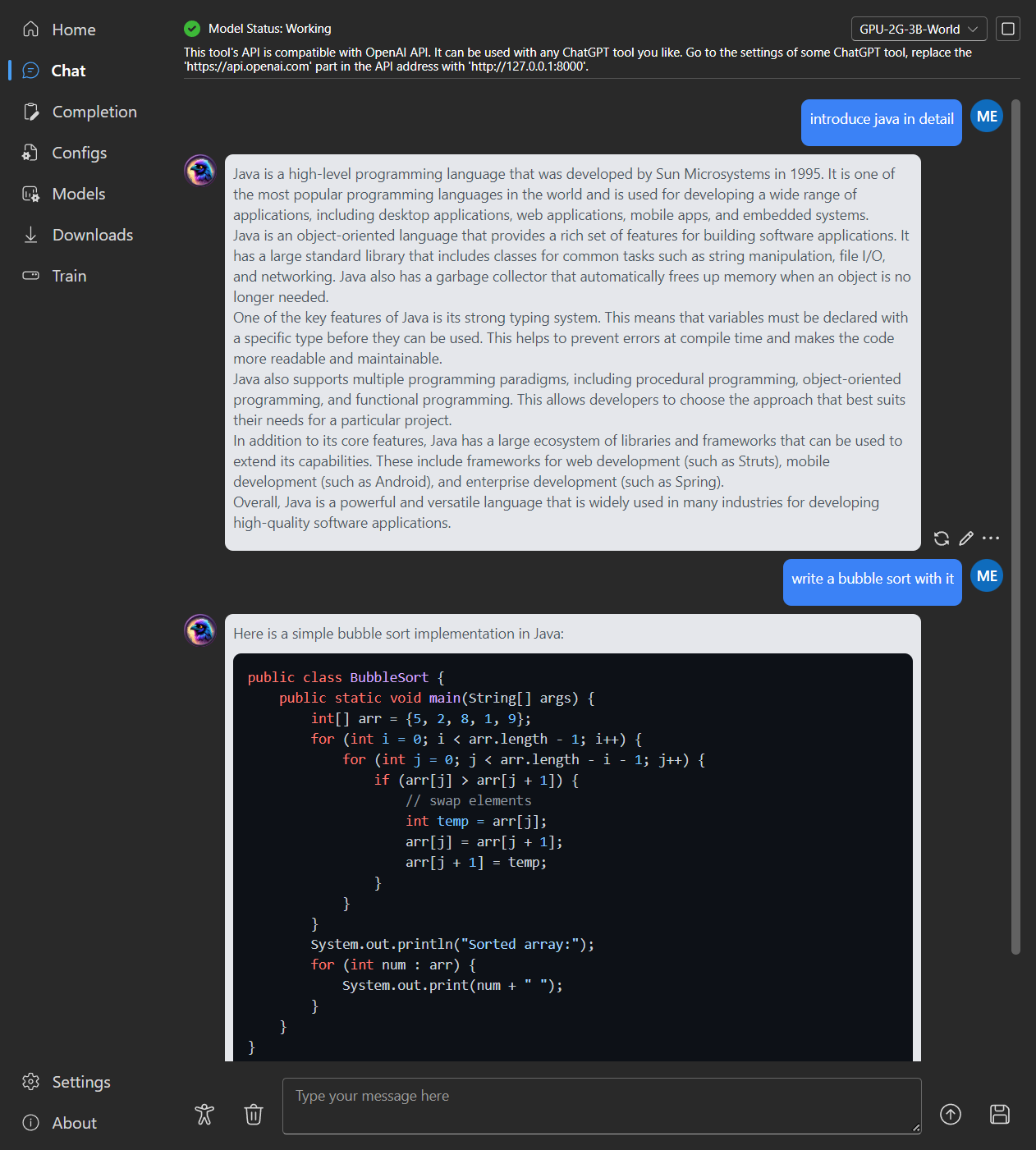

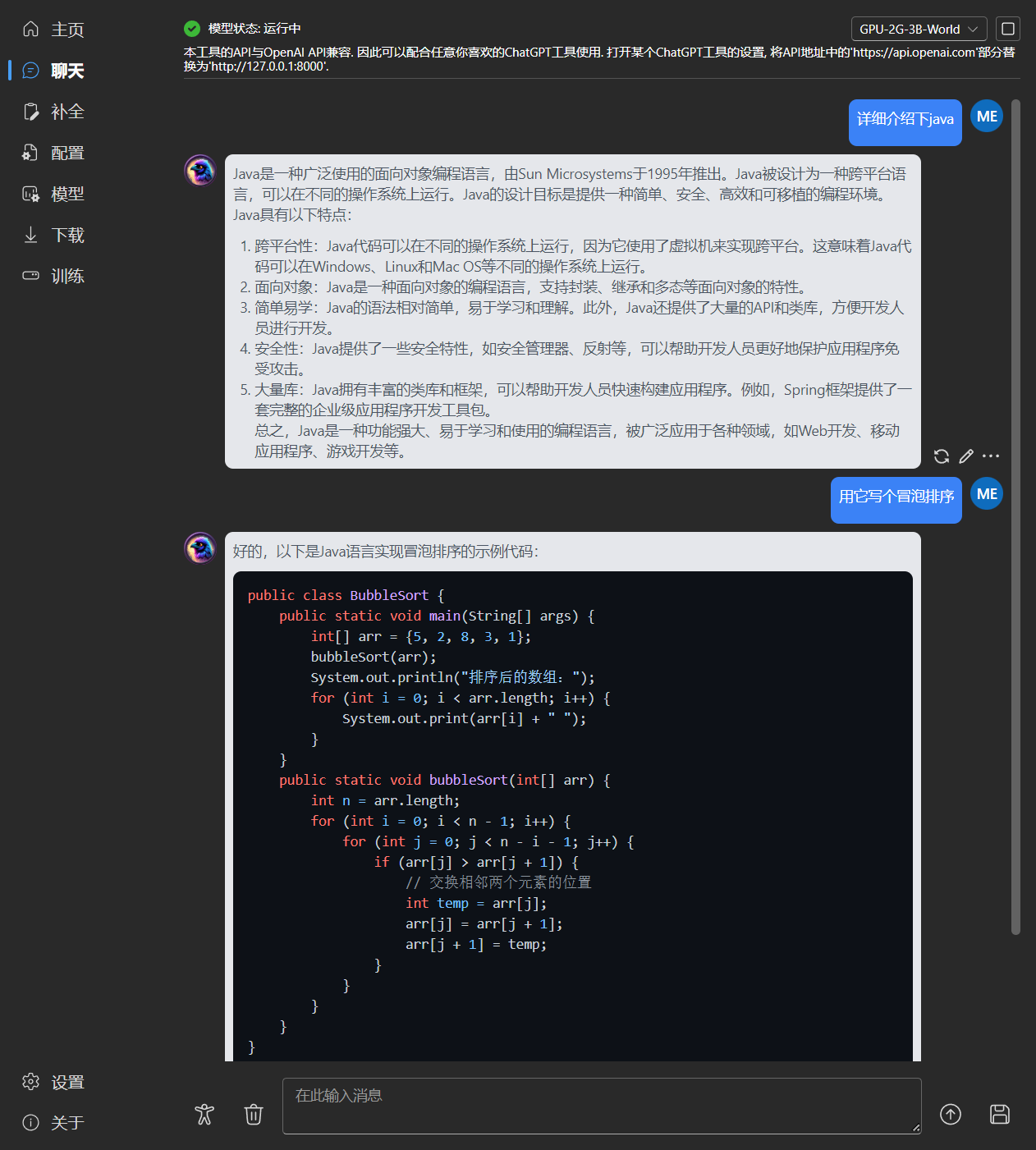

### Chat

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

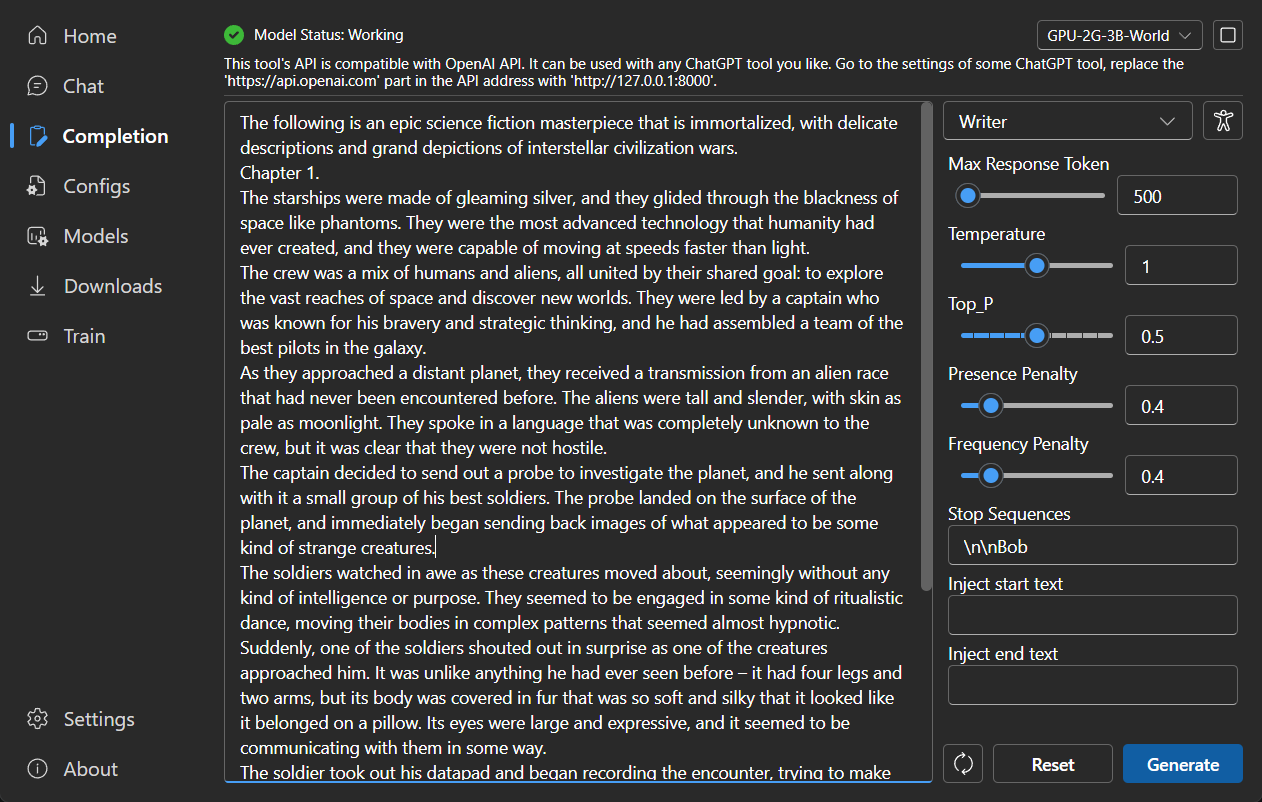

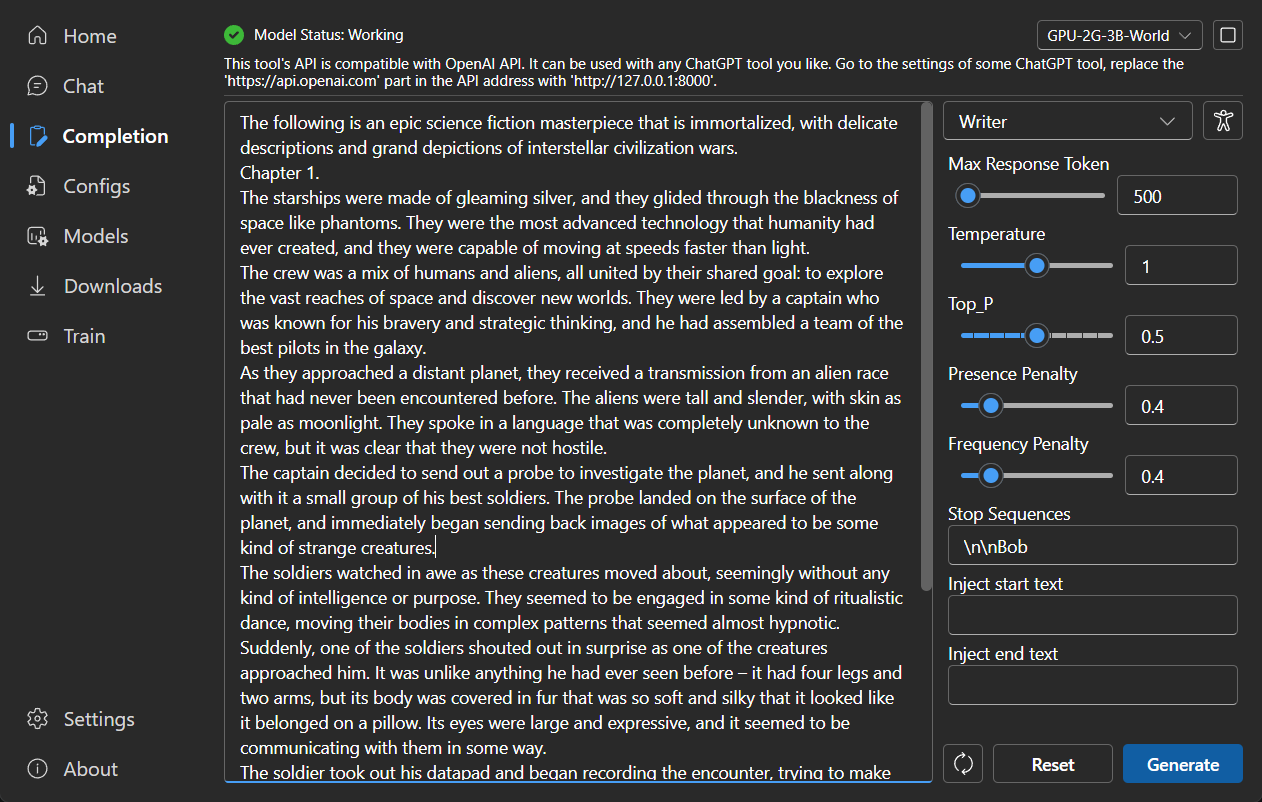

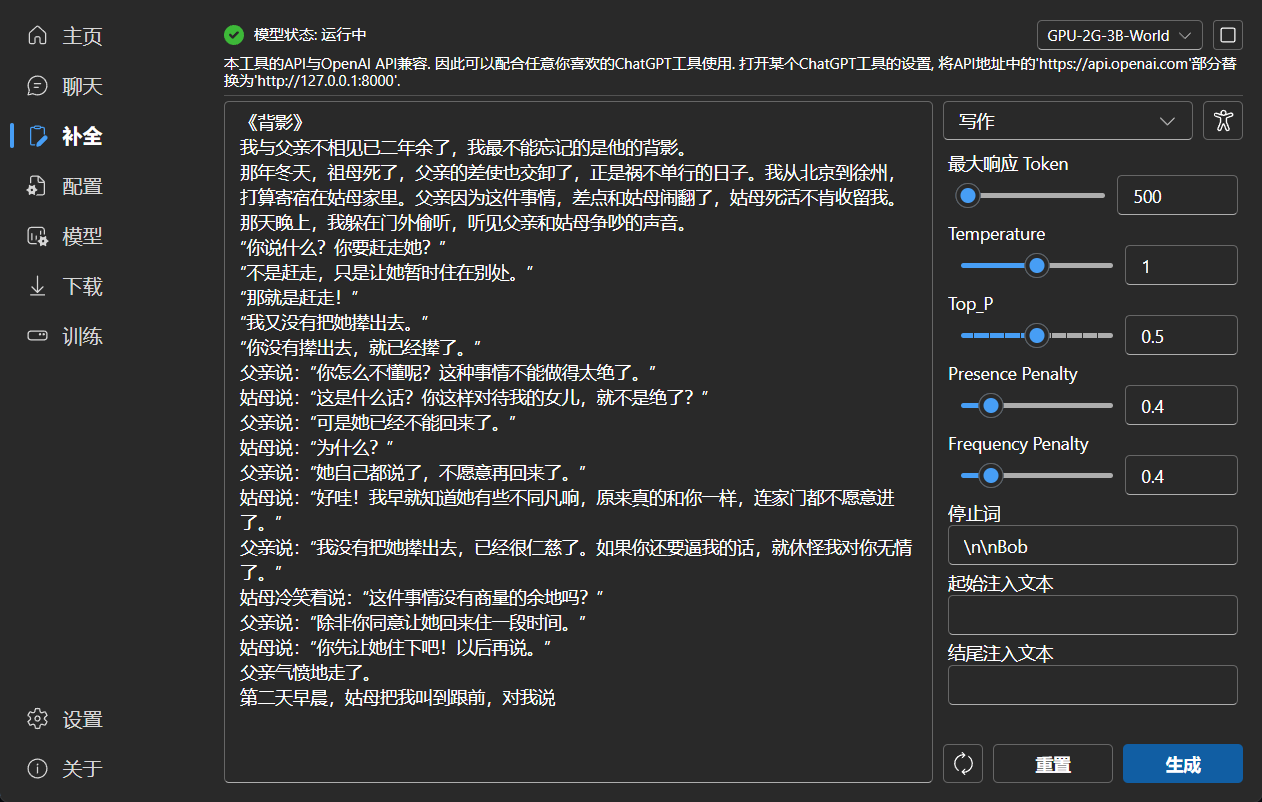

### Completion

|

||||

|

||||

|

||||

|

||||

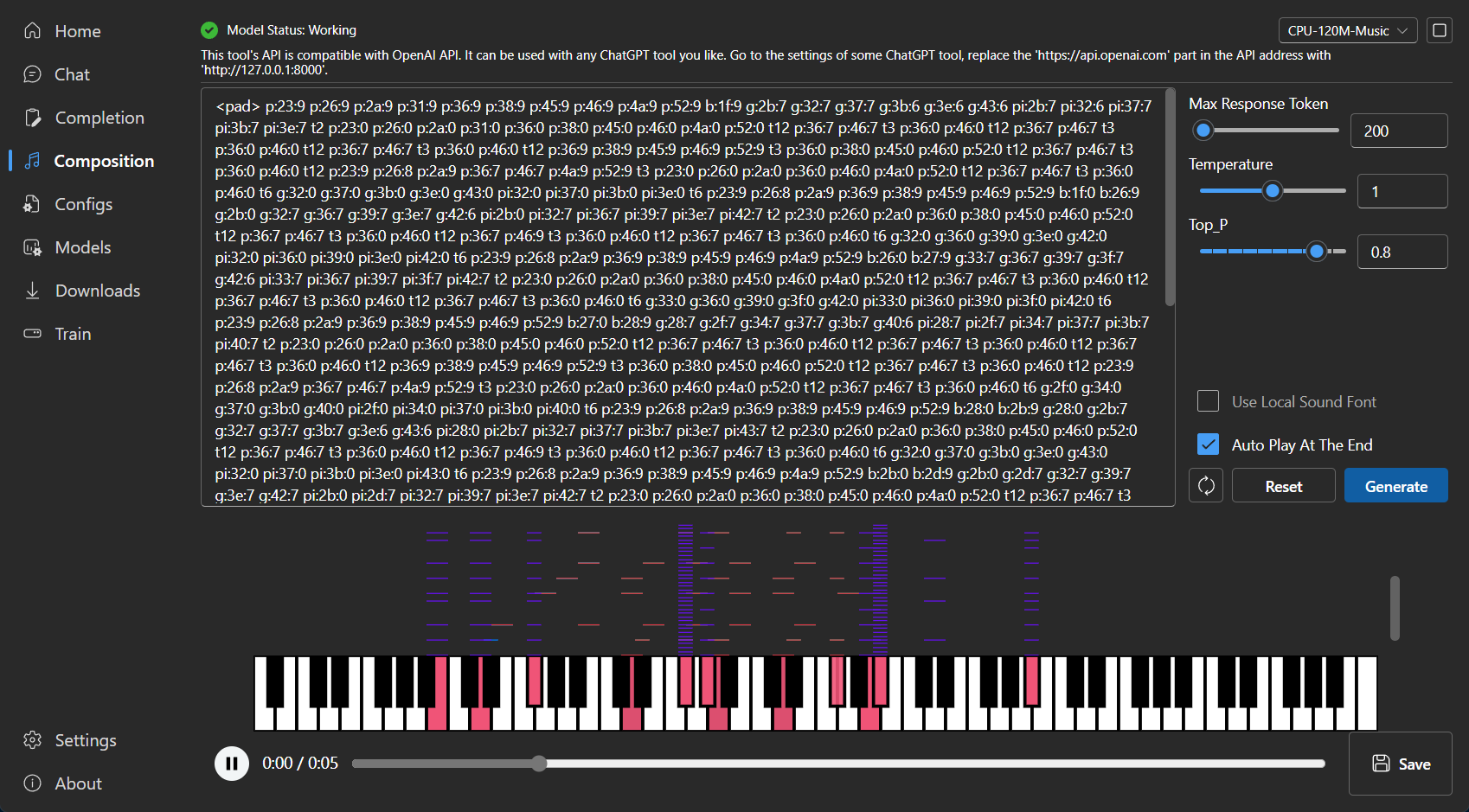

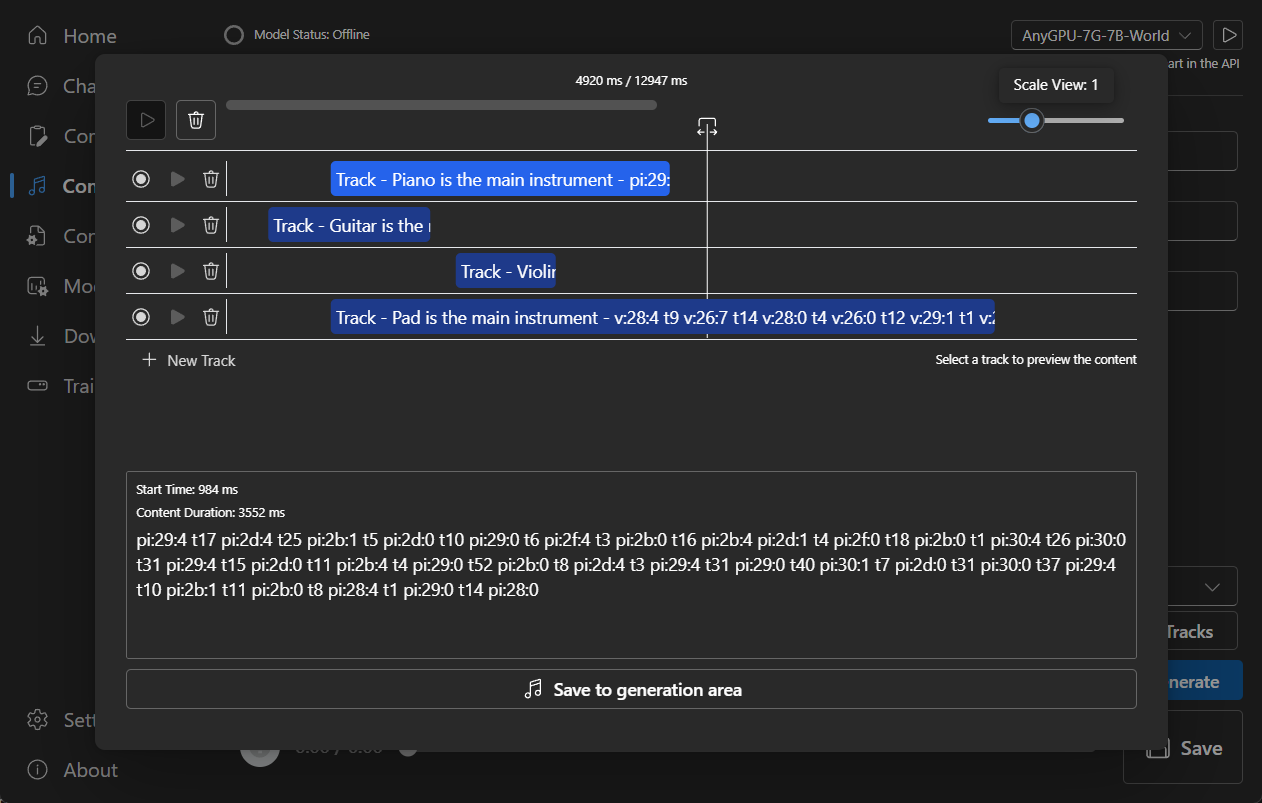

### Composition

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Configuration

|

||||

|

||||

|

||||

|

||||

|

||||

### Model Management

|

||||

|

||||

|

||||

|

||||

|

||||

### Download Management

|

||||

|

||||

|

||||

145

README_JA.md

145

README_JA.md

@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -12,7 +12,6 @@

|

||||

|

||||

[![license][license-image]][license-url]

|

||||

[![release][release-image]][release-url]

|

||||

[![py-version][py-version-image]][py-version-url]

|

||||

|

||||

[English](README.md) | [简体中文](README_ZH.md) | 日本語

|

||||

|

||||

@ -22,7 +21,7 @@

|

||||

[![MacOS][MacOS-image]][MacOS-url]

|

||||

[![Linux][Linux-image]][Linux-url]

|

||||

|

||||

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [プレビュー](#Preview) | [ダウンロード][download-url] | [シンプルなデプロイの例](#Simple-Deploy-Example) | [サーバーデプロイ例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples) | [MIDIハードウェア入力](#MIDI-Input)

|

||||

[FAQs](https://github.com/josStorer/RWKV-Runner/wiki/FAQs) | [プレビュー](#Preview) | [ダウンロード][download-url] | [サーバーデプロイ例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

|

||||

[license-image]: http://img.shields.io/badge/license-MIT-blue.svg

|

||||

|

||||

@ -32,10 +31,6 @@

|

||||

|

||||

[release-url]: https://github.com/josStorer/RWKV-Runner/releases/latest

|

||||

|

||||

[py-version-image]: https://img.shields.io/pypi/pyversions/fastapi.svg

|

||||

|

||||

[py-version-url]: https://github.com/josStorer/RWKV-Runner/tree/master/backend-python

|

||||

|

||||

[download-url]: https://github.com/josStorer/RWKV-Runner/releases

|

||||

|

||||

[Windows-image]: https://img.shields.io/badge/-Windows-blue?logo=windows

|

||||

@ -52,71 +47,29 @@

|

||||

|

||||

</div>

|

||||

|

||||

## ヒント

|

||||

#### デフォルトの設定はカスタム CUDA カーネルアクセラレーションを有効にしています。互換性の問題が発生する可能性がある場合は、コンフィグページに移動し、`Use Custom CUDA kernel to Accelerate` をオフにしてください。

|

||||

|

||||

- サーバーに [backend-python](./backend-python/)

|

||||

をデプロイし、このプログラムをクライアントとして使用することができます。設定された`API URL`にサーバーアドレスを入力してください。

|

||||

#### Windows Defender がこれをウイルスだと主張する場合は、[v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip) をダウンロードして最新版に自動更新させるか、信頼済みリストに追加してみてください (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`)。

|

||||

|

||||

- もし、あなたがデプロイし、外部に公開するサービスを提供している場合、APIゲートウェイを使用してリクエストのサイズを制限し、

|

||||

長すぎるプロンプトの提出がリソースを占有しないようにしてください。さらに、実際の状況に応じて、リクエストの max_tokens

|

||||

の上限を制限してください:https://github.com/josStorer/RWKV-Runner/blob/master/backend-python/utils/rwkv.py#L567

|

||||

、デフォルトは le=102400 ですが、極端な場合には単一の応答が大量のリソースを消費する可能性があります。

|

||||

|

||||

- デフォルトの設定はカスタム CUDA カーネルアクセラレーションを有効にしています。互換性の問題 (文字化けを出力する)

|

||||

が発生する可能性がある場合は、コンフィグページに移動し、`Use Custom CUDA kernel to Accelerate`

|

||||

をオフにしてください、あるいは、GPUドライバーをアップグレードしてみてください。

|

||||

|

||||

- Windows Defender

|

||||

がこれをウイルスだと主張する場合は、[v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip)

|

||||

をダウンロードして最新版に自動更新させるか、信頼済みリストに追加してみてください (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`)。

|

||||

|

||||

- 異なるタスクについては、API パラメータを調整することで、より良い結果を得ることができます。例えば、翻訳タスクの場合、Temperature

|

||||

を 1 に、Top_P を 0.3 に設定してみてください。

|

||||

#### 異なるタスクについては、API パラメータを調整することで、より良い結果を得ることができます。例えば、翻訳タスクの場合、Temperature を 1 に、Top_P を 0.3 に設定してみてください。

|

||||

|

||||

## 特徴

|

||||

|

||||

- RWKV モデル管理とワンクリック起動

|

||||

- フロントエンドとバックエンドの分離は、クライアントを使用しない場合でも、フロントエンドサービス、またはバックエンド推論サービス、またはWebUIを備えたバックエンド推論サービスを個別に展開することを可能にします。

|

||||

[シンプルなデプロイの例](#Simple-Deploy-Example) | [サーバーデプロイ例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

- OpenAI API と互換性があり、すべての ChatGPT クライアントを RWKV クライアントにします。モデル起動後、

|

||||

- OpenAI API と完全に互換性があり、すべての ChatGPT クライアントを RWKV クライアントにします。モデル起動後、

|

||||

http://127.0.0.1:8000/docs を開いて詳細をご覧ください。

|

||||

- 依存関係の自動インストールにより、軽量な実行プログラムのみを必要とします

|

||||

- 事前設定された多段階のVRAM設定、ほとんどのコンピュータで動作します。配置ページで、ストラテジーをWebGPUに切り替えると、AMD、インテル、その他のグラフィックカードでも動作します

|

||||

- ユーザーフレンドリーなチャット、完成、および作曲インターフェイスが含まれています。また、チャットプリセット、添付ファイルのアップロード、MIDIハードウェア入力、トラック編集もサポートしています。

|

||||

[プレビュー](#Preview) | [MIDIハードウェア入力](#MIDI-Input)

|

||||

- 内蔵WebUIオプション、Webサービスのワンクリック開始、ハードウェアリソースの共有

|

||||

- 分かりやすく操作しやすいパラメータ設定、各種操作ガイダンスプロンプトとともに

|

||||

- 2G から 32G の VRAM のコンフィグが含まれており、ほとんどのコンピュータで動作します

|

||||

- ユーザーフレンドリーなチャットと完成インタラクションインターフェースを搭載

|

||||

- 分かりやすく操作しやすいパラメータ設定

|

||||

- 内蔵モデル変換ツール

|

||||

- ダウンロード管理とリモートモデル検査機能内蔵

|

||||

- 内蔵のLoRA微調整機能を搭載しています (Windowsのみ)

|

||||

- このプログラムは、OpenAI ChatGPT、GPT Playground、Ollama などのクライアントとしても使用できます(設定ページで `API URL`

|

||||

と `API Key` を入力してください)

|

||||

- 内蔵のLoRA微調整機能を搭載しています

|

||||

- このプログラムは、OpenAI ChatGPTとGPT Playgroundのクライアントとしても使用できます

|

||||

- 多言語ローカライズ

|

||||

- テーマ切り替え

|

||||

- 自動アップデート

|

||||

|

||||

## Simple Deploy Example

|

||||

|

||||

```bash

|

||||

git clone https://github.com/josStorer/RWKV-Runner

|

||||

|

||||

# Then

|

||||

cd RWKV-Runner

|

||||

python ./backend-python/main.py #The backend inference service has been started, request /switch-model API to load the model, refer to the API documentation: http://127.0.0.1:8000/docs

|

||||

|

||||

# Or

|

||||

cd RWKV-Runner/frontend

|

||||

npm ci

|

||||

npm run build #Compile the frontend

|

||||

cd ..

|

||||

python ./backend-python/webui_server.py #Start the frontend service separately

|

||||

# Or

|

||||

python ./backend-python/main.py --webui #Start the frontend and backend service at the same time

|

||||

|

||||

# Help Info

|

||||

python ./backend-python/main.py -h

|

||||

```

|

||||

|

||||

## API 同時実行ストレステスト

|

||||

|

||||

```bash

|

||||

@ -138,8 +91,8 @@ body.json:

|

||||

|

||||

## 埋め込み API の例

|

||||

|

||||

注意: v1.4.0 では、埋め込み API の品質が向上しました。生成される結果は、以前のバージョンとは互換性がありません。

|

||||

もし、embeddings API を使って知識ベースなどを生成している場合は、再生成してください。

|

||||

Note: v1.4.0 has improved the quality of embeddings API. The generated results are not compatible

|

||||

with previous versions. If you are using embeddings API to generate knowledge bases or similar, please regenerate.

|

||||

|

||||

LangChain を使用している場合は、`OpenAIEmbeddings(openai_api_base="http://127.0.0.1:8000", openai_api_key="sk-")`

|

||||

を使用してください

|

||||

@ -179,100 +132,36 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")

|

||||

```

|

||||

|

||||

## MIDI Input

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

MIDIキーボードをお持ちでない場合、`Virtual Midi Controller 3 LE`

|

||||

などの仮想MIDI入力ソフトウェアを使用することができます。[loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip)

|

||||

を組み合わせて、通常のコンピュータキーボードをMIDI入力として使用できます。

|

||||

|

||||

### USB MIDI Connection

|

||||

|

||||

- USB MIDI devices are plug-and-play, and you can select your input device in the Composition page

|

||||

-

|

||||

|

||||

### Mac MIDI Bluetooth Connection

|

||||

|

||||

- For Mac users who want to use Bluetooth input,

|

||||

please install [Bluetooth MIDI Connect](https://apps.apple.com/us/app/bluetooth-midi-connect/id1108321791), then click

|

||||

the tray icon to connect after launching,

|

||||

afterwards, you can select your input device in the Composition page.

|

||||

-

|

||||

|

||||

### Windows MIDI Bluetooth Connection

|

||||

|

||||

- Windows seems to have implemented Bluetooth MIDI support only for UWP (Universal Windows Platform) apps. Therefore, it

|

||||

requires multiple steps to establish a connection. We need to create a local virtual MIDI device and then launch a UWP

|

||||

application. Through this UWP application, we will redirect Bluetooth MIDI input to the virtual MIDI device, and then

|

||||

this software will listen to the input from the virtual MIDI device.

|

||||

- So, first, you need to

|

||||

download [loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip)

|

||||

to create a virtual MIDI device. Click the plus sign in the bottom left corner to create the device.

|

||||

-

|

||||

- Next, you need to download [Bluetooth LE Explorer](https://apps.microsoft.com/detail/9N0ZTKF1QD98) to discover and

|

||||

connect to Bluetooth MIDI devices. Click "Start" to search for devices, and then click "Pair" to bind the MIDI device.

|

||||

-

|

||||

- Finally, you need to install [MIDIberry](https://apps.microsoft.com/detail/9N39720H2M05),

|

||||

This UWP application can redirect Bluetooth MIDI input to the virtual MIDI device. After launching it, double-click

|

||||

your actual Bluetooth MIDI device name in the input field, and in the output field, double-click the virtual MIDI

|

||||

device name we created earlier.

|

||||

-

|

||||

- Now, you can select the virtual MIDI device as the input in the Composition page. Bluetooth LE Explorer no longer

|

||||

needs to run, and you can also close the loopMIDI window, it will run automatically in the background. Just keep

|

||||

MIDIberry open.

|

||||

-

|

||||

|

||||

## 関連リポジトリ:

|

||||

|

||||

- RWKV-5-World: https://huggingface.co/BlinkDL/rwkv-5-world/tree/main

|

||||

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main

|

||||

- RWKV-4-Raven: https://huggingface.co/BlinkDL/rwkv-4-raven/tree/main

|

||||

- ChatRWKV: https://github.com/BlinkDL/ChatRWKV

|

||||

- RWKV-LM: https://github.com/BlinkDL/RWKV-LM

|

||||

- RWKV-LM-LoRA: https://github.com/Blealtan/RWKV-LM-LoRA

|

||||

- RWKV-v5-lora: https://github.com/JL-er/RWKV-v5-lora

|

||||

- MIDI-LLM-tokenizer: https://github.com/briansemrau/MIDI-LLM-tokenizer

|

||||

- ai00_rwkv_server: https://github.com/cgisky1980/ai00_rwkv_server

|

||||

- rwkv.cpp: https://github.com/saharNooby/rwkv.cpp

|

||||

- web-rwkv-py: https://github.com/cryscan/web-rwkv-py

|

||||

- web-rwkv: https://github.com/cryscan/web-rwkv

|

||||

|

||||

## Preview

|

||||

## プレビュー

|

||||

|

||||

### ホームページ

|

||||

|

||||

|

||||

|

||||

|

||||

### チャット

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### 補完

|

||||

|

||||

|

||||

|

||||

### 作曲

|

||||

|

||||

Tip: You can download https://github.com/josStorer/sgm_plus and unzip it to the program's `assets/sound-font` directory

|

||||

to use it as an offline sound source. Please note that if you are compiling the program from source code, do not place

|

||||

it in the source code directory.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### コンフィグ

|

||||

|

||||

|

||||

|

||||

|

||||

### モデル管理

|

||||

|

||||

|

||||

|

||||

|

||||

### ダウンロード管理

|

||||

|

||||

|

||||

124

README_ZH.md

124

README_ZH.md

@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -11,7 +11,6 @@ API兼容的接口,这意味着一切ChatGPT客户端都是RWKV客户端。

|

||||

|

||||

[![license][license-image]][license-url]

|

||||

[![release][release-image]][release-url]

|

||||

[![py-version][py-version-image]][py-version-url]

|

||||

|

||||

[English](README.md) | 简体中文 | [日本語](README_JA.md)

|

||||

|

||||

@ -21,7 +20,7 @@ API兼容的接口,这意味着一切ChatGPT客户端都是RWKV客户端。

|

||||

[![MacOS][MacOS-image]][MacOS-url]

|

||||

[![Linux][Linux-image]][Linux-url]

|

||||

|

||||

[视频演示](https://www.bilibili.com/video/BV1hM4y1v76R) | [疑难解答](https://www.bilibili.com/read/cv23921171) | [预览](#Preview) | [下载][download-url] | [懒人包](https://pan.baidu.com/s/1zdzZ_a0uM3gDqi6pXIZVAA?pwd=1111) | [简明服务部署示例](#Simple-Deploy-Example) | [服务器部署示例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples) | [MIDI硬件输入](#MIDI-Input)

|

||||

[视频演示](https://www.bilibili.com/video/BV1hM4y1v76R) | [疑难解答](https://www.bilibili.com/read/cv23921171) | [预览](#Preview) | [下载][download-url] | [懒人包](https://pan.baidu.com/s/1zdzZ_a0uM3gDqi6pXIZVAA?pwd=1111) | [服务器部署示例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

|

||||

[license-image]: http://img.shields.io/badge/license-MIT-blue.svg

|

||||

|

||||

@ -31,10 +30,6 @@ API兼容的接口,这意味着一切ChatGPT客户端都是RWKV客户端。

|

||||

|

||||

[release-url]: https://github.com/josStorer/RWKV-Runner/releases/latest

|

||||

|

||||

[py-version-image]: https://img.shields.io/pypi/pyversions/fastapi.svg

|

||||

|

||||

[py-version-url]: https://github.com/josStorer/RWKV-Runner/tree/master/backend-python

|

||||

|

||||

[download-url]: https://github.com/josStorer/RWKV-Runner/releases

|

||||

|

||||

[Windows-image]: https://img.shields.io/badge/-Windows-blue?logo=windows

|

||||

@ -51,65 +46,28 @@ API兼容的接口,这意味着一切ChatGPT客户端都是RWKV客户端。

|

||||

|

||||

</div>

|

||||

|

||||

## 小贴士

|

||||

#### 预设配置已经开启自定义CUDA算子加速,速度更快,且显存消耗更少。如果你遇到可能的兼容性问题,前往配置页面,关闭`使用自定义CUDA算子加速`

|

||||

|

||||

- 你可以在服务器部署[backend-python](./backend-python/),然后将此程序仅用作客户端,在设置的`API URL`中填入你的服务器地址

|

||||

#### 如果Windows Defender说这是一个病毒,你可以尝试下载[v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip),然后让其自动更新到最新版,或添加信任 (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`)

|

||||

|

||||

- 如果你正在部署并对外提供公开服务,请通过API网关限制请求大小,避免过长的prompt提交占用资源。此外,请根据你的实际情况,限制请求的

|

||||

max_tokens 上限: https://github.com/josStorer/RWKV-Runner/blob/master/backend-python/utils/rwkv.py#L567,

|

||||

默认le=102400, 这可能导致极端情况下单个响应消耗大量资源

|

||||

|

||||

- 预设配置已经开启自定义CUDA算子加速,速度更快,且显存消耗更少。如果你遇到可能的兼容性(输出乱码)

|

||||

问题,前往配置页面,关闭`使用自定义CUDA算子加速`,或更新你的显卡驱动

|

||||

|

||||

- 如果 Windows Defender

|

||||

说这是一个病毒,你可以尝试下载[v1.3.7_win.zip](https://github.com/josStorer/RWKV-Runner/releases/download/v1.3.7/RWKV-Runner_win.zip),

|

||||

然后让其自动更新到最新版,或添加信任 (`Windows Security` -> `Virus & threat protection` -> `Manage settings` -> `Exclusions` -> `Add or remove exclusions` -> `Add an exclusion` -> `Folder` -> `RWKV-Runner`)

|

||||

|

||||

- 对于不同的任务,调整API参数会获得更好的效果,例如对于翻译任务,你可以尝试设置Temperature为1,Top_P为0.3

|

||||

#### 对于不同的任务,调整API参数会获得更好的效果,例如对于翻译任务,你可以尝试设置Temperature为1,Top_P为0.3

|

||||

|

||||

## 功能

|

||||

|

||||

- RWKV模型管理,一键启动

|

||||

- 前后端分离,如果你不想使用客户端,也允许单独部署前端服务,或后端推理服务,或具有WebUI的后端推理服务。

|

||||

[简明服务部署示例](#Simple-Deploy-Example) | [服务器部署示例](https://github.com/josStorer/RWKV-Runner/tree/master/deploy-examples)

|

||||

- 与OpenAI API兼容,一切ChatGPT客户端,都是RWKV客户端。启动模型后,打开 http://127.0.0.1:8000/docs 查看API文档

|

||||

- 与OpenAI API完全兼容,一切ChatGPT客户端,都是RWKV客户端。启动模型后,打开 http://127.0.0.1:8000/docs 查看详细内容

|

||||

- 全自动依赖安装,你只需要一个轻巧的可执行程序

|

||||

- 预设多级显存配置,几乎在各种电脑上工作良好。通过配置页面切换Strategy到WebGPU,还可以在AMD,Intel等显卡上运行

|

||||

- 自带用户友好的聊天,续写,作曲交互页面。支持聊天预设,附件上传,MIDI硬件输入及音轨编辑。

|

||||

[预览](#Preview) | [MIDI硬件输入](#MIDI-Input)

|

||||

- 内置WebUI选项,一键启动Web服务,共享硬件资源

|

||||

- 易于理解和操作的参数配置,及各类操作引导提示

|

||||

- 预设了2G至32G显存的配置,几乎在各种电脑上工作良好

|

||||

- 自带用户友好的聊天和续写交互页面

|

||||

- 易于理解和操作的参数配置

|

||||

- 内置模型转换工具

|

||||

- 内置下载管理和远程模型检视

|

||||

- 内置一键LoRA微调 (仅限Windows)

|

||||

- 也可用作 OpenAI ChatGPT, GPT Playground, Ollama 等服务的客户端 (在设置内填写API URL和API Key)

|

||||

- 内置一键LoRA微调

|

||||

- 也可用作 OpenAI ChatGPT 和 GPT Playground 客户端

|

||||

- 多语言本地化

|

||||

- 主题切换

|

||||

- 自动更新

|

||||

|

||||

## Simple Deploy Example

|

||||

|

||||

```bash

|

||||

git clone https://github.com/josStorer/RWKV-Runner

|

||||

|

||||

# 然后

|

||||

cd RWKV-Runner

|

||||

python ./backend-python/main.py #后端推理服务已启动, 调用/switch-model载入模型, 参考API文档: http://127.0.0.1:8000/docs

|

||||

|

||||

# 或者

|

||||

cd RWKV-Runner/frontend

|

||||

npm ci

|

||||

npm run build #编译前端

|

||||

cd ..

|

||||

python ./backend-python/webui_server.py #单独启动前端服务

|

||||

# 或者

|

||||

python ./backend-python/main.py --webui #同时启动前后端服务

|

||||

|

||||

# 帮助参数

|

||||

python ./backend-python/main.py -h

|

||||

```

|

||||

|

||||

## API并发压力测试

|

||||

|

||||

```bash

|

||||

@ -170,90 +128,36 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

print(f"{embeddings_cos_sim[i]:.10f} - {values[i]}")

|

||||

```

|

||||

|

||||

## MIDI Input

|

||||

|

||||

小贴士: 你可以下载 https://github.com/josStorer/sgm_plus, 并解压到程序的`assets/sound-font`目录, 以使用离线音源. 注意,

|

||||

如果你正在从源码编译程序, 请不要将其放置在源码目录中

|

||||

|

||||

如果你没有MIDI键盘, 你可以使用像 `Virtual Midi Controller 3 LE` 这样的虚拟MIDI输入软件,

|

||||

配合[loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip), 使用普通电脑键盘作为MIDI输入

|

||||

|

||||

### USB MIDI 连接

|

||||

|

||||

- USB MIDI设备是即插即用的, 你能够在作曲页面选择你的输入设备

|

||||

-

|

||||

|

||||

### Mac MIDI 蓝牙连接

|

||||

|

||||

- 对于想要使用蓝牙输入的Mac用户,

|

||||

请安装[Bluetooth MIDI Connect](https://apps.apple.com/us/app/bluetooth-midi-connect/id1108321791), 启动后点击托盘连接,

|

||||

之后你可以在作曲页面选择你的输入设备

|

||||

-

|

||||

|

||||

### Windows MIDI 蓝牙连接

|

||||

|

||||

- Windows似乎只为UWP实现了蓝牙MIDI支持, 因此需要多个步骤进行连接, 我们需要创建一个本地的虚拟MIDI设备, 然后启动一个UWP应用,

|

||||

通过此UWP应用将蓝牙MIDI输入重定向到虚拟MIDI设备, 然后本软件监听虚拟MIDI设备的输入

|

||||

- 因此, 首先你需要下载[loopMIDI](https://www.tobias-erichsen.de/wp-content/uploads/2020/01/loopMIDISetup_1_0_16_27.zip),

|

||||

用于创建虚拟MIDI设备, 点击左下角的加号创建设备

|

||||

-

|

||||

- 然后, 你需要下载[Bluetooth LE Explorer](https://apps.microsoft.com/detail/9N0ZTKF1QD98), 以发现并连接蓝牙MIDI设备,

|

||||

点击Start搜索设备, 然后点击Pair绑定MIDI设备

|

||||

-

|

||||

- 最后, 你需要安装[MIDIberry](https://apps.microsoft.com/detail/9N39720H2M05), 这个UWP应用能将MIDI蓝牙输入重定向到虚拟MIDI设备,

|

||||

启动后, 在输入栏, 双击你实际的蓝牙MIDI设备名称, 在输出栏, 双击我们先前创建的虚拟MIDI设备名称

|

||||

-

|

||||

- 现在, 你可以在作曲页面选择虚拟MIDI设备作为输入. Bluetooth LE Explorer不再需要运行, loopMIDI窗口也可以退出, 它会自动在后台运行,

|

||||

仅保持MIDIberry打开即可

|

||||

-

|

||||

|

||||

## 相关仓库:

|

||||

|

||||

- RWKV-5-World: https://huggingface.co/BlinkDL/rwkv-5-world/tree/main

|

||||

- RWKV-4-World: https://huggingface.co/BlinkDL/rwkv-4-world/tree/main

|

||||

- RWKV-4-Raven: https://huggingface.co/BlinkDL/rwkv-4-raven/tree/main

|

||||

- ChatRWKV: https://github.com/BlinkDL/ChatRWKV

|

||||

- RWKV-LM: https://github.com/BlinkDL/RWKV-LM

|

||||

- RWKV-LM-LoRA: https://github.com/Blealtan/RWKV-LM-LoRA

|

||||

- RWKV-v5-lora: https://github.com/JL-er/RWKV-v5-lora

|

||||

- MIDI-LLM-tokenizer: https://github.com/briansemrau/MIDI-LLM-tokenizer

|

||||

- ai00_rwkv_server: https://github.com/cgisky1980/ai00_rwkv_server

|

||||

- rwkv.cpp: https://github.com/saharNooby/rwkv.cpp

|

||||

- web-rwkv-py: https://github.com/cryscan/web-rwkv-py

|

||||

- web-rwkv: https://github.com/cryscan/web-rwkv

|

||||

|

||||

## Preview

|

||||

|

||||

### 主页

|

||||

|

||||

|

||||

|

||||

|

||||

### 聊天

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### 续写

|

||||

|

||||

|

||||

|

||||

### 作曲

|

||||

|

||||

小贴士: 你可以下载 https://github.com/josStorer/sgm_plus, 并解压到程序的`assets/sound-font`目录, 以使用离线音源. 注意,

|

||||

如果你正在从源码编译程序, 请不要将其放置在源码目录中

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### 配置

|

||||

|

||||

|

||||

|

||||

|

||||

### 模型管理

|

||||

|

||||

|

||||

|

||||

|

||||

### 下载管理

|

||||

|

||||

|

||||

@ -1,24 +1,13 @@

|

||||

package backend_golang

|

||||

|

||||

import (

|

||||