Compare commits

1 Commits

master...dependabot

| Author | SHA1 | Date | |
|---|---|---|---|
| | 1ee7519f01 | | |

4

.github/workflows/docker.yml

vendored


@ -28,7 +28,7 @@ jobs:

|

||||

|

||||

- name: Get lowercase string for the repository name

|

||||

id: lowercase-repo-name

|

||||

uses: ASzc/change-string-case-action@v2

|

||||

uses: ASzc/change-string-case-action@v6

|

||||

with:

|

||||

string: ${{ github.event.repository.name }}

|

||||

|

||||

@ -121,7 +121,7 @@ jobs:

|

||||

steps:

|

||||

- name: Get lowercase string for the repository name

|

||||

id: lowercase-repo-name

|

||||

uses: ASzc/change-string-case-action@v2

|

||||

uses: ASzc/change-string-case-action@v6

|

||||

with:

|

||||

string: ${{ github.event.repository.name }}

|

||||

|

||||

|

||||

17

.github/workflows/pre-release.yml

vendored


@ -18,11 +18,11 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- uses: actions/setup-python@v5

|

||||

id: cp310

|

||||

with:

|

||||

python-version: "3.10"

|

||||

python-version: '3.10'

|

||||

- uses: crazy-max/ghaction-chocolatey@v3

|

||||

with:

|

||||

args: install upx

|

||||

@ -39,7 +39,7 @@ jobs:

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../include" -Destination "py310/include" -Recurse

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../libs" -Destination "py310/libs" -Recurse

|

||||

./py310/python -m pip install cyac==1.9

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

(Get-Content -Path ./backend-golang/app.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/app.go

|

||||

@ -60,17 +60,18 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_linux_x86_64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_linux_x86_64 -O ./backend-rust/web-rwkv-converter

|

||||

sudo apt-get update

|

||||

sudo apt-get install upx

|

||||

sudo apt-get install build-essential libgtk-3-dev libwebkit2gtk-4.0-dev libasound2-dev

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

@ -91,14 +92,15 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_darwin_aarch64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_darwin_aarch64 -O ./backend-rust/web-rwkv-converter

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

@ -112,3 +114,4 @@ jobs:

|

||||

with:

|

||||

name: RWKV-Runner_macos_universal.zip

|

||||

path: build/bin/RWKV-Runner_macos_universal.zip

|

||||

|

||||

|

||||

18

.github/workflows/release.yml

vendored


@ -18,7 +18,7 @@ jobs:

|

||||

with:

|

||||

ref: master

|

||||

|

||||

- uses: jossef/action-set-json-field@v2.2

|

||||

- uses: jossef/action-set-json-field@v2.1

|

||||

with:

|

||||

file: manifest.json

|

||||

field: version

|

||||

@ -43,11 +43,11 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- uses: actions/setup-python@v5

|

||||

id: cp310

|

||||

with:

|

||||

python-version: "3.10"

|

||||

python-version: '3.10'

|

||||

- uses: crazy-max/ghaction-chocolatey@v3

|

||||

with:

|

||||

args: install upx

|

||||

@ -64,7 +64,7 @@ jobs:

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../include" -Destination "py310/include" -Recurse

|

||||

Copy-Item -Path "${{ steps.cp310.outputs.python-path }}/../libs" -Destination "py310/libs" -Recurse

|

||||

./py310/python -m pip install cyac==1.9

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

del ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

(Get-Content -Path ./backend-golang/app.go) -replace "//go:custom_build windows ", "" | Set-Content -Path ./backend-golang/app.go

|

||||

@ -83,17 +83,18 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_linux_x86_64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_linux_x86_64 -O ./backend-rust/web-rwkv-converter

|

||||

sudo apt-get update

|

||||

sudo apt-get install upx

|

||||

sudo apt-get install build-essential libgtk-3-dev libwebkit2gtk-4.0-dev libasound2-dev

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.dylib

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

@ -112,14 +113,15 @@ jobs:

|

||||

ref: master

|

||||

- uses: actions/setup-go@v5

|

||||

with:

|

||||

go-version: "1.20.5"

|

||||

go-version: '1.20.5'

|

||||

- run: |

|

||||

wget https://github.com/josStorer/ai00_rwkv_server/releases/latest/download/webgpu_server_darwin_aarch64 -O ./backend-rust/webgpu_server

|

||||

wget https://github.com/josStorer/web-rwkv-converter/releases/latest/download/web-rwkv-converter_darwin_aarch64 -O ./backend-rust/web-rwkv-converter

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@v2.8.0

|

||||

go install github.com/wailsapp/wails/v2/cmd/wails@latest

|

||||

rm ./backend-python/rwkv_pip/wkv_cuda.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv5.pyd

|

||||

rm ./backend-python/rwkv_pip/rwkv6.pyd

|

||||

rm ./backend-python/rwkv_pip/beta/wkv_cuda.pyd

|

||||

rm ./backend-python/get-pip.py

|

||||

rm ./backend-python/rwkv_pip/cpp/rwkv.dll

|

||||

rm ./backend-python/rwkv_pip/cpp/librwkv.so

|

||||

|

||||

@ -1,26 +1,25 @@

|

||||

## v1.8.4

|

||||

## Changes

|

||||

|

||||

- fix f05a4a, __init__.py is not embedded

|

||||

### Features

|

||||

|

||||

## v1.8.3

|

||||

|

||||

### Deprecations

|

||||

|

||||

- rwkv-beta is deprecated

|

||||

|

||||

### Upgrades

|

||||

|

||||

- bump webgpu(python) (https://github.com/cryscan/web-rwkv-py)

|

||||

- sync https://github.com/JL-er/RWKV-PEFT (LoRA)

|

||||

|

||||

### Improvements

|

||||

|

||||

- improve default LoRA fine-tune params

|

||||

- add Docker support (#291) @LonghronShen

|

||||

|

||||

### Fixes

|

||||

|

||||

- fix #342, #345: cannot import name 'packaging' from 'pkg_resources'

|

||||

- fix the huge error prompt that pops up when running in webgpu mode

|

||||

- fix a generation exception caused by potentially dangerous regex being passed into the stop array

|

||||

- fix max_tokens parameter of Chat page not being passed to backend

|

||||

- fix the issue where penalty_decay and global_penalty are not being passed to the backend default config when running

|

||||

the model through client

|

||||

|

||||

### Improvements

|

||||

|

||||

- prevent 'torch' has no attribute 'cuda' error in torch_gc, so user can use CPU or WebGPU (#302)

|

||||

|

||||

### Chores

|

||||

|

||||

- bump dependencies

|

||||

- add pre-release workflow

|

||||

- dep_check.py now ignores GPUtil

|

||||

|

||||

## Install

|

||||

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -94,8 +94,7 @@ English | [简体中文](README_ZH.md) | [日本語](README_JA.md)

|

||||

- Built-in model conversion tool.

|

||||

- Built-in download management and remote model inspection.

|

||||

- Built-in one-click LoRA Finetune. (Windows Only)

|

||||

- Can also be used as an OpenAI ChatGPT, GPT-Playground, Ollama and more clients. (Fill in the API URL and API Key in

|

||||

Settings page)

|

||||

- Can also be used as an OpenAI ChatGPT and GPT-Playground client. (Fill in the API URL and API Key in Settings page)

|

||||

- Multilingual localization.

|

||||

- Theme switching.

|

||||

- Automatic updates.

|

||||

@ -248,13 +247,13 @@ computer keyboard as MIDI input.

|

||||

|

||||

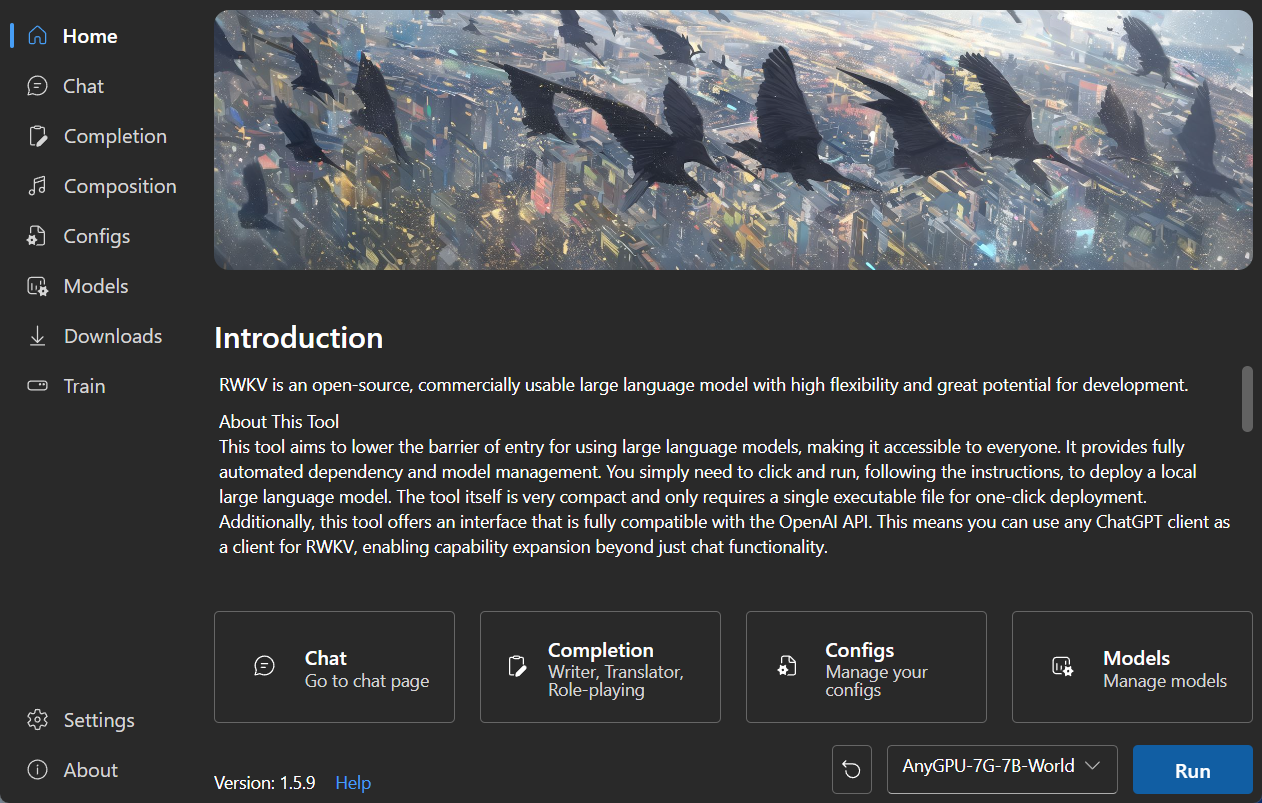

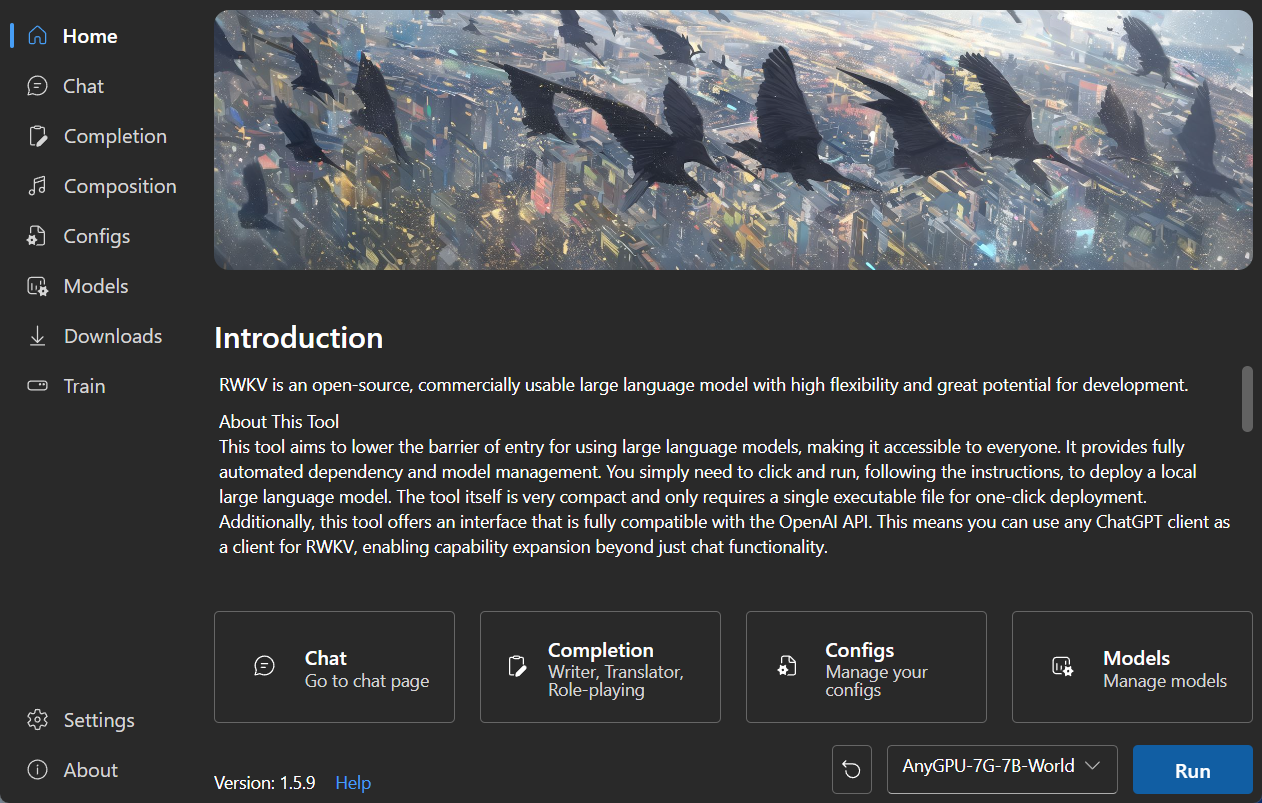

### Homepage


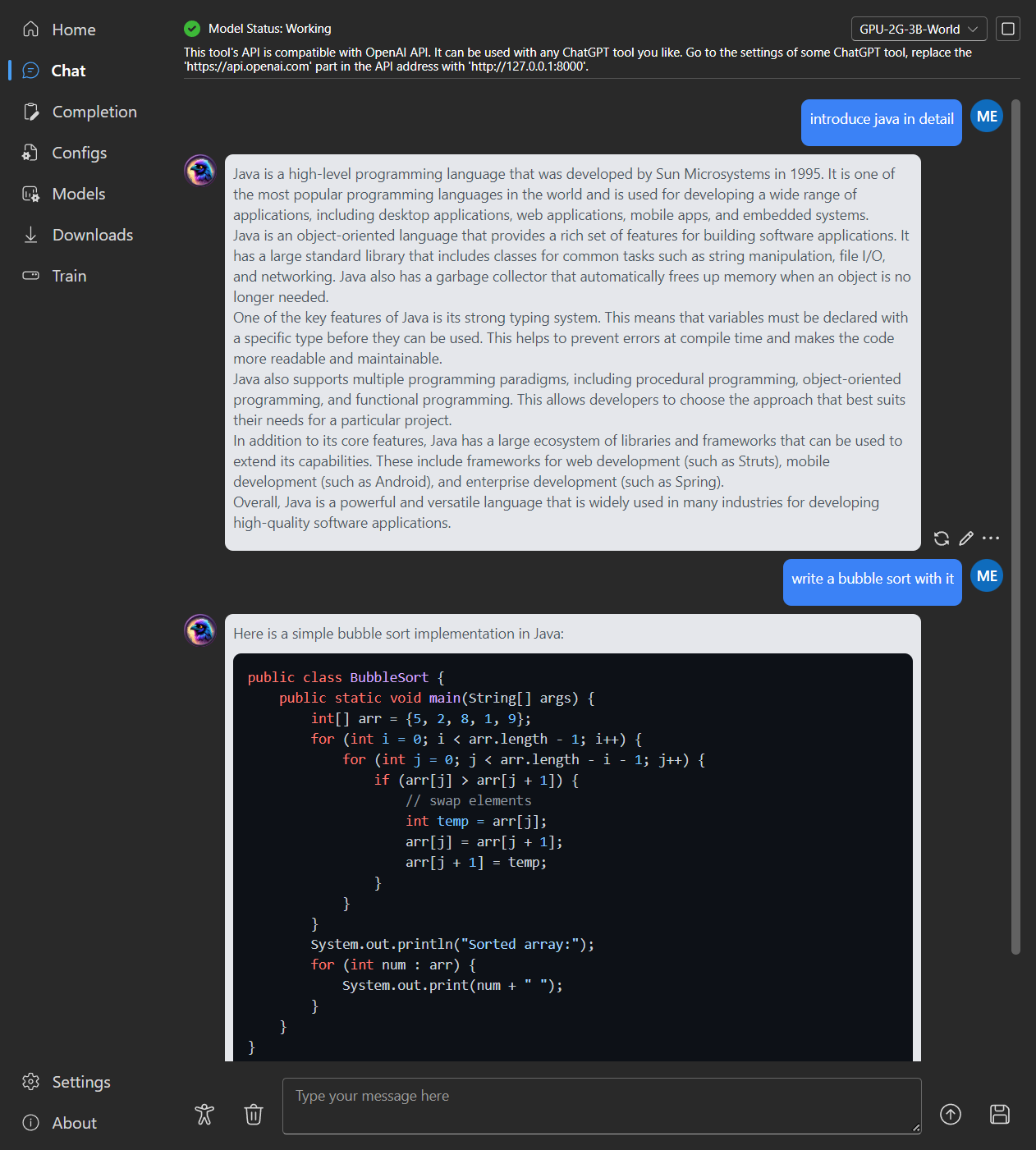

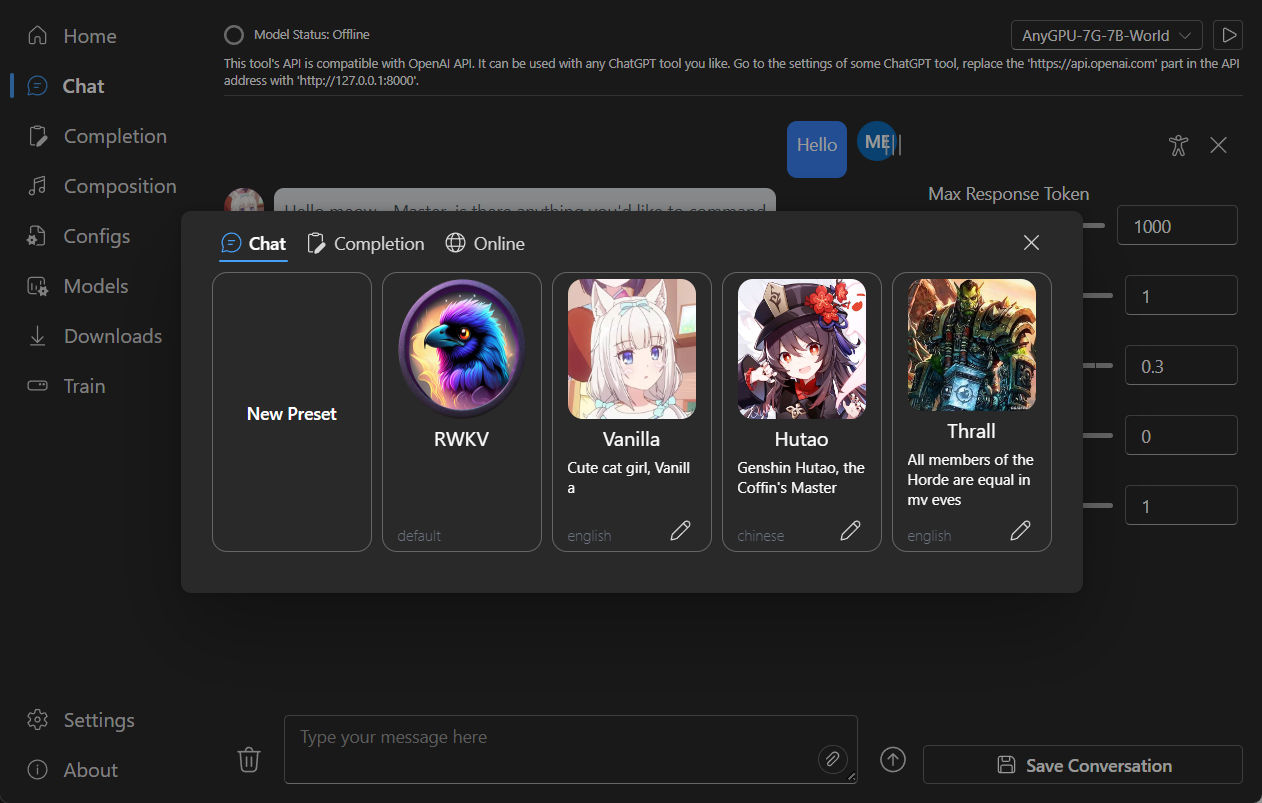

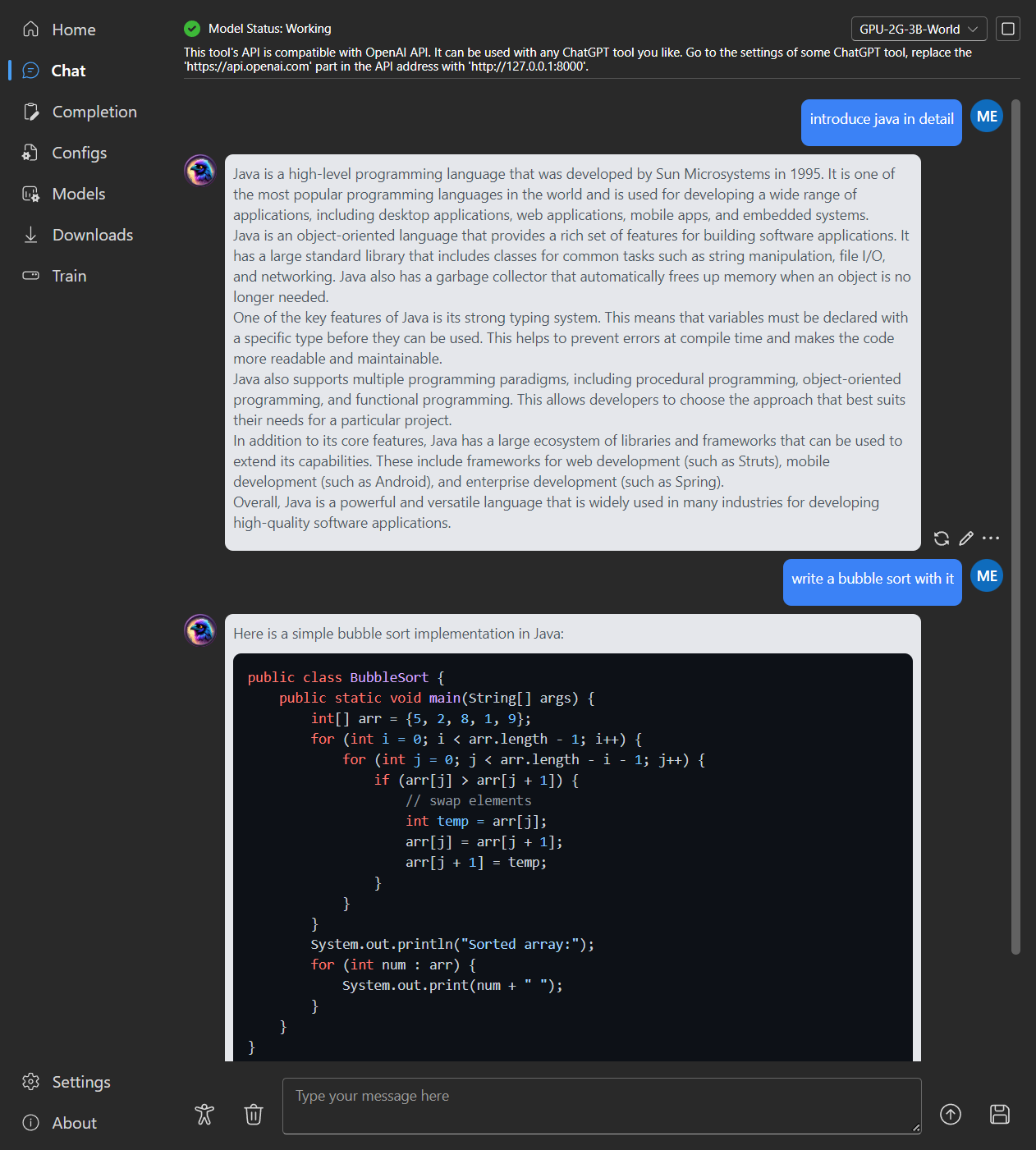

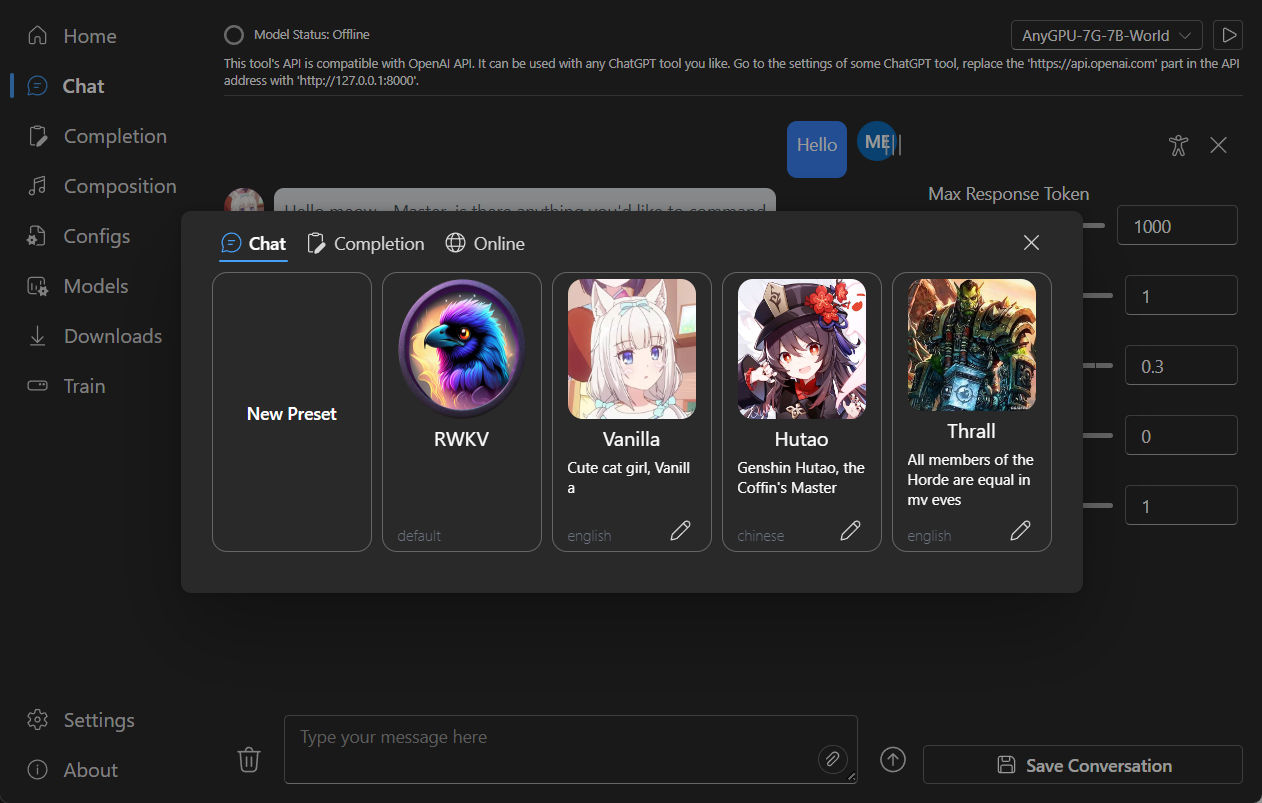

### Chat


### Completion


10

README_JA.md


@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -89,8 +89,8 @@

|

||||

- 内蔵モデル変換ツール

|

||||

- ダウンロード管理とリモートモデル検査機能内蔵

|

||||

- 内蔵のLoRA微調整機能を搭載しています (Windowsのみ)

|

||||

- このプログラムは、OpenAI ChatGPT、GPT Playground、Ollama などのクライアントとしても使用できます(設定ページで `API URL`

|

||||

と `API Key` を入力してください)

|

||||

- このプログラムは、OpenAI ChatGPTとGPT Playgroundのクライアントとしても使用できます(設定ページで `API URL` と `API Key`

|

||||

を入力してください)

|

||||

- 多言語ローカライズ

|

||||

- テーマ切り替え

|

||||

- 自動アップデート

|

||||

@ -244,13 +244,13 @@ MIDIキーボードをお持ちでない場合、`Virtual Midi Controller 3 LE`

|

||||

|

||||

### ホームページ


### チャット


### 補完


@ -1,5 +1,5 @@

|

||||

<p align="center">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/65c46133-7506-4b54-b64f-fe49f188afa7">

|

||||

<img src="https://github.com/josStorer/RWKV-Runner/assets/13366013/d24834b0-265d-45f5-93c0-fac1e19562af">

|

||||

</p>

|

||||

|

||||

<h1 align="center">RWKV Runner</h1>

|

||||

@ -83,7 +83,7 @@ API兼容的接口,这意味着一切ChatGPT客户端都是RWKV客户端。

|

||||

- 内置模型转换工具

|

||||

- 内置下载管理和远程模型检视

|

||||

- 内置一键LoRA微调 (仅限Windows)

|

||||

- 也可用作 OpenAI ChatGPT, GPT Playground, Ollama 等服务的客户端 (在设置内填写API URL和API Key)

|

||||

- 也可用作 OpenAI ChatGPT 和 GPT Playground 客户端 (在设置内填写API URL和API Key)

|

||||

- 多语言本地化

|

||||

- 主题切换

|

||||

- 自动更新

|

||||

@ -226,13 +226,13 @@ for i in np.argsort(embeddings_cos_sim)[::-1]:

|

||||

|

||||

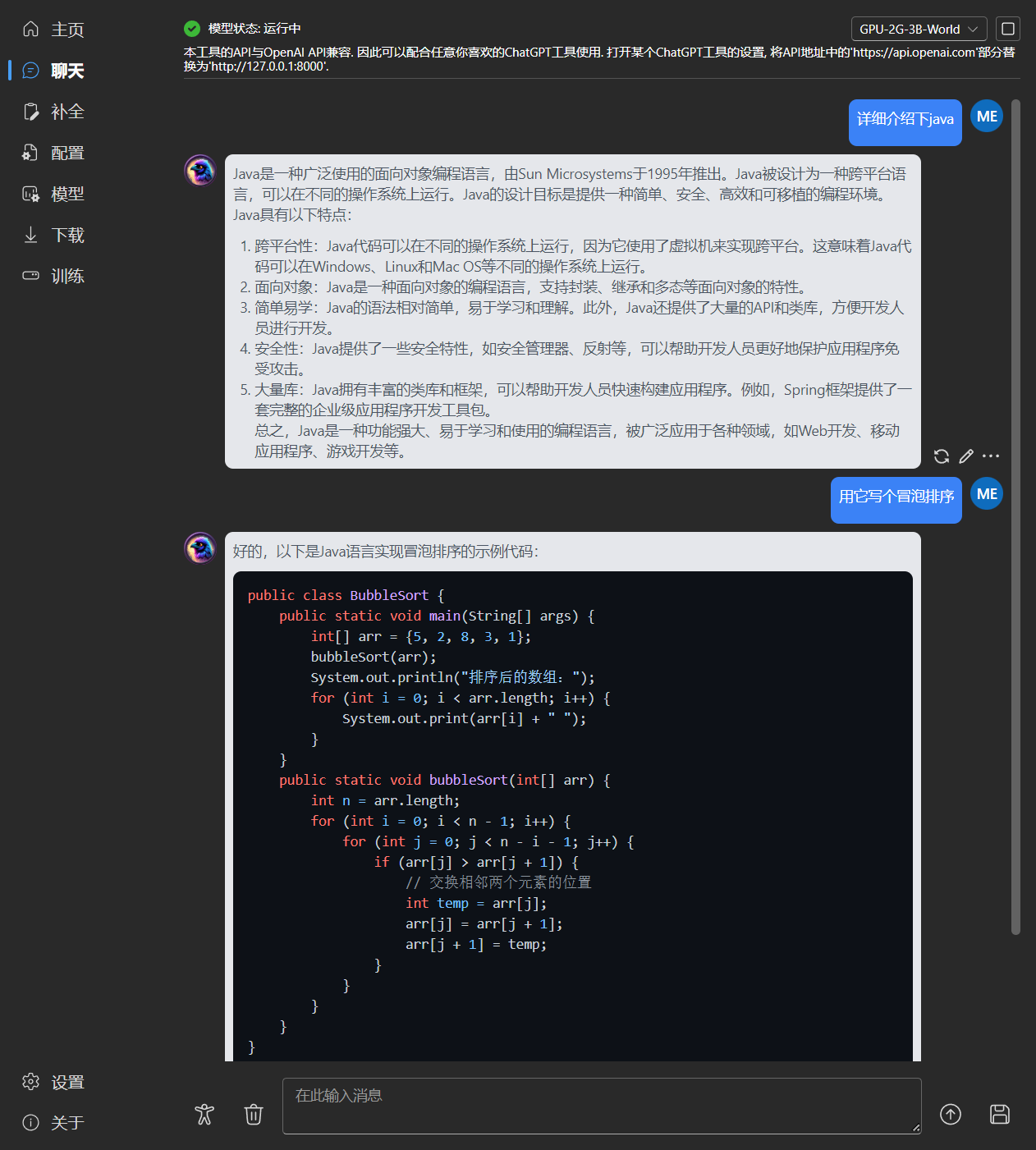

### 主页


### 聊天


### 续写


@ -7,11 +7,7 @@ import (

|

||||

"context"

|

||||

"errors"

|

||||

"io"

|

||||

"log"

|

||||

"net"

|

||||

"net/http"

|

||||

"net/http/httputil"

|

||||

"net/url"

|

||||

"os"

|

||||

"os/exec"

|

||||

"path/filepath"

|

||||

@ -31,7 +27,6 @@ type App struct {

|

||||

HasConfigData bool

|

||||

ConfigData map[string]any

|

||||

Dev bool

|

||||

proxyPort int

|

||||

exDir string

|

||||

cmdPrefix string

|

||||

}

|

||||

@ -41,63 +36,6 @@ func NewApp() *App {

|

||||

return &App{}

|

||||

}

|

||||

|

||||

func (a *App) newFetchProxy() {

|

||||

go func() {

|

||||

handler := func(w http.ResponseWriter, r *http.Request) {

|

||||

if r.Method == "OPTIONS" {

|

||||

w.Header().Set("Access-Control-Allow-Methods", "GET, POST, OPTIONS")

|

||||

w.Header().Set("Access-Control-Allow-Headers", "*")

|

||||

w.Header().Set("Access-Control-Allow-Origin", "*")

|

||||

return

|

||||

}

|

||||

proxy := &httputil.ReverseProxy{

|

||||

ModifyResponse: func(res *http.Response) error {

|

||||

res.Header.Set("Access-Control-Allow-Origin", "*")

|

||||

return nil

|

||||

},

|

||||

Director: func(req *http.Request) {

|

||||

realTarget := req.Header.Get("Real-Target")

|

||||

if realTarget != "" {

|

||||

realTarget, err := url.PathUnescape(realTarget)

|

||||

if err != nil {

|

||||

log.Printf("Error decoding target URL: %v\n", err)

|

||||

return

|

||||

}

|

||||

target, err := url.Parse(realTarget)

|

||||

if err != nil {

|

||||

log.Printf("Error parsing target URL: %v\n", err)

|

||||

return

|

||||

}

|

||||

req.Header.Set("Accept", "*/*")

|

||||

req.Header.Del("Origin")

|

||||

req.Header.Del("Referer")

|

||||

req.Header.Del("Real-Target")

|

||||

req.Header.Del("Sec-Fetch-Dest")

|

||||

req.Header.Del("Sec-Fetch-Mode")

|

||||

req.Header.Del("Sec-Fetch-Site")

|

||||

req.URL.Scheme = target.Scheme

|

||||

req.URL.Host = target.Host

|

||||

req.URL.Path = target.Path

|

||||

req.URL.RawQuery = url.PathEscape(target.RawQuery)

|

||||

log.Println("Proxying to", realTarget)

|

||||

} else {

|

||||

log.Println("Real-Target header is missing")

|

||||

}

|

||||

},

|

||||

}

|

||||

proxy.ServeHTTP(w, r)

|

||||

}

|

||||

http.HandleFunc("/", handler)

|

||||

listener, err := net.Listen("tcp", "127.0.0.1:0")

|

||||

if err != nil {

|

||||

return

|

||||

}

|

||||

a.proxyPort = listener.Addr().(*net.TCPAddr).Port

|

||||

|

||||

http.Serve(listener, nil)

|

||||

}()

|

||||

}
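Note on `newFetchProxy`: the handler above reads the destination from a `Real-Target` request header, percent-decodes it, strips browser-specific headers, and points the request at the decoded URL. The following is a minimal Python sketch of that rewrite step only, not the project's code. The function name `rewrite_request` and the plain-dict header model are assumptions made for illustration; unlike Go's `http.Header`, a plain dict is case-sensitive, and the sketch passes the query string through unchanged rather than re-escaping it.

```python
from urllib.parse import unquote, urlsplit

# Headers the Go handler deletes before forwarding (taken from the diff above).
STRIPPED_HEADERS = (
    "Origin",
    "Referer",
    "Real-Target",
    "Sec-Fetch-Dest",
    "Sec-Fetch-Mode",
    "Sec-Fetch-Site",
)


def rewrite_request(headers):
    """Return (scheme, host, path, query, forwarded_headers), or None.

    Hypothetical helper: `headers` is a plain dict standing in for the
    incoming request headers.
    """
    real_target = headers.get("Real-Target", "")
    if not real_target:
        # Mirrors the "Real-Target header is missing" branch.
        return None
    # unquote() decodes %XX escapes and leaves '+' alone, like url.PathUnescape.
    target = urlsplit(unquote(real_target))
    forwarded = {k: v for k, v in headers.items() if k not in STRIPPED_HEADERS}
    forwarded["Accept"] = "*/*"
    return target.scheme, target.netloc, target.path, target.query, forwarded
```

A caller would percent-encode the real URL into the `Real-Target` header and send the request to the local proxy port.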

|

||||

|

||||

// startup is called when the app starts. The context is saved

|

||||

// so we can call the runtime methods

|

||||

func (a *App) OnStartup(ctx context.Context) {

|

||||

@ -125,7 +63,6 @@ func (a *App) OnStartup(ctx context.Context) {

|

||||

os.Chmod(a.exDir+"backend-rust/web-rwkv-converter", 0777)

|

||||

os.Mkdir(a.exDir+"models", os.ModePerm)

|

||||

os.Mkdir(a.exDir+"lora-models", os.ModePerm)

|

||||

os.Mkdir(a.exDir+"state-models", os.ModePerm)

|

||||

os.Mkdir(a.exDir+"finetune/json2binidx_tool/data", os.ModePerm)

|

||||

trainLogPath := "lora-models/train_log.txt"

|

||||

if !a.FileExists(trainLogPath) {

|

||||

@ -139,7 +76,6 @@ func (a *App) OnStartup(ctx context.Context) {

|

||||

a.midiLoop()

|

||||

a.watchFs()

|

||||

a.monitorHardware()

|

||||

a.newFetchProxy()

|

||||

}

|

||||

|

||||

func (a *App) OnBeforeClose(ctx context.Context) bool {

|

||||

@ -152,9 +88,8 @@ func (a *App) OnBeforeClose(ctx context.Context) bool {

|

||||

func (a *App) watchFs() {

|

||||

watcher, err := fsnotify.NewWatcher()

|

||||

if err == nil {

|

||||

watcher.Add(a.exDir + "./models")

|

||||

watcher.Add(a.exDir + "./lora-models")

|

||||

watcher.Add(a.exDir + "./state-models")

|

||||

watcher.Add(a.exDir + "./models")

|

||||

go func() {

|

||||

for {

|

||||

select {

|

||||

@ -304,7 +239,3 @@ func (a *App) RestartApp() error {

|

||||

func (a *App) GetPlatform() string {

|

||||

return runtime.GOOS

|

||||

}

|

||||

|

||||

func (a *App) GetProxyPort() int {

|

||||

return a.proxyPort

|

||||

}

|

||||

|

||||

@ -28,7 +28,7 @@ func (a *App) StartServer(python string, port int, host string, webui bool, rwkv

|

||||

args = append(args, "--webui")

|

||||

}

|

||||

if rwkvBeta {

|

||||

// args = append(args, "--rwkv-beta")

|

||||

args = append(args, "--rwkv-beta")

|

||||

}

|

||||

if rwkvcpp {

|

||||

args = append(args, "--rwkv.cpp")

|

||||

@ -215,12 +215,8 @@ func (a *App) DepCheck(python string) error {

|

||||

|

||||

func (a *App) InstallPyDep(python string, cnMirror bool) (string, error) {

|

||||

var err error

|

||||

torchWhlUrl := "torch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 --index-url https://download.pytorch.org/whl/cu117"

|

||||

if python == "" {

|

||||

python, err = GetPython()

|

||||

if cnMirror && python == "py310/python.exe" {

|

||||

torchWhlUrl = "https://mirrors.aliyun.com/pytorch-wheels/cu117/torch-1.13.1+cu117-cp310-cp310-win_amd64.whl"

|

||||

}

|

||||

if runtime.GOOS == "windows" {

|

||||

python = `"%CD%/` + python + `"`

|

||||

}

|

||||

@ -231,12 +227,12 @@ func (a *App) InstallPyDep(python string, cnMirror bool) (string, error) {

|

||||

|

||||

if runtime.GOOS == "windows" {

|

||||

ChangeFileLine("./py310/python310._pth", 3, "Lib\\site-packages")

|

||||

installScript := python + " ./backend-python/get-pip.py -i https://mirrors.aliyun.com/pypi/simple --no-warn-script-location\n" +

|

||||

python + " -m pip install " + torchWhlUrl + " --no-warn-script-location\n" +

|

||||

python + " -m pip install -r ./backend-python/requirements.txt -i https://mirrors.aliyun.com/pypi/simple --no-warn-script-location\n" +

|

||||

installScript := python + " ./backend-python/get-pip.py -i https://pypi.tuna.tsinghua.edu.cn/simple --no-warn-script-location\n" +

|

||||

python + " -m pip install torch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 --index-url https://download.pytorch.org/whl/cu117 --no-warn-script-location\n" +

|

||||

python + " -m pip install -r ./backend-python/requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple --no-warn-script-location\n" +

|

||||

"exit"

|

||||

if !cnMirror {

|

||||

installScript = strings.Replace(installScript, " -i https://mirrors.aliyun.com/pypi/simple", "", -1)

|

||||

installScript = strings.Replace(installScript, " -i https://pypi.tuna.tsinghua.edu.cn/simple", "", -1)

|

||||

}

|

||||

err = os.WriteFile(a.exDir+"install-py-dep.bat", []byte(installScript), 0644)

|

||||

if err != nil {

|

||||

@ -246,7 +242,7 @@ func (a *App) InstallPyDep(python string, cnMirror bool) (string, error) {

|

||||

}

|

||||

|

||||

if cnMirror {

|

||||

return Cmd(python, "-m", "pip", "install", "-r", "./backend-python/requirements_without_cyac.txt", "-i", "https://mirrors.aliyun.com/pypi/simple")

|

||||

return Cmd(python, "-m", "pip", "install", "-r", "./backend-python/requirements_without_cyac.txt", "-i", "https://pypi.tuna.tsinghua.edu.cn/simple")

|

||||

} else {

|

||||

return Cmd(python, "-m", "pip", "install", "-r", "./backend-python/requirements_without_cyac.txt")

|

||||

}

|

||||

|

||||

2

backend-python/convert_safetensors.py

vendored


@ -102,8 +102,6 @@ if __name__ == "__main__":

|

||||

"time_mix_w2",

|

||||

"time_decay_w1",

|

||||

"time_decay_w2",

|

||||

"time_state",

|

||||

"lora.0",

|

||||

],

|

||||

)

|

||||

print(f"Saved to {args.output}")

|

||||

|

||||

@ -1,8 +1,3 @@

|

||||

import setuptools

|

||||

|

||||

if setuptools.__version__ >= "70.0.0":

|

||||

raise ImportError("setuptools>=70.0.0 is not supported")

|

||||

|

||||

import multipart

|

||||

import fitz

|

||||

import safetensors

|

||||

|

||||
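Note on the `setuptools.__version__ >= "70.0.0"` check in this hunk: comparing version strings with `>=` is lexicographic, so `"9.0.0" >= "70.0.0"` evaluates to `True` even though 9.0.0 is the older release. A minimal sketch of a numeric comparison follows. The helper names `parse_version` and `is_supported_setuptools` are hypothetical, and the parser deliberately ignores pre-release and post-release suffixes.

```python
def parse_version(version):
    """Turn '69.5.1' into (69, 5, 1), stopping at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    # Pad short versions such as '70' to three components: (70, 0, 0).
    return tuple(parts + [0] * (3 - len(parts)))


def is_supported_setuptools(version, first_unsupported="70.0.0"):
    """True when `version` is numerically older than `first_unsupported`."""
    return parse_version(version) < parse_version(first_unsupported)
```

With this comparison, `is_supported_setuptools("9.0.0")` is accepted while `"70.0.0"` and later are rejected.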

@ -27,6 +27,11 @@ def get_args(args: Union[Sequence[str], None] = None):

|

||||

action="store_true",

|

||||

help="whether to enable WebUI (default: False)",

|

||||

)

|

||||

group.add_argument(

|

||||

"--rwkv-beta",

|

||||

action="store_true",

|

||||

help="whether to use rwkv-beta (default: False)",

|

||||

)

|

||||

group.add_argument(

|

||||

"--rwkv.cpp",

|

||||

action="store_true",

|

||||

|

||||

@ -1,8 +1,7 @@

|

||||

torch

|

||||

torchvision

|

||||

torchaudio

|

||||

setuptools==69.5.1

|

||||

rwkv==0.8.26

|

||||

rwkv==0.8.25

|

||||

langchain==0.0.322

|

||||

fastapi==0.109.1

|

||||

uvicorn==0.23.2

|

||||

|

||||

@ -1,8 +1,7 @@

|

||||

torch

|

||||

torchvision

|

||||

torchaudio

|

||||

setuptools==69.5.1

|

||||

rwkv==0.8.26

|

||||

rwkv==0.8.25

|

||||

langchain==0.0.322

|

||||

fastapi==0.109.1

|

||||

uvicorn==0.23.2

|

||||

|

||||

@ -4,7 +4,6 @@ from threading import Lock

|

||||

from typing import List, Union

|

||||

from enum import Enum

|

||||

import base64

|

||||

import time

|

||||

|

||||

from fastapi import APIRouter, Request, status, HTTPException

|

||||

from sse_starlette.sse import EventSourceResponse

|

||||

@ -54,11 +53,8 @@ class ChatCompletionBody(ModelConfigBody):

|

||||

assistant_name: Union[str, None] = Field(

|

||||

None, description="Internal assistant name", min_length=1

|

||||

)

|

||||

system_name: Union[str, None] = Field(

|

||||

None, description="Internal system name", min_length=1

|

||||

)

|

||||

presystem: bool = Field(

|

||||

False, description="Whether to insert default system prompt at the beginning"

|

||||

True, description="Whether to insert default system prompt at the beginning"

|

||||

)

|

||||

|

||||

model_config = {

|

||||

@ -72,7 +68,6 @@ class ChatCompletionBody(ModelConfigBody):

|

||||

"stop": None,

|

||||

"user_name": None,

|

||||

"assistant_name": None,

|

||||

"system_name": None,

|

||||

"presystem": True,

|

||||

"max_tokens": 1000,

|

||||

"temperature": 1,

|

||||

@ -152,13 +147,10 @@ async def eval_rwkv(

|

||||

print(get_rwkv_config(model))

|

||||

|

||||

response, prompt_tokens, completion_tokens = "", 0, 0

|

||||

completion_start_time = None

|

||||

for response, delta, prompt_tokens, completion_tokens in model.generate(

|

||||

prompt,

|

||||

stop=stop,

|

||||

):

|

||||

if not completion_start_time:

|

||||

completion_start_time = time.time()

|

||||

if await request.is_disconnected():

|

||||

break

|

||||

if stream:

|

||||

@ -171,15 +163,12 @@ async def eval_rwkv(

|

||||

),

|

||||

# "response": response,

|

||||

"model": model.name,

|

||||

"id": "chatcmpl-123",

|

||||

"system_fingerprint": "fp_44709d6fcb",

|

||||

"choices": [

|

||||

(

|

||||

{

|

||||

"delta": {"role":Role.Assistant.value,"content": delta},

|

||||

"delta": {"content": delta},

|

||||

"index": 0,

|

||||

"finish_reason": None,

|

||||

"logprobs":None

|

||||

}

|

||||

if chat_mode

|

||||

else {

|

||||

@ -193,13 +182,6 @@ async def eval_rwkv(

|

||||

)

|

||||

# torch_gc()

|

||||

requests_num = requests_num - 1

|

||||

completion_end_time = time.time()

|

||||

completion_interval = completion_end_time - completion_start_time

|

||||

tps = 0

|

||||

if completion_interval > 0:

|

||||

tps = completion_tokens / completion_interval

|

||||

print(f"Generation TPS: {tps:.2f}")

|

||||

|

||||

if await request.is_disconnected():

|

||||

print(f"{request.client} Stop Waiting")

|

||||

quick_log(

|

||||
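Note on the tokens-per-second lines in this hunk: timing starts at the first yielded item and ends after the loop. As shown, if the generator yields nothing, `completion_start_time` is still `None` when the interval is computed, and the subtraction would raise a `TypeError`. The sketch below illustrates the same measurement with that case guarded. `measure_tps` and its injectable `clock` parameter are assumptions for illustration, not part of the backend.

```python
import time


def measure_tps(token_stream, clock=time.perf_counter):
    """Consume an iterable of tokens and return (token_count, tokens_per_second).

    The clock starts when the first token arrives, so prompt-processing
    time before the first token is excluded, as in the diffed code.
    """
    start = None
    count = 0
    for _ in token_stream:
        if start is None:
            start = clock()
        count += 1
    if start is None:
        # Nothing was generated: avoid subtracting from None.
        return 0, 0.0
    interval = clock() - start
    tps = count / interval if interval > 0 else 0.0
    return count, tps
```

Passing a fake clock makes the function deterministic in tests; in normal use the default `time.perf_counter` is sufficient.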

@ -221,14 +203,11 @@ async def eval_rwkv(

|

||||

),

|

||||

# "response": response,

|

||||

"model": model.name,

|

||||

"id": "chatcmpl-123",

|

||||

"system_fingerprint": "fp_44709d6fcb",

|

||||

"choices": [

|

||||

(

|

||||

{

|

||||

"delta": {},

|

||||

"index": 0,

|

||||

"logprobs": None,

|

||||

"finish_reason": "stop",

|

||||

}

|

||||

if chat_mode

|

||||

@ -273,9 +252,20 @@ async def eval_rwkv(

|

||||

}

|

||||

|

||||

|

||||

def chat_template_old(

|

||||

model: TextRWKV, body: ChatCompletionBody, interface: str, user: str, bot: str

|

||||

):

|

||||

@router.post("/v1/chat/completions", tags=["Completions"])

|

||||

@router.post("/chat/completions", tags=["Completions"])

|

||||

async def chat_completions(body: ChatCompletionBody, request: Request):

|

||||

model: TextRWKV = global_var.get(global_var.Model)

|

||||

if model is None:

|

||||

raise HTTPException(status.HTTP_400_BAD_REQUEST, "model not loaded")

|

||||

|

||||

if body.messages is None or body.messages == []:

|

||||

raise HTTPException(status.HTTP_400_BAD_REQUEST, "messages not found")

|

||||

|

||||

interface = model.interface

|

||||

user = model.user if body.user_name is None else body.user_name

|

||||

bot = model.bot if body.assistant_name is None else body.assistant_name

|

||||

|

||||

is_raven = model.rwkv_type == RWKVType.Raven

|

||||

|

||||

completion_text: str = ""

|

||||

@ -344,53 +334,6 @@ The following is a coherent verbose detailed conversation between a girl named {

|

||||

completion_text += append_message + "\n\n"

|

||||

completion_text += f"{bot}{interface}"

|

||||

|

||||

return completion_text

|

||||

|

||||

|

||||

def chat_template(

|

||||

model: TextRWKV, body: ChatCompletionBody, interface: str, user: str, bot: str

|

||||

):

|

||||

completion_text: str = ""

|

||||

if body.presystem:

|

||||

completion_text = (

|

||||

f"{user}{interface} hi\n\n{bot}{interface} Hi. "

|

||||

+ "I am your assistant and I will provide expert full response in full details. Please feel free to ask any question and I will always answer it.\n\n"

|

||||

)

|

||||

|

||||

system = "System" if body.system_name is None else body.system_name

|

||||

for message in body.messages:

|

||||

append_message: str = ""

|

||||

if message.role == Role.User:

|

||||

append_message = f"{user}{interface} " + message.content

|

||||

elif message.role == Role.Assistant:

|

||||

append_message = f"{bot}{interface} " + message.content

|

||||

elif message.role == Role.System:

|

||||

append_message = f"{system}{interface} " + message.content

|

||||

completion_text += append_message + "\n\n"

|

||||

completion_text += f"{bot}{interface}"

|

||||

|

||||

return completion_text

|

||||

|

||||

|

||||
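Note on `chat_template`: the function above builds the prompt by prefixing each message with a role name and the `interface` separator, joining messages with blank lines, and ending with the bot prefix so the model continues as the assistant. A minimal standalone sketch of that layout follows. `build_prompt`, the dict-based messages, and the default names `User`, `Assistant`, and `:` are assumptions for illustration; the real values come from the loaded model and the request body.

```python
def build_prompt(messages, user="User", bot="Assistant", system="System",
                 interface=":", presystem_text=""):
    """Lay out chat messages the way the diffed chat_template does.

    `messages` is a list of {"role": ..., "content": ...} dicts.
    """
    names = {"user": user, "assistant": bot, "system": system}
    text = presystem_text
    for message in messages:
        name = names.get(message["role"])
        # Unknown roles contribute an empty line, as in the diffed code.
        line = f"{name}{interface} " + message["content"] if name else ""
        text += line + "\n\n"
    # Trailing bot prefix: generation continues from here.
    return text + f"{bot}{interface}"
```

For a single user message `Hello`, this produces `User: Hello`, a blank line, and then `Assistant:`.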

@router.post("/v1/chat/completions", tags=["Completions"])

|

||||

@router.post("/chat/completions", tags=["Completions"])

|

||||

async def chat_completions(body: ChatCompletionBody, request: Request):

|

||||

model: TextRWKV = global_var.get(global_var.Model)

|

||||

if model is None:

|

||||

raise HTTPException(status.HTTP_400_BAD_REQUEST, "model not loaded")

|

||||

|

||||

if body.messages is None or body.messages == []:

|

||||

raise HTTPException(status.HTTP_400_BAD_REQUEST, "messages not found")

|

||||

|

||||

interface = model.interface

|

||||

user = model.user if body.user_name is None else body.user_name

|

||||

bot = model.bot if body.assistant_name is None else body.assistant_name

|

||||

|

||||

if model.version < 5:

|

||||

completion_text = chat_template_old(model, body, interface, user, bot)

|

||||

else:

|

||||

completion_text = chat_template(model, body, interface, user, bot)

|

||||

|

||||

user_code = model.pipeline.decode([model.pipeline.encode(user)[0]])

|

||||

bot_code = model.pipeline.decode([model.pipeline.encode(bot)[0]])

|

||||

if type(body.stop) == str:

|

||||

@ -399,9 +342,9 @@ async def chat_completions(body: ChatCompletionBody, request: Request):

|

||||

body.stop.append(f"\n\n{user_code}")

|

||||

body.stop.append(f"\n\n{bot_code}")

|

||||

elif body.stop is None:

|

||||

body.stop = default_stop + [f"\n\n{user_code}", f"\n\n{bot_code}"]

|

||||

# if not body.presystem:

|

||||

# body.stop.append("\n\n")

|

||||

body.stop = default_stop

|

||||

if not body.presystem:

|

||||

body.stop.append("\n\n")

|

||||

|

||||

if body.stream:

|

||||

return EventSourceResponse(

|

||||

|

||||
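Note on the stop-sequence handling in this hunk: one side assigns `body.stop = default_stop` and then appends to it. If `default_stop` is a module-level list, that append mutates the shared default for every later request; the other side avoids this by building a new list with `default_stop + [...]`. The sketch below shows the copy-based variant. `normalize_stop` is a hypothetical helper, the string branch is an assumption because it is not visible in the hunk, and the `default_stop` contents used with it are placeholders.

```python
def normalize_stop(stop, user_code, bot_code, default_stop):
    """Return a fresh list of stop sequences without mutating any input."""
    extra = [f"\n\n{user_code}", f"\n\n{bot_code}"]
    if stop is None:
        # Copy the defaults so repeated requests do not grow the shared list.
        return list(default_stop) + extra
    if isinstance(stop, str):
        return [stop] + extra
    return list(stop) + extra
```

Because every branch returns a new list, the caller can append request-specific entries afterwards without side effects.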

@ -120,11 +120,6 @@ def update_config(body: ModelConfigBody):

|

||||

model_config = ModelConfigBody()

|

||||

global_var.set(global_var.Model_Config, model_config)

|

||||

merge_model(model_config, body)

|

||||

exception = load_rwkv_state(

|

||||

global_var.get(global_var.Model), model_config.state, True

|

||||

)

|

||||

if exception is not None:

|

||||

raise exception

|

||||

print("Updated Model Config:", model_config)

|

||||

|

||||

return "success"

|

||||

|

||||

@ -96,9 +96,7 @@ def copy_tensor_to_cpu(tensors):

|

||||

elif tensors_type == np.ndarray: # rwkv.cpp

|

||||

copied = tensors

|

||||

else: # WebGPU state

|

||||

model = global_var.get(global_var.Model)

|

||||

if model:

|

||||

copied = model.model.model.back_state()

|

||||

copied = tensors.back()

|

||||

|

||||

return copied, devices

|

||||

|

||||

@ -178,19 +176,6 @@ def reset_state():

|

||||

return "success"

|

||||

|

||||

|

||||

def force_reset_state():

|

||||

global trie, dtrie

|

||||

|

||||

if trie is None:

|

||||

return

|

||||

|

||||

import cyac

|

||||

|

||||

trie = cyac.Trie()

|

||||

dtrie = {}

|

||||

gc.collect()

|

||||

|

||||

|

||||

class LongestPrefixStateBody(BaseModel):

|

||||

prompt: str

|

||||

|

||||

@ -240,14 +225,11 @@ def longest_prefix_state(body: LongestPrefixStateBody, request: Request):

|

||||

state: Union[Any, None] = v["state"]

|

||||

logits: Union[Any, None] = v["logits"]

|

||||

|

||||

state_type = type(state)

|

||||

if state_type == list and hasattr(state[0], "device"): # torch

|

||||

if type(state) == list and hasattr(state[0], "device"): # torch

|

||||

state = [

|

||||

(

|

||||

tensor.to(devices[i])

|

||||

if devices[i] != torch.device("cpu")

|

||||

else tensor.clone()

|

||||

)

|

||||

tensor.to(devices[i])

|

||||

if devices[i] != torch.device("cpu")

|

||||

else tensor.clone()

|

||||

for i, tensor in enumerate(state)

|

||||

]

|

||||

logits = (

|

||||

@ -255,9 +237,7 @@ def longest_prefix_state(body: LongestPrefixStateBody, request: Request):

|

||||

if logits_device != torch.device("cpu")

|

||||

else logits.clone()

|

||||

)

|

||||

elif state_type == np.ndarray: # rwkv.cpp

|

||||

logits = np.copy(logits)

|

||||

else: # WebGPU

|

||||

else: # rwkv.cpp, WebGPU

|

||||

logits = np.copy(logits)

|

||||

|

||||

quick_log(request, body, "Hit:\n" + prompt)

|

||||

|

||||

124

backend-python/rwkv_pip/beta/cuda/att_one.cu

vendored

Normal file


@ -0,0 +1,124 @@

#include "ATen/ATen.h"
#include <cuda_fp16.h>
#include <cuda_runtime.h>
#include <torch/extension.h>

#include "element_wise.h"
#include "util.h"

// Equivalent Python code:
// ww = t_first + k
// p = torch.maximum(pp, ww)
// e1 = torch.exp(pp - p)
// e2 = torch.exp(ww - p)
// wkv = ((e1 * aa + e2 * v) / (e1 * bb + e2)).to(dtype=x.dtype)
// ww = t_decay + pp
// p = torch.maximum(ww, k)
// e1 = torch.exp(ww - p)
// e2 = torch.exp(k - p)
// t1 = e1 * aa + e2 * v
// t2 = e1 * bb + e2
// r = r * wkv
// return t1, t2, p, r
struct WkvForwardOne {
  const float *t_first;
  const float *k;
  const float *pp;
  const float *aa;
  const float *bb;
  const float *t_decay;
  const float *v;
  /* out */ float *t1;
  /* out */ float *t2;
  /* out */ float *p;
  /* in & out */ half *r;

  __device__ void operator()(int i) const {
    float ww = t_first[i] + k[i];
    float pp_ = pp[i];
    float p_ = (pp_ > ww) ? pp_ : ww;
    float e1 = expf(pp_ - p_);
    float e2 = expf(ww - p_);
    float aa_ = aa[i];
    float bb_ = bb[i];
    float v_ = v[i];
    r[i] = __hmul(r[i], __float2half(((e1 * aa_ + e2 * v_) / (e1 * bb_ + e2))));
    ww = t_decay[i] + pp_;
    float k_ = k[i];
    p_ = (ww > k_) ? ww : k_;
    e1 = expf(ww - p_);
    e2 = expf(k_ - p_);
    t1[i] = e1 * aa_ + e2 * v_;
    t2[i] = e1 * bb_ + e2;
    p[i] = p_;
  }
};

/*
   Equivalent Python code:
   kx = xx * k_mix + sx * (1 - k_mix)
   vx = xx * v_mix + sx * (1 - v_mix)
   rx = xx * r_mix + sx * (1 - r_mix)
*/

struct Mix {
  const half *xx;
  const half *sx;
  const half *k_mix;
  const half *v_mix;
  const half *r_mix;
  /* out */ half *kx;
  /* out */ half *vx;
  /* out */ half *rx;

  __device__ void operator()(int i) const {
    half xx_ = xx[i];
    half sx_ = sx[i];
    half k_mix_ = k_mix[i];
    half v_mix_ = v_mix[i];
    half r_mix_ = r_mix[i];
    kx[i] = __hadd(__hmul(xx_, k_mix_),
                   __hmul(sx_, __hsub(__float2half(1), k_mix_)));
    vx[i] = __hadd(__hmul(xx_, v_mix_),
                   __hmul(sx_, __hsub(__float2half(1), v_mix_)));
    rx[i] = __hadd(__hmul(xx_, r_mix_),
                   __hmul(sx_, __hsub(__float2half(1), r_mix_)));
  }
};

using torch::Tensor;

void gemm_fp16_cublas_tensor(Tensor a, Tensor b, Tensor c);

Tensor att_one(Tensor x, Tensor ln_w, Tensor ln_b, Tensor sx, Tensor k_mix,
               Tensor v_mix, Tensor r_mix, Tensor kw,
               /* imm */ Tensor kx, Tensor vw, /* imm */ Tensor vx, Tensor rw,
               /* imm */ Tensor rx, Tensor ow, Tensor t_first,
               /* imm */ Tensor k, Tensor pp, Tensor ww, Tensor aa, Tensor bb,
               Tensor t_decay, /* imm */ Tensor v, /* in & out */ Tensor r,
               /* out */ Tensor x_plus_out, /* out */ Tensor t1,
               /* out */ Tensor t2, /* out */ Tensor p) {
  Tensor xx = at::layer_norm(x, {x.size(-1)}, ln_w, ln_b);
  element_wise(Mix{data_ptr<half>(xx), data_ptr<half>(sx),
                   data_ptr<half>(k_mix), data_ptr<half>(v_mix),
                   data_ptr<half>(r_mix), data_ptr<half>(kx),
                   data_ptr<half>(vx), data_ptr<half>(rx)},
               x.numel());

  gemm_fp16_cublas_tensor(kx, kw, k);
  gemm_fp16_cublas_tensor(vx, vw, v);
  gemm_fp16_cublas_tensor(rx, rw, r);
  at::sigmoid_(r);

  element_wise(WkvForwardOne{data_ptr<float>(t_first), data_ptr<float>(k),
                             data_ptr<float>(pp), data_ptr<float>(aa),
                             data_ptr<float>(bb), data_ptr<float>(t_decay),
                             data_ptr<float>(v), data_ptr<float>(t1),
                             data_ptr<float>(t2), data_ptr<float>(p),
                             data_ptr<half>(r)},
               x.numel());

  gemm_fp16_cublas_tensor(r, ow, x_plus_out);
  x_plus_out += x;
  return xx;
}

|

||||
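The "Equivalent Python code" comment above `WkvForwardOne` describes a single-token WKV update that keeps a running maximum exponent so that `exp` never overflows. As a rough illustration only, the same arithmetic can be written per channel in plain Python. The function name `wkv_forward_one` and the scalar (one channel at a time) calling convention are assumptions made for this sketch and are not part of the commit; the half-precision cast of `wkv` is also omitted.

```python
import math


def wkv_forward_one(t_first, t_decay, k, v, r, aa, bb, pp):
    """One channel of the single-token WKV step (hypothetical helper).

    aa, bb are the running numerator and denominator, stored relative to
    the running maximum exponent pp.
    """
    # Output for the current token: shift by the larger exponent so that
    # both exp() arguments are <= 0.
    ww = t_first + k
    p = max(pp, ww)
    e1 = math.exp(pp - p)
    e2 = math.exp(ww - p)
    wkv = (e1 * aa + e2 * v) / (e1 * bb + e2)

    # State update: decay the old state, then fold in the current token.
    ww = t_decay + pp
    p = max(ww, k)
    e1 = math.exp(ww - p)
    e2 = math.exp(k - p)
    t1 = e1 * aa + e2 * v
    t2 = e1 * bb + e2
    return t1, t2, p, r * wkv


# k = 1000 would overflow a naive math.exp(k); the shifted form stays finite.
state = wkv_forward_one(0.5, -0.3, 1000.0, 2.0, 0.25, 0.0, 0.0, -1e30)
print(state)
```

Starting from an empty state (`aa = bb = 0` and a very negative `pp`), the first token's `wkv` reduces to `v` itself, which is a convenient sanity check when comparing against the kernel.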

109

backend-python/rwkv_pip/beta/cuda/att_one_v5.cu

vendored

Normal file


@ -0,0 +1,109 @@

#include "ATen/ATen.h"
#include <cuda_fp16.h>
#include <cuda_runtime.h>
#include <torch/extension.h>

#include "element_wise.h"
#include "util.h"

// Equivalent Python code:
// s1 = t_first * a + s
// s2 = a + t_decay * s
struct Fused1 {
  const float *t_first;
  const float *t_decay;
  const float *a;
  const float *s;
  const int32_t inner_size;
  /* out */ float *s1;
  /* out */ float *s2;

  __device__ void operator()(int i) const {
    const int j = i / inner_size;
    s1[i] = t_first[j] * a[i] + s[i];
    s2[i] = a[i] + t_decay[j] * s[i];
  }
};

/*
   Equivalent Python code:
   kx = xx * k_mix + sx * (1 - k_mix)
   vx = xx * v_mix + sx * (1 - v_mix)
   rx = xx * r_mix + sx * (1 - r_mix)
*/

struct Mix {
  const half *xx;
  const half *sx;
  const half *k_mix;
  const half *v_mix;
  const half *r_mix;
  /* out */ half *kx;
  /* out */ half *vx;
  /* out */ half *rx;

  __device__ void operator()(int i) const {
    half xx_ = xx[i];
    half sx_ = sx[i];
    half k_mix_ = k_mix[i];
    half v_mix_ = v_mix[i];
    half r_mix_ = r_mix[i];
    kx[i] = __hadd(__hmul(xx_, k_mix_),
                   __hmul(sx_, __hsub(__float2half(1), k_mix_)));
    vx[i] = __hadd(__hmul(xx_, v_mix_),
                   __hmul(sx_, __hsub(__float2half(1), v_mix_)));
    rx[i] = __hadd(__hmul(xx_, r_mix_),
                   __hmul(sx_, __hsub(__float2half(1), r_mix_)));
  }
};

using torch::Tensor;

void gemm_fp16_cublas_tensor(Tensor a, Tensor b, Tensor c);

Tensor att_one_v5(Tensor x, Tensor sx, Tensor s, Tensor ln_w, Tensor ln_b,
                  Tensor lx_w, Tensor lx_b, Tensor k_mix, Tensor v_mix,
                  Tensor r_mix, Tensor kw,
                  /* imm */ Tensor kx, Tensor vw, /* imm */ Tensor vx,
                  Tensor rw,
                  /* imm */ Tensor rx, Tensor ow, Tensor t_first,
                  /* imm */ Tensor k, Tensor t_decay, /* imm */ Tensor v,
                  /* imm */ Tensor r, /* imm */ Tensor s1,
                  /* out */ Tensor x_plus_out, /* out */ Tensor s2) {
  Tensor xx = at::layer_norm(x, {x.size(-1)}, ln_w, ln_b);
  element_wise(Mix{data_ptr<half>(xx), data_ptr<half>(sx),
                   data_ptr<half>(k_mix), data_ptr<half>(v_mix),
                   data_ptr<half>(r_mix), data_ptr<half>(kx),
                   data_ptr<half>(vx), data_ptr<half>(rx)},
               x.numel());

  int H = t_decay.size(0);
  int S = x.size(-1) / H;
  gemm_fp16_cublas_tensor(rx, rw, r);
  r = at::reshape(r, {H, 1, S});
  gemm_fp16_cublas_tensor(kx, kw, k);
  k = at::reshape(k, {H, S, 1});
  gemm_fp16_cublas_tensor(vx, vw, v);
  v = at::reshape(v, {H, 1, S});

  {
    Tensor a = at::matmul(k, v);

    // s1 = t_first * a + s
    // s2 = a + t_decay * s
    element_wise(Fused1{data_ptr<float>(t_first), data_ptr<float>(t_decay),
                        data_ptr<float>(a), data_ptr<float>(s),
                        static_cast<int32_t>(a.size(1) * a.size(2)),
                        data_ptr<float>(s1), data_ptr<float>(s2)},
                 a.numel());
  }

  Tensor out = at::matmul(r, s1);
  out = at::flatten(out);
  out = at::squeeze(at::group_norm(at::unsqueeze(out, 0), H, lx_w, lx_b), 0);
  out = at::_cast_Half(out);

  gemm_fp16_cublas_tensor(out, ow, x_plus_out);
  x_plus_out += x;
  return xx;
}

|

||||
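In `Fused1`, the per-head parameters `t_first` and `t_decay` are indexed with `j = i / inner_size`, which broadcasts one value per head over that head's flattened `S x S` block of `a` and `s`. The sketch below mimics that indexing on flat Python lists. The function name `fused1` and the list-based layout are assumptions for illustration, as is the premise that each parameter holds exactly one value per head.

```python
def fused1(t_first, t_decay, a, s, inner_size):
    """Flat-index version of s1 = t_first * a + s and s2 = a + t_decay * s.

    a and s are flattened [H, S, S] arrays; t_first and t_decay are assumed
    to hold one value per head (length H); inner_size is S * S.
    """
    s1 = [0.0] * len(a)
    s2 = [0.0] * len(a)
    for i in range(len(a)):
        j = i // inner_size  # head index, as in the CUDA functor
        s1[i] = t_first[j] * a[i] + s[i]
        s2[i] = a[i] + t_decay[j] * s[i]
    return s1, s2


# Two heads with head size 2, so each head owns inner_size = 4 elements.
a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
s = [10.0] * 8
s1, s2 = fused1([2.0, 3.0], [0.5, 0.25], a, s, inner_size=4)
print(s1)
print(s2)
```

Fusing both outputs into one pass means `a` and `s` are each read once per element, which is presumably why the kernel computes `s1` and `s2` together.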

178

backend-python/rwkv_pip/beta/cuda/att_seq.cu

vendored

Normal file


@ -0,0 +1,178 @@

#include "ATen/ATen.h"
#include <cuda_fp16.h>
#include <cuda_runtime.h>
#include <torch/extension.h>

#include "util.h"
#include "element_wise.h"

using torch::Tensor;

void gemm_fp16_cublas(const void *a, const void *b, void *c, int m,
                      int n, int k, bool output_fp32);

// based on `kernel_wkv_forward`, fusing more operations
__global__ void kernel_wkv_forward_new(
    const int B, const int T, const int C, const float *__restrict__ const _w,
    const float *__restrict__ const _u, const float *__restrict__ const _k,
    const float *__restrict__ const _v, const half *__restrict__ const r,
    half *__restrict__ const _y, float *__restrict__ const _aa,
    float *__restrict__ const _bb, float *__restrict__ const _pp) {
  const int idx = blockIdx.x * blockDim.x + threadIdx.x;
  const int _b = idx / C;
  const int _c = idx % C;
  const int _offset = _b * T * C + _c;
  const int _state_offset = _b * C + _c;

  float u = _u[_c];
  float w = _w[_c];
  const float *__restrict__ const k = _k + _offset;
  const float *__restrict__ const v = _v + _offset;
  half *__restrict__ const y = _y + _offset;

  float aa = _aa[_state_offset];
  float bb = _bb[_state_offset];
  float pp = _pp[_state_offset];
  for (int i = 0; i < T; i++) {
    const int ii = i * C;
    const float kk = k[ii];
    const float vv = v[ii];
    float ww = u + kk;
    float p = max(pp, ww);
    float e1 = exp(pp - p);
    float e2 = exp(ww - p);
    y[ii] = __float2half((e1 * aa + e2 * vv) / (e1 * bb + e2));
    ww = w + pp;
    p = max(ww, kk);
    e1 = exp(ww - p);
    e2 = exp(kk - p);
    aa = e1 * aa + e2 * vv;
    bb = e1 * bb + e2;
    pp = p;
  }
  _aa[_state_offset] = aa;
  _bb[_state_offset] = bb;
  _pp[_state_offset] = pp;
}

void cuda_wkv_forward_new(int B, int T, int C, float *w, float *u, float *k,
                          float *v, half *r, half *y, float *aa, float *bb,
                          float *pp) {
  dim3 threadsPerBlock(min(C, 32));
  assert(B * C % threadsPerBlock.x == 0);
  dim3 numBlocks(B * C / threadsPerBlock.x);
  kernel_wkv_forward_new<<<numBlocks, threadsPerBlock>>>(B, T, C, w, u, k, v, r,
                                                         y, aa, bb, pp);
}

__global__ void _att_mix(const half *xx, const half *sx, const half *k_mix,
                         const half *v_mix, const half *r_mix,
                         const int outer_size, const int inner_size, half *kx,
                         half *vx, half *rx) {
  for (int idx2 = blockIdx.x * blockDim.x + threadIdx.x; idx2 < inner_size;
       idx2 += blockDim.x * gridDim.x) {
    half k_mix_ = k_mix[idx2];
    half v_mix_ = v_mix[idx2];
    half r_mix_ = r_mix[idx2];
    for (int row = 0; row < outer_size; ++row) {
      int idx1 = row * inner_size + idx2;
      half xx_ = xx[idx1];
      half sx_ = sx[idx1];
      kx[idx1] = __hadd(__hmul(xx_, k_mix_),
                        __hmul(sx_, __hsub(__float2half(1), k_mix_)));
      vx[idx1] = __hadd(__hmul(xx_, v_mix_),
                        __hmul(sx_, __hsub(__float2half(1), v_mix_)));
      rx[idx1] = __hadd(__hmul(xx_, r_mix_),
                        __hmul(sx_, __hsub(__float2half(1), r_mix_)));
    }
  }
}

void att_mix(const half *xx, const half *sx, const half *k_mix,
             const half *v_mix, const half *r_mix, const int outer_size,
             const int inner_size, half *kx, half *vx, half *rx) {
  // 256 is good enough on most GPUs
  const int32_t BLOCK_SIZE = 256;
  assert(inner_size % BLOCK_SIZE == 0);
  _att_mix<<<inner_size / BLOCK_SIZE, BLOCK_SIZE>>>(
      xx, sx, k_mix, v_mix, r_mix, outer_size, inner_size, kx, vx, rx);
}

struct InplaceSigmoid {
  __device__ __forceinline__ void operator()(int i) const {
    ptr[i] = __float2half(1.0 / (1.0 + exp(-__half2float(ptr[i]))));
  }
  half *ptr;
};

struct InplaceMul {
  __device__ __forceinline__ void operator()(int i) const {
    y[i] = __hmul(x[i], y[i]);
  }
  half *y;
  half *x;
};

/*
   Equivalent Python code:

   xx = F.layer_norm(x, (x.shape[-1],), weight=ln_w, bias=ln_b)
   sx = torch.cat((sx.unsqueeze(0), xx[:-1,:]))
   kx = xx * k_mix + sx * (1 - k_mix)
   vx = xx * v_mix + sx * (1 - v_mix)
   rx = xx * r_mix + sx * (1 - r_mix)

   r = torch.sigmoid(gemm(rx, rw))
   k = gemm(kx, kw, output_dtype=torch.float32)
   v = gemm(vx, vw, output_dtype=torch.float32)

   T = x.shape[0]
   for t in range(T):
       kk = k[t]
       vv = v[t]
       ww = t_first + kk
       p = torch.maximum(pp, ww)
       e1 = torch.exp(pp - p)
       e2 = torch.exp(ww - p)
       sx[t] = ((e1 * aa + e2 * vv) / (e1 * bb + e2)).to(dtype=x.dtype)
       ww = t_decay + pp
       p = torch.maximum(ww, kk)
       e1 = torch.exp(ww - p)
       e2 = torch.exp(kk - p)
       aa = e1 * aa + e2 * vv
       bb = e1 * bb + e2
       pp = p
   out = gemm(r * sx, ow)
   return x + out, xx[-1,:], aa, bb, pp
*/
Tensor att_seq(Tensor x, Tensor sx, Tensor ln_w, Tensor ln_b, Tensor k_mix,
               Tensor v_mix, Tensor r_mix, Tensor kw, Tensor vw, Tensor rw,
               Tensor ow, Tensor t_first, Tensor pp, Tensor aa, Tensor bb,
               Tensor t_decay, /* imm */ Tensor buf, /* out */ Tensor x_plus_out) {
  Tensor xx = at::layer_norm(x, {x.size(-1)}, ln_w, ln_b);
  sx = at::cat({sx.unsqueeze(0), xx.slice(0, 0, -1)}, 0);
  char* buf_ptr = (char*)buf.data_ptr();
  half* kx = (half*)buf_ptr;
  half* vx = kx + x.numel();
  half* rx = vx + x.numel();
  half* wkv_y = rx + x.numel();
  att_mix(data_ptr<half>(xx), data_ptr<half>(sx), data_ptr<half>(k_mix),
          data_ptr<half>(v_mix), data_ptr<half>(r_mix), xx.size(0), xx.size(1),
          kx, vx, rx);
  float* k = reinterpret_cast<float*>(wkv_y + x.numel());
  float* v = k + x.size(0) * kw.size(1);
  half* r = reinterpret_cast<half*>(v + x.size(0) * vw.size(1));

  gemm_fp16_cublas(kx, kw.data_ptr(), k, x.size(0), kw.size(1), kw.size(0), true);
  gemm_fp16_cublas(vx, vw.data_ptr(), v, x.size(0), vw.size(1), vw.size(0), true);
  gemm_fp16_cublas(rx, rw.data_ptr(), r, x.size(0), rw.size(1), rw.size(0), false);
  element_wise(InplaceSigmoid{r}, x.size(0) * rw.size(1));
  cuda_wkv_forward_new(1, x.size(0), x.size(1), data_ptr<float>(t_decay),
                       data_ptr<float>(t_first), k, v, r,
                       wkv_y, data_ptr<float>(aa),
                       data_ptr<float>(bb), data_ptr<float>(pp));
  element_wise(InplaceMul{wkv_y, r}, x.numel());
  gemm_fp16_cublas(wkv_y, ow.data_ptr(), x_plus_out.data_ptr(), x.size(0), ow.size(1), ow.size(0), false);
  x_plus_out += x;
  return xx;
}

|

||||
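`att_seq` carves every intermediate out of the single scratch tensor `buf` by pointer arithmetic: four half-precision regions of `x.numel()` elements (`kx`, `vx`, `rx`, `wkv_y`), then two float regions (`k`, `v`), then a final half region (`r`). The sketch below recomputes those offsets in bytes so the required size of `buf` can be checked. The helper name `att_seq_buf_layout` is hypothetical, and the default that the `kw`, `vw` and `rw` output widths all equal `C` is an assumption, not something the diff states.

```python
HALF_BYTES = 2   # sizeof(half)
FLOAT_BYTES = 4  # sizeof(float)


def att_seq_buf_layout(T, C, k_out=None, v_out=None, r_out=None):
    """Byte offsets of the regions att_seq places in `buf` (hypothetical helper).

    T is x.size(0) and C is x.size(1). k_out, v_out and r_out stand for
    kw.size(1), vw.size(1) and rw.size(1); they default to C (an assumption).
    """
    k_out = C if k_out is None else k_out
    v_out = C if v_out is None else v_out
    r_out = C if r_out is None else r_out

    layout = {}
    offset = 0
    # Four half-precision regions of x.numel() elements each.
    for name in ("kx", "vx", "rx", "wkv_y"):
        layout[name] = offset
        offset += T * C * HALF_BYTES
    # Two float32 GEMM outputs.
    layout["k"] = offset
    offset += T * k_out * FLOAT_BYTES
    layout["v"] = offset
    offset += T * v_out * FLOAT_BYTES
    # Half-precision receptance.
    layout["r"] = offset
    offset += T * r_out * HALF_BYTES
    layout["total_bytes"] = offset
    return layout


print(att_seq_buf_layout(T=3, C=4))
```

With square weights the total comes to `18 * T * C` bytes. The float regions start after an even number of half-precision elements (`4 * T * C`), so `k` stays 4-byte aligned as long as `buf` itself is.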

21

backend-python/rwkv_pip/beta/cuda/element_wise.h

vendored

Normal file


@ -0,0 +1,21 @@

#include <cassert>
#include <cstddef>
#include <cstdint>

template <typename Func> __global__ void _element_wise(Func func, int n) {
  for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
       i += blockDim.x * gridDim.x) {
    func(i);
  }
}

// NOTE: a packed data type (e.g. float4) is overkill for the current sizes
// (4096 in the 7B model and 768 in the 0.1B model),
// and is not faster than the plain float version.
template <typename Func>
void element_wise(Func func, int n) {
  // 256 is good enough on most GPUs
  const int32_t BLOCK_SIZE = 256;
  assert(n % BLOCK_SIZE == 0);
  _element_wise<<<n / BLOCK_SIZE, BLOCK_SIZE>>>(func, n);
}

|

||||
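`_element_wise` uses a grid-stride loop, and `element_wise` launches it with `n / 256` blocks of 256 threads after asserting that `n` is a multiple of 256. A small CPU-side simulation (purely illustrative; `grid_stride_indices` is a made-up name) shows which indices the launch visits and why the divisibility assertion matters.

```python
def grid_stride_indices(n, block_size=256):
    """Simulate the index pattern of the _element_wise launch on the CPU."""
    # Mirrors assert(n % BLOCK_SIZE == 0) in element_wise.
    assert n % block_size == 0
    num_blocks = n // block_size
    stride = block_size * num_blocks  # blockDim.x * gridDim.x
    visited = []
    for block_idx in range(num_blocks):
        for thread_idx in range(block_size):
            i = block_idx * block_size + thread_idx
            while i < n:
                visited.append(i)
                i += stride
    return visited


# 768 is the size the header comment gives for the 0.1B model.
indices = grid_stride_indices(768)
print(len(indices), min(indices), max(indices))
```

Because the grid is sized to exactly `n` threads, the stride equals `n` and each thread handles one element; the loop only does extra work if the launch configuration is later changed to use fewer blocks. Without the divisibility check, the integer division would drop the tail block and leave the last `n % 256` elements unprocessed.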

165

backend-python/rwkv_pip/beta/cuda/ffn.cu

vendored

Normal file


@ -0,0 +1,165 @@

#include "ATen/ATen.h"
#include <cuda_fp16.h>
#include <cuda_runtime.h>
#include <torch/extension.h>

#include "element_wise.h"
#include "util.h"

using torch::Tensor;

void gemm_fp16_cublas(const void *a, const void *b, void *c, int ori_m,
                      int ori_n, int ori_k, bool output_fp32);

__global__ void _ffn_seq_mix(const half *xx, const half *sx, const half *k_mix,
                             const half *r_mix, const int outer_size,
                             const int inner_size, half *kx, half *rx) {
  for (int idx2 = blockIdx.x * blockDim.x + threadIdx.x; idx2 < inner_size;
       idx2 += blockDim.x * gridDim.x) {
    half k_mix_ = k_mix[idx2];
    half r_mix_ = r_mix[idx2];
    for (int row = 0; row < outer_size; ++row) {
      int idx1 = row * inner_size + idx2;
      half xx_ = xx[idx1];
      half sx_ = sx[idx1];
      kx[idx1] = __hadd(__hmul(xx_, k_mix_),
                        __hmul(sx_, __hsub(__float2half(1), k_mix_)));
      rx[idx1] = __hadd(__hmul(xx_, r_mix_),
                        __hmul(sx_, __hsub(__float2half(1), r_mix_)));
    }
  }
}

void ffn_seq_mix(const half *xx, const half *sx, const half *k_mix,
                 const half *r_mix, const int outer_size, const int inner_size,
                 half *kx, half *rx) {
  // 256 is good enough on most GPUs
  const int32_t BLOCK_SIZE = 256;
  assert(inner_size % BLOCK_SIZE == 0);
  _ffn_seq_mix<<<inner_size / BLOCK_SIZE, BLOCK_SIZE>>>(
      xx, sx, k_mix, r_mix, outer_size, inner_size, kx, rx);
}

struct InplaceSigmoid {
  __device__ __forceinline__ void operator()(int i) const {
    ptr[i] = __float2half(1.0 / (1.0 + exp(-__half2float(ptr[i]))));
  }
  half *ptr;
};

struct InplaceReLUAndSquare {
  __device__ __forceinline__ void operator()(int i) const {
    // __hmax is not defined in old cuda
    if (__hgt(ptr[i], __float2half(0))) {
      ptr[i] = __hmul(ptr[i], ptr[i]);
    } else {
      ptr[i] = __float2half(0);
    }
  }
  half *ptr;
};

struct InplaceFma {
  __device__ __forceinline__ void operator()(int i) const {
    a[i] = __hfma(a[i], b[i], c[i]);
  }
  half *a;
  const half *b;
  const half *c;
};

/*
   Equivalent Python code:

   xx = F.layer_norm(x, (x.shape[-1],), weight=ln_w, bias=ln_b)
   sx = torch.cat((sx.unsqueeze(0), xx[:-1,:]))
   kx = xx * k_mix + sx * (1 - k_mix)
   rx = xx * r_mix + sx * (1 - r_mix)

   r = torch.sigmoid(gemm(rx, rw))
   vx = torch.square(torch.relu(gemm(kx, kw)))
   out = r * gemm(vx, vw)
   return x + out, xx[-1,:]
*/
Tensor ffn_seq(Tensor x, Tensor sx, Tensor ln_w, Tensor ln_b, Tensor k_mix,
               Tensor r_mix, Tensor kw, Tensor vw, Tensor rw,
               /* imm */ Tensor buf,
               /* out */ Tensor x_plus_out) {
  Tensor xx = at::layer_norm(x, {x.size(-1)}, ln_w, ln_b);
  sx = at::cat({sx.unsqueeze(0), xx.slice(0, 0, -1)}, 0);
  char *buf_ptr = (char *)buf.data_ptr();
  half *kx = (half *)buf_ptr;
  half *rx = kx + x.numel();
  half *vx = rx + x.numel();
  half *r = vx + x.size(0) * kw.size(1);
  ffn_seq_mix(data_ptr<half>(xx), data_ptr<half>(sx), data_ptr<half>(k_mix),
              data_ptr<half>(r_mix), xx.size(0), xx.size(1), kx, rx);

  gemm_fp16_cublas(rx, rw.data_ptr(), r, x.size(0), rw.size(1), x.size(1),
                   false);
  element_wise(InplaceSigmoid{r}, x.size(0) * rw.size(1));
  gemm_fp16_cublas(kx, kw.data_ptr(), vx, x.size(0), kw.size(1), x.size(1),
                   false);
  element_wise(InplaceReLUAndSquare{vx}, x.size(0) * kw.size(1));
  gemm_fp16_cublas(vx, vw.data_ptr(), x_plus_out.data_ptr(), x.size(0),
                   vw.size(1), vw.size(0), false);
  element_wise(InplaceFma{data_ptr<half>(x_plus_out), r, data_ptr<half>(x)},
               x_plus_out.numel());
  return xx;
}

struct FfnOneMix {
  __device__ __forceinline__ void operator()(int idx) {
    half k_mix_ = k_mix[idx];
    half r_mix_ = r_mix[idx];
    half xx_ = xx[idx];
    half sx_ = sx[idx];
    kx[idx] = __hadd(__hmul(xx_, k_mix_),
                     __hmul(sx_, __hsub(__float2half(1), k_mix_)));
    rx[idx] = __hadd(__hmul(xx_, r_mix_),
                     __hmul(sx_, __hsub(__float2half(1), r_mix_)));
  }
  half *k_mix;
  half *r_mix;
  half *xx;
  half *sx;
  half *kx;
  half *rx;
};

/*
   Equivalent Python code:

   xx = F.layer_norm(x, (x.shape[-1],), weight=ln_w, bias=ln_b)
   kx = xx * k_mix + sx * (1 - k_mix)
   rx = xx * r_mix + sx * (1 - r_mix)

   r = torch.sigmoid(gemm(rx, rw))
   vx = torch.square(torch.relu(gemm(kx, kw)))
   out = r * gemm(vx, vw)
   return x + out, xx
*/
Tensor ffn_one(Tensor x, Tensor sx, Tensor ln_w, Tensor ln_b, Tensor k_mix,
               Tensor r_mix, Tensor kw, Tensor vw, Tensor rw,
               /* imm */ Tensor buf,
               /* out */ Tensor x_plus_out) {
  Tensor xx = at::layer_norm(x, {x.size(-1)}, ln_w, ln_b);
  char *buf_ptr = (char *)buf.data_ptr();
  half *kx = (half *)buf_ptr;
  half *rx = kx + x.numel();
  half *vx = rx + x.numel();
  half *r = vx + x.size(0) * kw.size(1);
  element_wise(FfnOneMix{data_ptr<half>(k_mix), data_ptr<half>(r_mix),
                         data_ptr<half>(xx), data_ptr<half>(sx), kx, rx},
               x.numel());
  // vector * matrix, so m = 1
  gemm_fp16_cublas(rx, rw.data_ptr(), r, 1, rw.size(1), rw.size(0), false);
  element_wise(InplaceSigmoid{r}, rw.size(1));
  gemm_fp16_cublas(kx, kw.data_ptr(), vx, 1, kw.size(1), kw.size(0), false);
  element_wise(InplaceReLUAndSquare{vx}, kw.size(1));
  gemm_fp16_cublas(vx, vw.data_ptr(), x_plus_out.data_ptr(), 1, vw.size(1),
                   vw.size(0), false);
  element_wise(InplaceFma{data_ptr<half>(x_plus_out), r, data_ptr<half>(x)},
               x_plus_out.numel());
  return xx;
}

|

||||
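The comment above `ffn_one` gives the single-token channel-mixing step as PyTorch pseudocode. For checking the kernel against something easy to step through, a float-precision reference in plain Python might look like the sketch below. The helper names (`layer_norm`, `vec_mat`, `ffn_one_reference`) are made up for this illustration, weights are plain nested lists with `rows = input size`, and `eps = 1e-5` is assumed to match the default of `at::layer_norm`; the fp16 rounding of the real kernel is not reproduced.

```python
import math


def layer_norm(x, w, b, eps=1e-5):
    """Layer norm over a 1-D list, using the biased variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    inv = 1.0 / math.sqrt(var + eps)
    return [(xi - mean) * inv * wi + bi for xi, wi, bi in zip(x, w, b)]


def vec_mat(vec, mat):
    """Row vector (length m) times an m x n matrix given as nested lists."""
    return [sum(vec[i] * mat[i][j] for i in range(len(vec)))
            for j in range(len(mat[0]))]


def ffn_one_reference(x, sx, ln_w, ln_b, k_mix, r_mix, kw, vw, rw):
    xx = layer_norm(x, ln_w, ln_b)
    # Token shift: blend the current token with the previous one (sx).
    kx = [a * m + s * (1 - m) for a, s, m in zip(xx, sx, k_mix)]
    rx = [a * m + s * (1 - m) for a, s, m in zip(xx, sx, r_mix)]
    r = [1.0 / (1.0 + math.exp(-z)) for z in vec_mat(rx, rw)]  # sigmoid
    vx = [max(z, 0.0) ** 2 for z in vec_mat(kx, kw)]           # relu, then square
    out = [ri * oi for ri, oi in zip(r, vec_mat(vx, vw))]
    return [xi + oi for xi, oi in zip(x, out)], xx


y, xx = ffn_one_reference(
    x=[1.0, -1.0], sx=[0.0, 0.0], ln_w=[1.0, 1.0], ln_b=[0.0, 0.0],
    k_mix=[1.0, 1.0], r_mix=[1.0, 1.0],
    kw=[[1.0], [0.0]], vw=[[1.0, 1.0]], rw=[[0.0, 0.0], [0.0, 0.0]])
print(y, xx)
```

The last line of the reference, `x + r * gemm(vx, vw)`, is the step the kernel performs with `InplaceFma`, which computes `a * b + c` with `a` holding the GEMM output, `b` the receptance and `c` the residual input.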

128

backend-python/rwkv_pip/beta/cuda/gemm_fp16_cublas.cpp

vendored

Normal file


@ -0,0 +1,128 @@

|

||||

#include <cublas_v2.h>

|

||||

#include <cuda.h>

|

||||

#include <cuda_fp16.h>

|

||||

#include <cuda_runtime.h>

|

||||

#include <torch/extension.h>

|

||||

|

||||

#define CUBLAS_CHECK(condition) \

|

||||

for (cublasStatus_t _cublas_check_status = (condition); \

|

||||

_cublas_check_status != CUBLAS_STATUS_SUCCESS;) \

|

||||

throw std::runtime_error("cuBLAS error " + \

|

||||

std::to_string(_cublas_check_status) + " at " + \

|

||||

std::to_string(__LINE__));

|

||||

|

||||

#define CUDA_CHECK(condition) \

|

||||

for (cudaError_t _cuda_check_status = (condition); \

|

||||

_cuda_check_status != cudaSuccess;) \

|

||||

throw std::runtime_error( \

|

||||

"CUDA error " + std::string(cudaGetErrorString(_cuda_check_status)) + \

|

||||